Can Jony Ive and Sam Altman build the fourth great interface? That’s the question behind io

[Images: Jason marz/Getty Images, Yevhen Borysov/iStock/Getty Images Plus]

You couldn’t have missed the news: Jony Ive and Sam Altman have teamed up, after OpenAI acquired Ive’s company io for $6.5 billion. The plan? For Ive, and a sizable team of ex-employees from Apple, it’s to create a series of hardware products for OpenAI.

The news alone sent Apple’s shares down 1.8%, as two of the most celebrated software and hardware development teams of the modern era combined forces to realize the potential of artificial intelligence and change the way we live. Hopefully for the better.

The first io product, according to The Wall Street Journal, arrives in 2026. It will be a small object “capable of being fully aware of a user’s surroundings and life.” Ive says it will accompany a smartphone and laptop as a third device, one you can carry on your person or set on a table. I imagine an environmental (audio, video, etc.) monitor the size of a macaron or an iPod shuffle.

Despite the immensity of the partnership, it’s easy to be skeptical. After all, AI hardware has flopped thus far, due to a lack of vision and a lack of execution. And as wondrous as ChatGPT is, it still hallucinates and requires vast amounts of energy to train and operate. But within these barely explored large language models, there’s still hidden potential that designers have yet to tap.

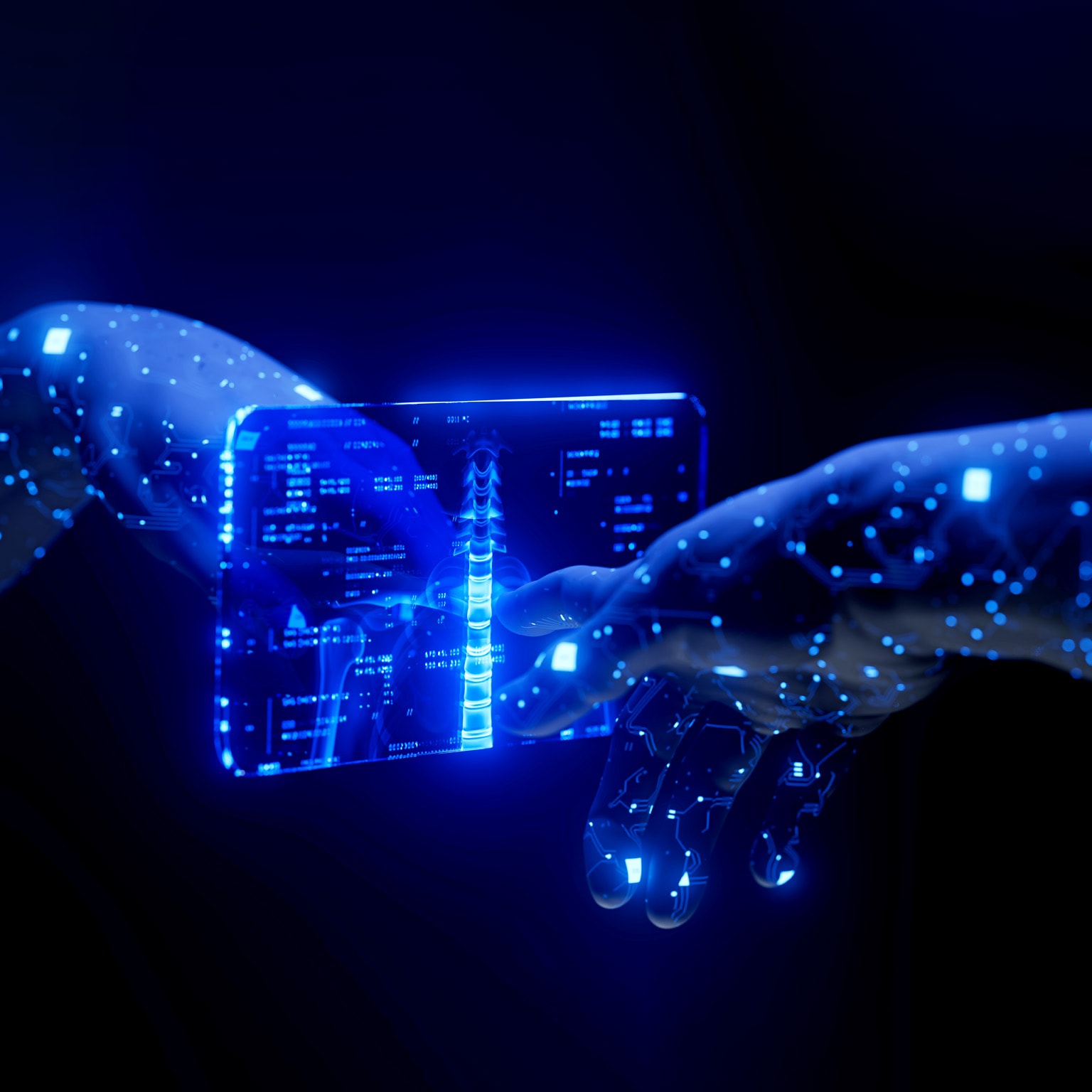

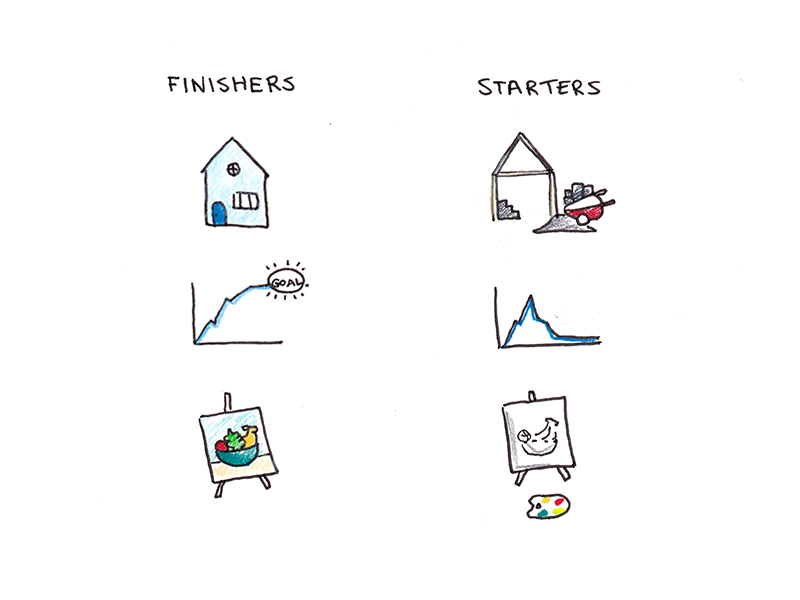

As Ive told me back in 2023, there have been only three significant modalities in the history of computing. After the original command line, we got the graphical user interface (the desktop, folders, and mouse of Xerox, Mac OS, and Windows), then voice (Alexa, Siri), and, with the iPhone, multitouch (not just the ability to tap a screen, but to gesture and receive haptic feedback). When I brought up other examples, Ive quickly nodded but dismissed them as “tributaries” of experimentation. Then he said that, to him, the promise and excitement of building new AI hardware lay in the possibility of introducing a new breakthrough modality for interacting with a machine. A fourth modality.

Ive’s fourth modality, as I gleaned, was about translating AI intuition into human sensation. And it’s exactly the sort of technology we need to usher in ubiquitous computing, also called quiet computing or ambient computing. These ideas trace back to the late computer scientist Mark Weiser, who coined the term “ubiquitous computing” and who in the 1990s began dreaming of a world that freed us from our desktop computers with devices that were one with our environment. Weiser did much of this work at Xerox PARC, the same R&D lab whose mouse-driven graphical interface Steve Jobs would eventually adapt for the Macintosh. (I would also be remiss to ignore that ubiquitous computing is the foundation of the sci-fi film Her, one of Altman’s self-stated goalposts.)

I’ve written about the premise and promise of ubiquitous computing at length, and I’ve been lucky enough to speak with several of Weiser’s peers. But one idea from Weiser’s theories has stuck with me most. He often compared the vision of quiet computing to a forest. In a forest, you’re surrounded by information: Plants, animals, and weather are all signaling you at once. And yet, despite your senses taking in all this data, you’re never overwhelmed. You never find yourself distracted or unhappy. (Perhaps it’s disconcerting when the storm clouds roll in, but I’d still take the sound of low rolling thunder over a Microsoft Teams notification any day.)

Ive never described what that fourth modality would look like; he chooses his descriptors carefully and would not pigeonhole a burgeoning idea with limiting words. But for a man who planted 9,000 native trees when designing Apple Park and who has acknowledged his own responsibility for the smartphone’s negative impacts, it’s hard to imagine he feels all that differently from Weiser. All of that comes to mind as I analyze the very little we know about the first io product.

This first machine from io seems to be the input device that ubiquitous computing needs. I imagine the macaron will leverage notifications on your phone and audio in your ear to communicate with you. Down the line, the partnership has teased “a family of products,” so who knows what other UX possibilities io could dream up.

While we can barely say anything more specific, there does seem to be a philosophical fork in the road here. Google, Apple, Meta, and Snap all appear to be betting on smart glasses, with sensors and pixels sitting in front of your eyes all the time, to introduce ubiquitous computing. At least for launch, io is doing the opposite. All of the leaked details so far point toward io developing the quietest, most discreet computing device we’ve ever had. I think of it as a silent conductor for the orchestra of products we already own, one that may someday do more.

Drafting off the smartphone

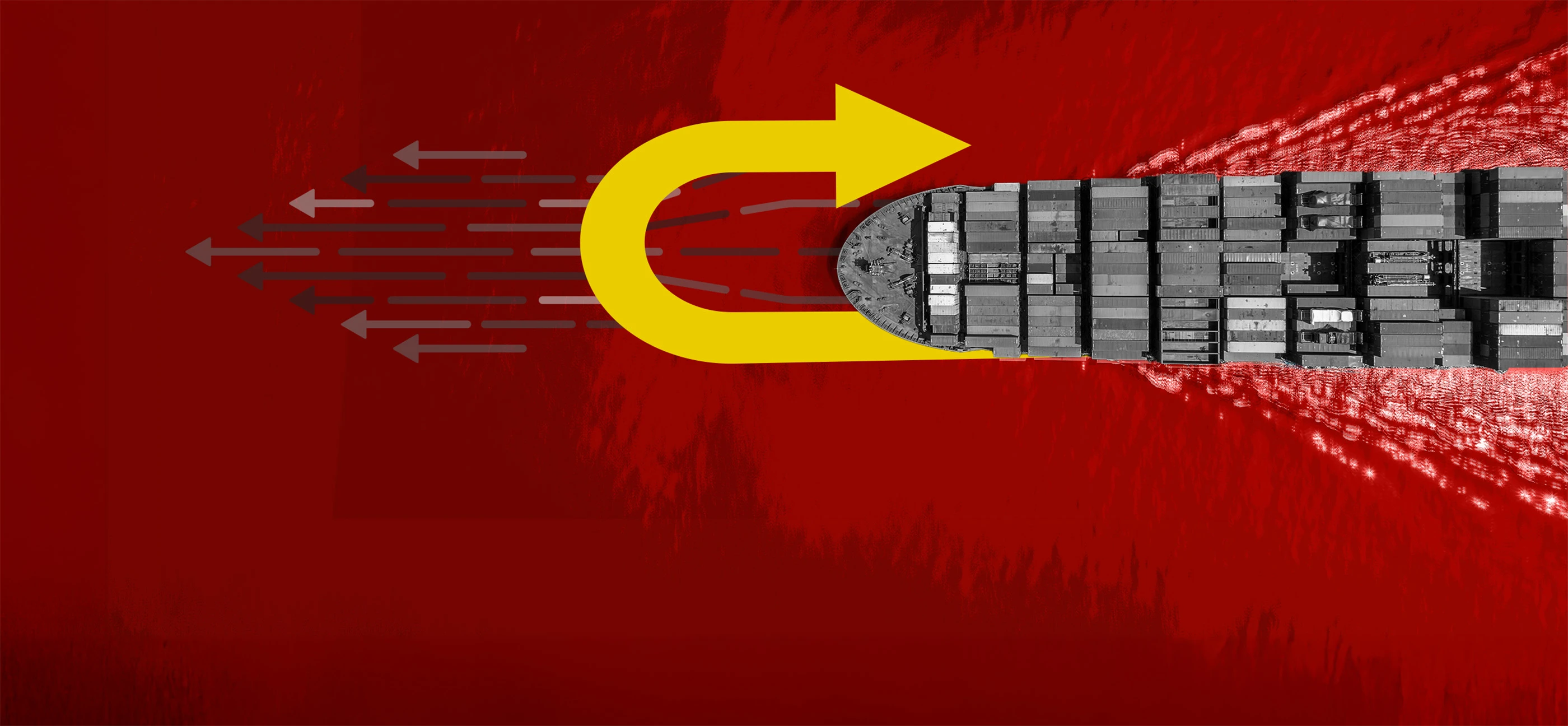

The inconvenient truth for any innovator planning to disrupt consumer hardware is that there’s far more competition than there was when the Walkman, iPod, or Razr came around. There are 7.21 billion smartphones in the world today, nearly one per person, fueling a smartphone hardware industry worth $434 billion in 2025, according to IDC. (Do note: Almost all of them, regardless of brand, are still designed along the lines of Ive and his team’s original vision.)

These supercomputing screens aren’t simply ubiquitous; they are essential. We deeply depend on the screens in our pockets: for 4K social media videos that go global in an instant, turn-by-turn GPS directions that navigate us through our lives, the infrastructure of hailing an Uber or Lyft, the mindless ease of checking out at stores, frictionless rides on public transit, remote control of our appliances, and, when all else fails, the option to make calls via satellite. There is no future in which the smartphone suddenly goes extinct, not because it’s perfect for society but because it’s so ingrained in so many disparate parts of our living infrastructure. A world suddenly without working smartphones would plunge us into some level of chaos.

The first error of the Humane Ai Pin, the hyped AI gadget from Apple alum Imran Chaudhri that Altman backed, was claiming it could replace your phone by, more or less, simply removing the screen and sticking it on your shirt. (The second error was that it just didn’t work.)

The first io device seems to acknowledge the phone’s inertia. Instead of presenting itself as a smartphone killer like the Ai Pin or as a fabled “second screen” like the Apple Watch, it’s been positioned as a third, er, um . . . thing next to your phone and laptop. Yeah, that’s confusing, and it risks making the io product seem nonessential. But it also appears to be a necessary strategy: Rather than topple these screened devices, it will attempt to draft off them.

On paper, the idea is so vague that it’s either dull or exhilarating, depending on your disposition. Personally, I think it’s so darn hard to pull off that I wouldn’t give it much attention if it weren’t for the team building it. Ive founded io with some of the greatest engineering and design talent from Apple. He also founded his firm LoveFrom with much of his core design team from Apple. These two firms will be tag-teaming with OpenAI, the single biggest force behind the current AI era.

By comparison, as I write this, a Limitless pendant sits on my desk, fully charged, fully unused. It’s an AI device that listens to your life and transcribes everything. Designed by Ammunition, the lauded design firm behind Beats headphones, it’s slick, small, and carefully realized. It clamps onto any fabric with a magnet. But after a week with it, I still don’t know when or where I should wear it. With my family? Feels weird. At work? I work remotely, so Zoom, Teams, Slack, and every other platform are already recording me. (And at any job, there’s sensitive stuff you don’t want recorded.) So that’s out. What does that leave? Hanging out with friends? As a journalist, that’s the one part of my life that’s thoroughly off the record, so no thank you.

How deep is too deep into your life?

My concerns about this io device are many, though privacy is top of mind. I can imagine some interesting UX around opting in to being recorded, but will OpenAI go that direction or just brute-force its way into our lives? I can’t help but remember that despite Apple’s privacy-first messaging, the iPhone went from a wondrous pocket computer to the ultimate personal advertising tracker, a decision made at the level of its chipset and supporting APIs.

However, my privacy concerns are perhaps more existential than the fear of my personal conversations being monetized. I wonder: If there’s always an AI around, will we still be allowed privacy in our own thoughts? Will we be afforded the privacy to draw our own conclusions?

Before these artificial entities, our lives were largely colored by the people around us: our families, spouses, friends, and coworkers. A chance encounter with a stranger can make a day or spoil it. It’s so often someone else’s take on events that shapes our own. When I leave a movie theater, for instance, I almost always end up agreeing with my companion’s take.

Let’s assume we all acquiesce to this new wave of technology, in which an AI companion sits alongside us all the time. Suddenly, every experience we have is being processed by a third party, one that will analyze and summarize our actions and interactions. It will shape what we do next, a vast acceleration of how algorithms shape our experience on social media today.

Even if this io product doesn’t live in our eyes, the extreme subtlety with which it chronicles our lives means it may live in our hearts. If that sounds cheesy, fair! But imagine if ChatGPT summarized your life with all the nuance of Apple’s AI today: Mark, confused by menu, ordered cowboy burger. Nobody would use the thing. A system like this inherently has to go deeper to prove its utility, and in doing so it takes on a heavy burden of responsibility.

A subjective computer

Technologists discussing AI today often draw a line between deterministic and probabilistic interfaces. A deterministic interface is what we’ve had for the past 50 years. Buttons always lead you through the same preplanned routes to play a song or pull up an email. Every app is essentially a permutation of a calculator that always arrives at a perfect end point. With probabilistic AI, however, every question is more like a spin of the roulette wheel. ChatGPT assembles its responses like an infinite Rubik’s Cube twisting together every piece of media ever recorded. No one knows exactly what you’ll get, so the argument goes that probabilistic interfaces have to be built to accommodate the unknown.

If the Star Trek computer, with clear answers like those from Alexa or Siri, is a deterministic computer, then the Star Trek holodeck, with its ever-shifting invented characters and worlds, is a probabilistic one.
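To make the deterministic-versus-probabilistic distinction concrete, here is a toy Python sketch. It is not how ChatGPT or any real product works (large language models generate text token by token from a learned model); the function names, candidate replies, and scores below are all hypothetical. The button-style handler returns the same result every time, while the “roulette” version samples one reply in proportion to a softmax over made-up scores, with a temperature parameter controlling how spread out the draws are.

```python
import math
import random


def deterministic_route(button: str) -> str:
    """Calculator-style interface (hypothetical): the same button always yields the same result."""
    routes = {
        "play": "Now playing: track 1",
        "mail": "Opening inbox",
    }
    return routes[button]


def probabilistic_reply(
    scores: dict[str, float], temperature: float, rng: random.Random
) -> str:
    """Toy 'roulette' interface (hypothetical): sample one candidate reply
    with probability proportional to exp(score / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    replies = list(scores)
    scaled = [scores[r] / temperature for r in replies]
    top = max(scaled)  # subtract the max before exponentiating, for numerical stability
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(replies, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical candidate replies with made-up scores.
    candidates = {"Sounds tense.": 2.0, "Seems friendly.": 1.5, "Hard to say.": 0.5}

    # Same input, same output, every time.
    print([deterministic_route("play") for _ in range(3)])
    # Same input, output that can vary from draw to draw.
    print([probabilistic_reply(candidates, 1.0, rng) for _ in range(5)])
    # A very low temperature makes the top-scored reply nearly certain.
    print([probabilistic_reply(candidates, 0.01, rng) for _ in range(5)])
```

Turning the temperature toward zero makes the sampled version pick the top-scored reply almost every time; raising it spreads the choices across the other candidates.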

But I want to challenge that framing as inhuman, and irrelevant to our discussion of intimate, ambient computing. Instead of deterministic versus probabilistic, I think AI is shifting us from objective to subjective. When a Fitbit counts your steps and calories burned, that’s an objective interface. When you ask ChatGPT to gauge the tone of a conversation, or whether you should eat better, that’s a subjective interface. It offers perspective, bias, and, to some extent, personality. It’s not just serving facts; it’s offering interpretation.

The AI companion from io needs to be the first subjective interface. And that makes it as complicated and risky as any other relationship we have.

Years ago, when “big data” was all the rage, I asked the question: Should Google tell you if you have cancer? The idea was that it could track the search patterns of sick people over time and predict where their searches would go next. So shouldn’t it intervene when it spots you following a search pattern associated with a known disease?

The io device, presumably recording and analyzing your whole life, would have a similar remit, but with all sorts of additional questions. If it followed along in a conversation, and you couldn’t think of the name of that book you read . . . should it whisper that name into your ear? If it caught you misremembering, or even lying, should it call you out? Privately or publicly?

When Microsoft built Cortana, it interviewed real executive assistants, largely to get the system’s voice right: to know what it might or might not say, and how it could respond to certain questions. That level of sophistication was fine for scheduling a meeting or asking about the weather (conversational calculators, essentially), but we are so far beyond that now. An AI listening in on or filming a room can do more than remember where you put your keys. Research is showing that it can spot fine-grained relationships that humans can’t even identify. Archetype AI, for instance, has demonstrated that with nothing more than an A/V feed, AI can predict everything from the swing of a pendulum to whether a workplace accident might occur. An io device will be able to hear so much more than what you say.

That’s why, as nonsensical as this unknown product might seem to critics (I’m supposed to buy this surveillance thingy?!?), I can’t question its potential utility. Executed well (that’s a big caveat!), it could be the first step toward a realized vision of ubiquitous computing: a little buddy in your pocket that experiences life with you so that you don’t need to explain (or input) what’s going on. And as wild as the alternative that other companies are pursuing may be (holograms floating in front of your face), the vision for a quieter era of computing is about as old as computing itself.

It’s as if we realized, from the earliest days, that our natural world was already a utopia. Now it will take incredible creativity and restraint from OpenAI, io, and LoveFrom to enhance what remains of this world rather than seize its last bits.