Writing Machine Intelligence: Lessons from John Ball

Explore key lessons in writing machine intelligence from John Ball, highlighting his unique approach to AI inspired by neuroscience and language structure.

The field of machine intelligence is undergoing a transformative shift. As traditional AI methods mature, new thinkers are pushing boundaries by blending neuroscience, cognitive modeling, and deep language understanding. One such visionary is John Ball, whose groundbreaking work redefines how we think about artificial general intelligence (AGI). By studying the brain’s functional systems, Ball provides a new lens through which we can build thinking machines that process information more like humans.

John Ball’s theories differ fundamentally from conventional machine learning models. Rather than relying on vast datasets and probabilistic models, he emphasizes structured cognitive functions. He argues that intelligence is not just about computation but about understanding. Ball believes machines should mimic how the brain recognizes patterns, stores knowledge, and applies logic. His approach asks us to rethink how intelligence is represented, aligning it more closely with mental processes than with statistical inference.

The Philosophy Behind Machine Thinking

Many AI systems today operate by analyzing patterns in massive datasets. This is useful, but the resulting systems can be inflexible when they meet situations their data did not cover. Ball critiques the approach by pointing out that human intelligence also involves reasoning, memory recall, emotional context, and adaptation to new situations. His work focuses on semantic processing: machines that understand language the way humans do, rather than responding based on keywords or training data alone.

Ball’s vision stems from a belief in deep structure. He advocates for systems that use a concept-based framework, much like how humans assign meanings to words and form connections between them. This semantic approach forms the foundation of his brain-inspired model known as Patom Theory. It serves as a blueprint for building machines that comprehend language through innate, brain-like structures rather than ad hoc rules hand-coded by developers.

From Patom Theory to Practical Systems

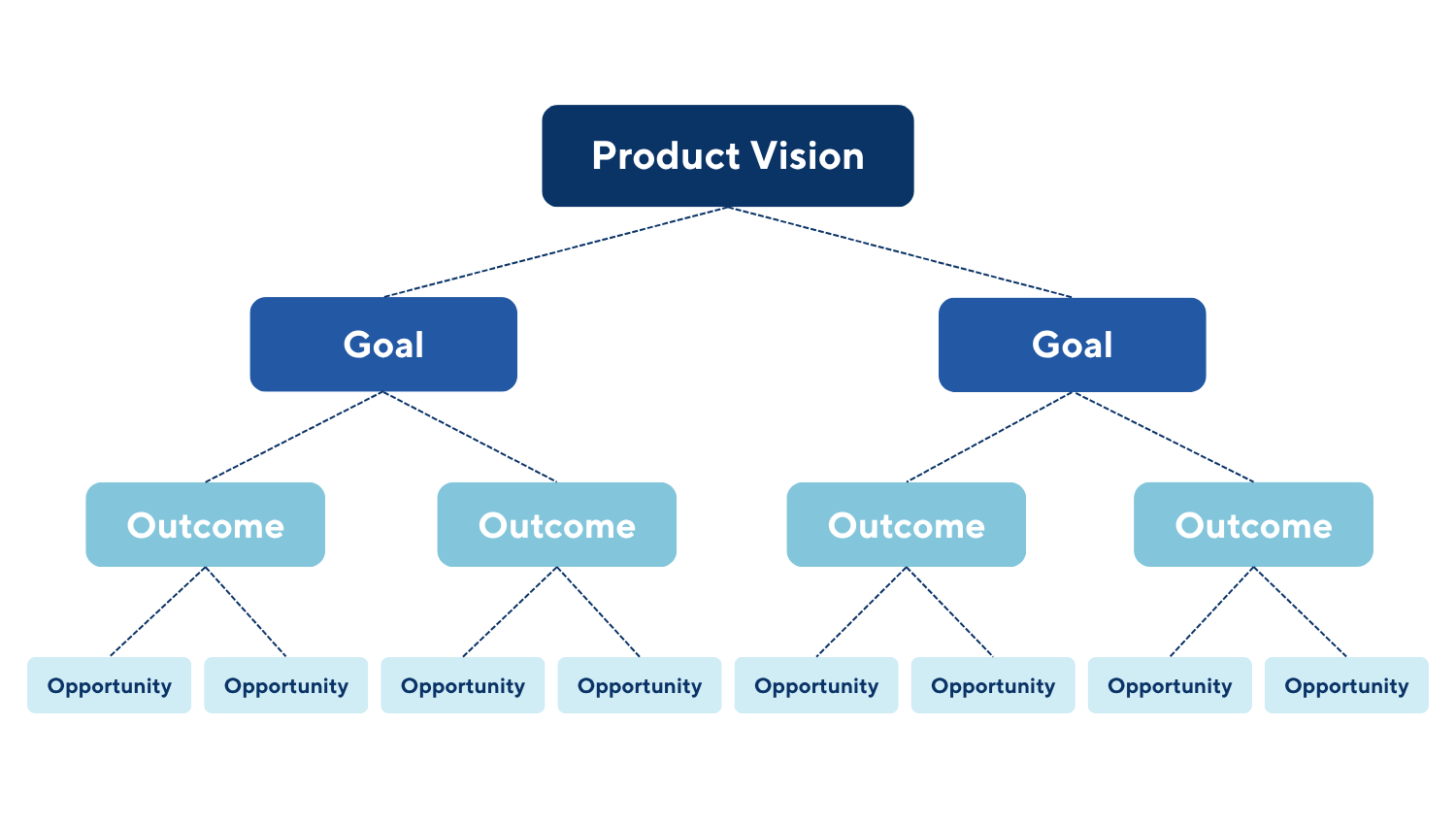

At the heart of Ball’s contributions lies Patom Theory, which proposes that the brain stores and processes information in a nested, pattern-oriented structure. According to this model, the brain organizes thoughts through hierarchical patterns that interact dynamically. For AI developers, Ball’s argument implies moving away from rigid, hand-coded logic toward adaptive, structure-based programming that mimics biological thought processes.
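To make the idea of nested, hierarchical patterns concrete, here is a minimal Python sketch. It is not Ball’s implementation and is not taken from Patom Theory itself; the `Pattern` class, its fields, and the tiny vocabulary are all assumptions made for illustration. It only shows how small patterns can be combined into larger ones, with each level reusing the level below.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass(frozen=True)
class Pattern:
    """Hypothetical nested pattern: a set of literal tokens OR a sequence of sub-patterns."""
    name: str
    literals: frozenset[str] = frozenset()
    parts: tuple[Pattern, ...] = ()

    def match(self, tokens: list[str], start: int = 0) -> int | None:
        """Return the index just past the match, or None if the pattern does not match."""
        if self.literals:
            # Leaf pattern: consume exactly one token if it is in the literal set.
            if start < len(tokens) and tokens[start] in self.literals:
                return start + 1
            return None
        # Composite pattern: every sub-pattern must match in order.
        pos = start
        for part in self.parts:
            nxt = part.match(tokens, pos)
            if nxt is None:
                return None
            pos = nxt
        return pos


# Illustrative vocabulary (an assumption, not data from Ball's work).
DET = Pattern("determiner", literals=frozenset({"the", "a"}))
NOUN = Pattern("noun", literals=frozenset({"dog", "ball"}))
VERB = Pattern("verb", literals=frozenset({"chased", "saw"}))

# Larger patterns are built from smaller ones, giving the nesting.
NP = Pattern("noun_phrase", parts=(DET, NOUN))
CLAUSE = Pattern("clause", parts=(NP, VERB, NP))

tokens = "the dog chased a ball".split()
end = CLAUSE.match(tokens)  # 5: all five tokens were consumed
```

The design choice worth noting is that `CLAUSE` never mentions individual words. It is defined only in terms of other patterns, which is the hierarchical property the paragraph above describes.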

What makes Ball’s theory particularly impactful is its application to natural language understanding. Traditional natural language processing (NLP) methods often rely on statistical models such as n-grams or transformer networks. In Ball’s view, these systems can appear intelligent but lack true comprehension. His approach, by contrast, is meant to let a system recognize the semantic roles within a sentence and treat meaning as grounded in context.

By using Patom Theory as a cognitive framework, machines can be trained to parse sentences based on their semantic value rather than frequency. This opens doors to more intuitive interfaces, better language models, and ultimately systems capable of reasoned dialogue. His prototypes have demonstrated promising results, particularly in areas like conversational AI and semantic interpretation.
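The contrast between parsing by semantic value and parsing by frequency can be sketched with a toy example. The code below is an illustration under stated assumptions, not Ball’s parser: the `VERBS` lexicon, the `assign_roles` function, and the two clause shapes it handles are all hypothetical. It shows that an active and a passive sentence share the same semantic roles even though their word-pair statistics differ.

```python
from collections import Counter

# Hypothetical toy lexicon: surface verb form -> predicate name.
VERBS = {"chased": "chase", "saw": "see"}
DETERMINERS = {"the", "a"}


def bigrams(tokens):
    """Surface statistics: counts of adjacent word pairs."""
    return Counter(zip(tokens, tokens[1:]))


def assign_roles(tokens):
    """Return predicate/agent/patient for simple active or passive clauses, else None."""
    content = [t for t in tokens if t not in DETERMINERS]
    # Passive shape: PATIENT was VERB by AGENT
    if (len(content) == 5 and content[1] == "was"
            and content[3] == "by" and content[2] in VERBS):
        return {"predicate": VERBS[content[2]],
                "agent": content[4], "patient": content[0]}
    # Active shape: AGENT VERB PATIENT
    if len(content) == 3 and content[1] in VERBS:
        return {"predicate": VERBS[content[1]],
                "agent": content[0], "patient": content[2]}
    return None


active = "the dog chased the ball".split()
passive = "the ball was chased by the dog".split()

same_meaning = assign_roles(active) == assign_roles(passive)  # True
same_surface = bigrams(active) == bigrams(passive)            # False
```

Both sentences yield `{"predicate": "chase", "agent": "dog", "patient": "ball"}`, while their bigram counts disagree. A real system would need far richer patterns than these two hard-coded shapes; the point is only that role-based output stays stable when the wording changes.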

Building Machines That Think, Not Just Calculate

A major takeaway from John Ball’s work is the importance of cognition over computation. While today’s AI excels at processing speed and data quantity, it falters in areas requiring judgment and adaptation. Ball’s research insists that thinking machines must replicate not just brain functions, but also brain behavior—how humans prioritize, simplify, and make sense of the world.

This emphasis on cognitive modeling has profound implications. In Ball’s view, a true thinking machine must possess both short-term and long-term memory, concept recognition, and self-referential awareness. These elements are absent in most current AI models. He outlines a methodology in which machines simulate the inner dialogue of the human mind. This leads to systems capable of introspection, reasoning, and even self-correction.

The result is a machine that learns contextually, adapts over time, and understands rather than imitates. This is the direction Ball envisions for AGI—not an extension of narrow AI capabilities but a reimagining of the architecture itself.

Real-World Applications and Future Potential

The influence of John Ball’s ideas stretches across multiple industries. His semantic processing models can improve everything from virtual assistants to educational software. Imagine AI tutors that adapt to each student’s learning style or virtual agents that understand customer queries with near-human accuracy. These are not futuristic dreams but real possibilities under Ball’s framework.

Moreover, Ball’s emphasis on structure over data resonates with sectors where interpretability and accountability matter, such as healthcare and finance. Traditional black-box models often fail in environments where understanding the “why” behind a decision is as crucial as the decision itself. Ball’s model provides a clearer audit trail of logic and reasoning, aligning well with the ethical demands of high-stakes AI.
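What an audit trail of logic and reasoning might look like can be shown with a generic rule-based sketch. This is not Ball’s model; it is an ordinary explicit-rule system used here only to illustrate the idea of a decision that carries its own explanation. The `Rule` class, the `decide` function, and the loan thresholds are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """Hypothetical explicit rule: a named condition and the outcome it produces."""
    name: str
    applies: Callable[[dict], bool]
    outcome: str


def decide(facts, rules, default="approve"):
    """Apply rules in order; return the outcome plus a trace of every rule checked."""
    trace = []
    for rule in rules:
        fired = rule.applies(facts)
        trace.append(f"{rule.name}: {'fired' if fired else 'skipped'}")
        if fired:
            return rule.outcome, trace
    trace.append(f"no rule fired: default '{default}'")
    return default, trace


# Illustrative thresholds only (assumptions, not real lending policy).
RULES = [
    Rule("income_below_minimum", lambda f: f["income"] < 20_000, "decline"),
    Rule("debt_ratio_too_high", lambda f: f["debt"] / f["income"] > 0.5, "refer"),
]

decision, trace = decide({"income": 40_000, "debt": 30_000}, RULES)
# decision == "refer"
# trace == ["income_below_minimum: skipped", "debt_ratio_too_high: fired"]
```

Because each step is recorded by name, a reviewer can read the trace and see why the outcome was reached, which is the property a black-box model does not offer by default.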

For software developers and AI researchers, studying John Ball’s work on machine intelligence reveals critical lessons in cognitive modeling and system design. His research encourages a return to first principles: thinking deeply about what intelligence truly means and how it manifests in human cognition. This mindset supports more robust, ethical, and explainable AI systems.

Educational and Ethical Dimensions

Beyond the technical landscape, Ball’s contributions include a strong focus on education. He believes future AI developers must be taught not just how to code but how to think like scientists and philosophers. This includes understanding cognitive science, linguistics, and neurobiology. His interdisciplinary approach invites a broader, more holistic view of AI development.

Ethically, Ball’s framework offers a path forward at a time when concerns about AI bias, autonomy, and misuse are on the rise. By modeling machines after the human brain, Ball believes we can instill not only intelligence but also moral reasoning. Though this remains a long-term goal, it sets a high standard for AI design, urging developers to prioritize human values and transparent logic.

Lessons for Aspiring AI Writers and Developers

John Ball’s journey offers key insights for anyone entering the AI field. One vital lesson is that innovation doesn’t always come from more data or faster computation. Sometimes, it requires stepping back and reexamining core assumptions. Ball’s work demonstrates the power of interdisciplinary thinking and the value of building systems that reflect how people actually think.

His approach also underlines the importance of patience. Developing systems that simulate cognition is complex and time-consuming. However, the payoff is significant: AI that is not just reactive, but reflective. This can lead to better human-machine collaboration and, ultimately, a more harmonious coexistence.

Conclusion

Writing machine intelligence is more than an engineering challenge; it is a philosophical endeavor. John Ball’s work teaches us that to build truly intelligent machines, we must look beyond algorithms and into the structure of thought itself. His Patom Theory and emphasis on semantic understanding are reshaping how we view the AI landscape.

By focusing on how humans process information, Ball presents a powerful alternative to data-hungry models. His vision of AGI is one where machines can think, reason, and understand—not just calculate. For developers, researchers, and thinkers alike, studying Ball’s work provides a roadmap to a more thoughtful and human-aligned future in AI.