Tesla’s self-driving capabilities are now a Looney Tunes cartoon joke

Lidar has long been considered the gold standard of self-driving technology. Most car companies use it, alongside cameras, radar, and AI, to fully assess a vehicle’s environment. There is one notable exception: Tesla.

Elon Musk has always had it out for Lidar, calling it “a crutch,” “a loser’s technology,” and “too expensive.” After experimenting with Lidar in early autonomous driving prototypes, Musk went in a different direction. He dropped radar from Tesla’s production models in 2021, against the advice of his own engineers, opting instead for the “Tesla Vision” system, which relies on cameras and AI alone. This has proven to be one of his biggest mistakes when it comes to Tesla’s future.

Lidar, which works by firing laser beams to capture a car’s surroundings in three dimensions, is widely used in the autonomous vehicle industry because it provides precise depth perception, even in poor visibility. Radar is also needed to detect obstacles farther away and calculate their speed. And yet Musk insisted that vision-based AI alone, using only cameras much as humans use their eyes, is sufficient. As of December 2024, Tesla remains committed to its camera-only Tesla Vision system. “We are confident that this is the best strategy for the future of Autopilot and the safety of our customers,” the company says on its webpage.
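
For readers curious about the ranging principle, here is a minimal sketch. It assumes a simple pulsed time-of-flight sensor, which is one common Lidar design; the article does not say which ranging method any particular car uses, and the function names are illustrative rather than any vendor's API. The sensor times how long a laser pulse takes to bounce back, then halves the round trip to get the distance.

```python
# Illustrative sketch only: assumes pulsed time-of-flight ranging.
# Function names are hypothetical, not taken from any real Lidar SDK.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the distance in meters to a surface, given the time in
    seconds for a laser pulse to travel out and back."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse covers the distance twice (out and back), so halve it.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Return the round-trip time in seconds for a surface at distance_m."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A pulse that returns after 200 nanoseconds hit something about 30 m away.
    print(f"{range_from_round_trip(200e-9):.2f} m")
```

The measurement depends on when the light comes back, not on what the surface looks like, which is why a picture printed on a wall does not change the reading.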

But as a video by Mark Rober, a former NASA engineer turned YouTuber, demonstrates, Tesla Vision may not be the safest option for either drivers or pedestrians.

Rober designed an experiment inspired by the classic Wile E. Coyote and Road Runner cartoons to compare Tesla’s Autopilot with Lidar-based systems. He created a polystyrene wall with an image of a road printed on it and placed it in the middle of a real street to evaluate the reaction of the sensors in his own Tesla Model Y, which relies only on cameras. For comparison, Rober also tested a Lexus RX equipped with Lidar under the same conditions.

In the initial tests, both the Tesla and the Lexus successfully stopped in front of a stationary dummy and another dummy in motion. But the Tesla’s camera-based system, which struggles in poor visibility, failed when adverse conditions were introduced. It could not detect the same dummy in fog and rain, while the Lexus’ Lidar identified it without issue.

The ultimate test was the painted-wall experiment. In Chuck Jones’ classic cartoons, Wile E. Coyote often paints a fake tunnel on a wall, making it appear as though the road continues. The Road Runner always escapes by running through the illusion, while the Coyote, baffled, inevitably crashes into the obstacle.

That’s exactly what happened to Rober’s Tesla, which kept driving until it smashed into the wall. The vehicle’s artificial intelligence trusted what its cameras saw: an uninterrupted road. The Lexus, on the other hand, stopped immediately—its laser beams detected a solid wall, regardless of the image painted on it.

Some have dismissed the test as a gimmick, but it highlights a fundamental flaw: Tesla’s system cannot reliably distinguish real objects from illusions. It misinterprets reality because it relies solely on optical sensors. As seen in the dummy test under rain and fog, poor visibility leads the car’s AI to make dangerous misjudgments. While Lidar scans the environment in 3D regardless of an object’s visual appearance, Tesla’s cameras only process flat images, making them vulnerable to visual deception. This is a well-documented issue in AI systems, as multiple studies have shown.

More concerning, the painted-wall test was conducted under ideal conditions (broad daylight, with no rain or fog), yet the Tesla still failed to recognize the obstacle. The underlying approach has not changed since 2022, when sensor company Luminar conducted a similar test with a child-sized dummy and the Tesla failed in poor visibility.

Another Musk mistake

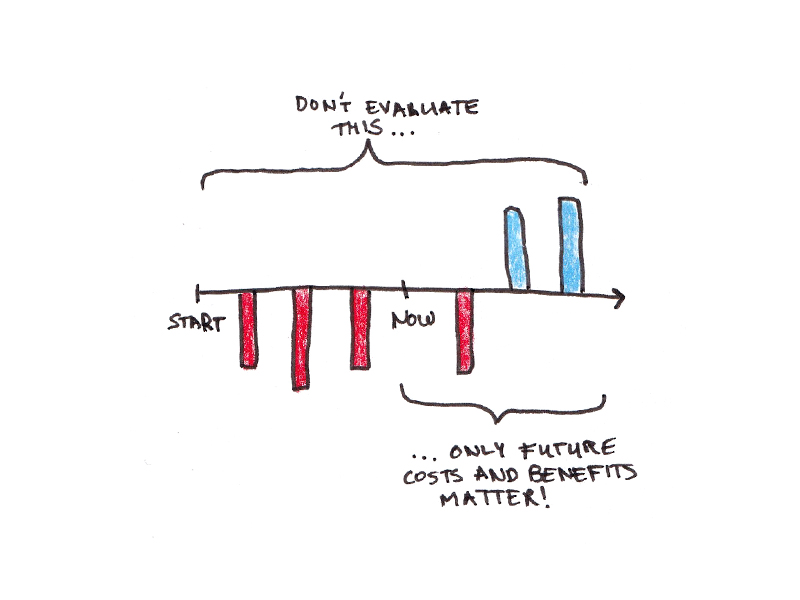

This isn’t the first time Tesla’s disastrous design choices have called its products’ viability into question. Elon Musk’s obsession with only using cameras goes against the strategy of his competitors. As a result, Tesla’s Autopilot has remained stuck at Level 2 autonomy—requiring constant driver supervision—for a decade, while Waymo and Mercedes have reached Level 4, and Chinese manufacturer BYD has reached Level 3, meaning their cars can drive autonomously without human intervention.

Waymo, Alphabet’s autonomous vehicle subsidiary, has demonstrated that its self-driving system allows vehicles to travel 17,311 miles between human interventions. In contrast, Tesla’s misleadingly named Full Self-Driving (FSD) software requires corrections every 71 miles. Waymo’s cars are not perfect, but they are light-years ahead of Tesla’s.
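
To put those two figures on the same scale, here is a quick back-of-the-envelope calculation. It uses only the numbers quoted above and assumes they measure comparable kinds of intervention, which the sources may not guarantee; the variable and function names are illustrative.

```python
# Figures as quoted in the article; names are illustrative.
WAYMO_MILES_PER_INTERVENTION = 17_311
TESLA_FSD_MILES_PER_INTERVENTION = 71


def interventions_per_1000_miles(miles_per_intervention: float) -> float:
    """Convert miles-between-interventions into interventions per 1,000 miles."""
    if miles_per_intervention <= 0:
        raise ValueError("miles per intervention must be positive")
    return 1000.0 / miles_per_intervention


# How many times farther Waymo travels between interventions.
ratio = WAYMO_MILES_PER_INTERVENTION / TESLA_FSD_MILES_PER_INTERVENTION

if __name__ == "__main__":
    print(f"Waymo goes about {ratio:.0f}x farther between interventions")
    print(f"Tesla FSD: {interventions_per_1000_miles(TESLA_FSD_MILES_PER_INTERVENTION):.1f} per 1,000 miles")
    print(f"Waymo:     {interventions_per_1000_miles(WAYMO_MILES_PER_INTERVENTION):.2f} per 1,000 miles")
```

On these numbers, the gap is roughly 244 to 1.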

In 2024, Tesla purchased Lidar sensors from Luminar, leading some to speculate that Musk was reconsidering his stance. But the reality was different. The sensors were only used for reference data collection, not for integration into Tesla’s vehicles. In fact, Musk recently claimed that Tesla no longer needs Lidar for testing. This confirms that he remains committed to his camera-only approach, despite overwhelming evidence of its limitations.

Rather than admitting his mistake, Musk is doubling down. He has been promising full self-driving since 2014, repeatedly claiming the technology would be ready “next year.” Just months ago, he pitched the idea that Tesla would launch autonomous Cybercab taxis by 2026—while Waymo already operates robotaxis in multiple U.S. cities, and brands like Mercedes and BYD have secured certification for driverless operation on roads in Germany and China.

After watching Rober’s test, the notion that Tesla can catch up to its competitors without using Lidar seems as viable as Wile E. Coyote’s plans to catch the Road Runner.
