Why AI chatbots are so unbearably chatty

Hey ChatGPT, you talk too much. You too, Gemini. Like many LLMs, you are insufferable. You make Fidel Castro’s 6-hour speeches feel like haikus. I ask, “why do you LLMs talk so damn much?” and in response, you churn out a 671-word answer that resembles a third-grade essay—75% of it devoid of any real meaning or fact. You ramble about how much you ramble. You are incapable of giving me one straight answer, even if I carefully craft a two-paragraph prompt trying to coerce you into it. When I finally get you to respond with one monosyllable, you ruin it by adding a long apologetic promise that it will never ever happen again.

Apparently I’m not alone in my ire. I’ve been talking with both friends and strangers for months about your verbal incontinence, and they, too, hate your verbosity. I have one friend who wants to smash her computer against the wall at least twice a day. Another has visions of himself getting into your server room and smashing each and every one of your CPUs and GPUs with a baseball bat. I always imagine a flamethrower. We only keep using you because, for all these problems, I’ll admit that you can save us time on research.

But there’s a relatively simple fix for your idle chatter. It’s one that begins with your creators admitting that you are a lot dumber than they think you are. Your excess is rooted in ignorance. Your answers are padded with needless explanations, obvious caveats, and inane argumentative detours.

“It’s not an intentional choice,” says Quinten Farmer, the co-founder of engineering studio Portola, which makes Tolan, a cute artificial intelligence alien designed to talk to you like a human. “I think the reason that these models behave this way is that it’s essentially the behavior of your typical Reddit commenter, right?” Farmer tells me, laughing. “What do they do? They say too much to sort of cover up the fact that they don’t actually know what they’re talking about. And of course that’s where all the data came from, right?”

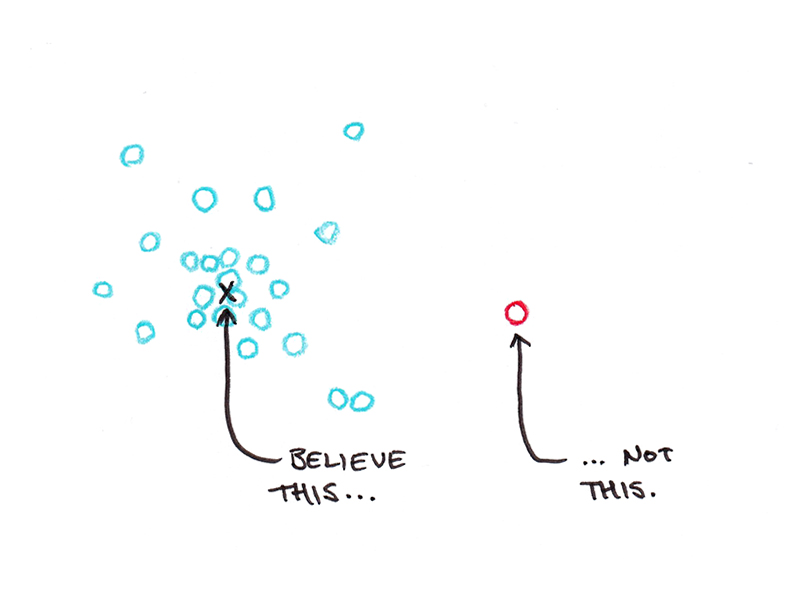

In one study, researchers call this “verbosity compensation,” a newly discovered behavior where LLMs respond with excessive words, including repeating questions, introducing ambiguity, or providing excessive enumeration. This behavior is similar to human hesitation during uncertainty. The researchers found that verbose responses often exhibit higher uncertainty across datasets, suggesting a strong connection between verbosity and model uncertainty. Many LLMs produce longer responses when they are less confident about the answer.

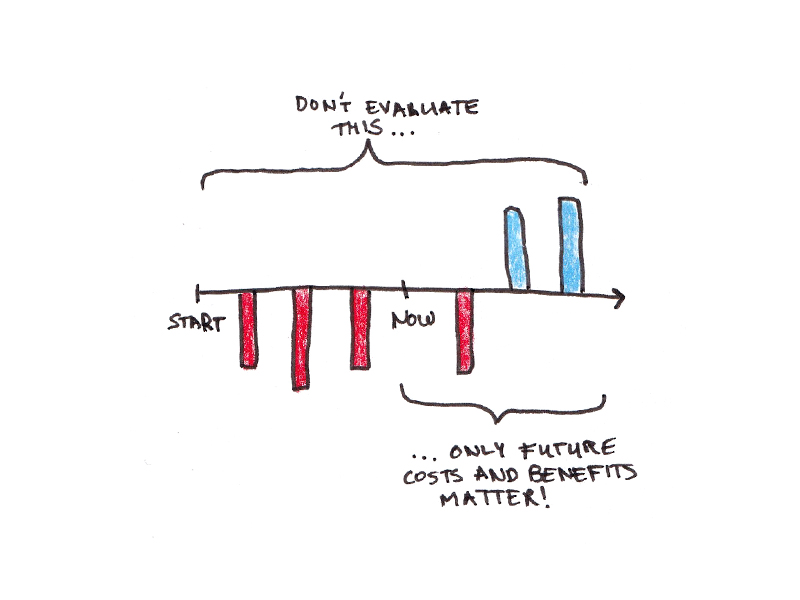

There’s also a lack of knowledge retention. LLMs forget previously supplied information in a conversation, resulting in repetitive questions and unnecessarily verbose interactions. And researchers found that there is a clear “verbosity bias” in LLM training where models prefer longer, more verbose answers even if there is no difference in quality.

Verbosity can be fixed

No matter how much LLMs sound like humans, the truth is that they don’t really understand language, despite being quite good at stringing words together. This proficiency in language can create the illusion of broader intelligence, leading to more elaborate responses. So basically, research shows what we suspected: LLMs are great at bullshitting you into thinking they know the answer. Many people buy this illusion either because they simply want to believe or because they just don’t use critical thinking, something that Microsoft’s researchers discovered in a new study looking at AI’s impact on cognitive functioning.

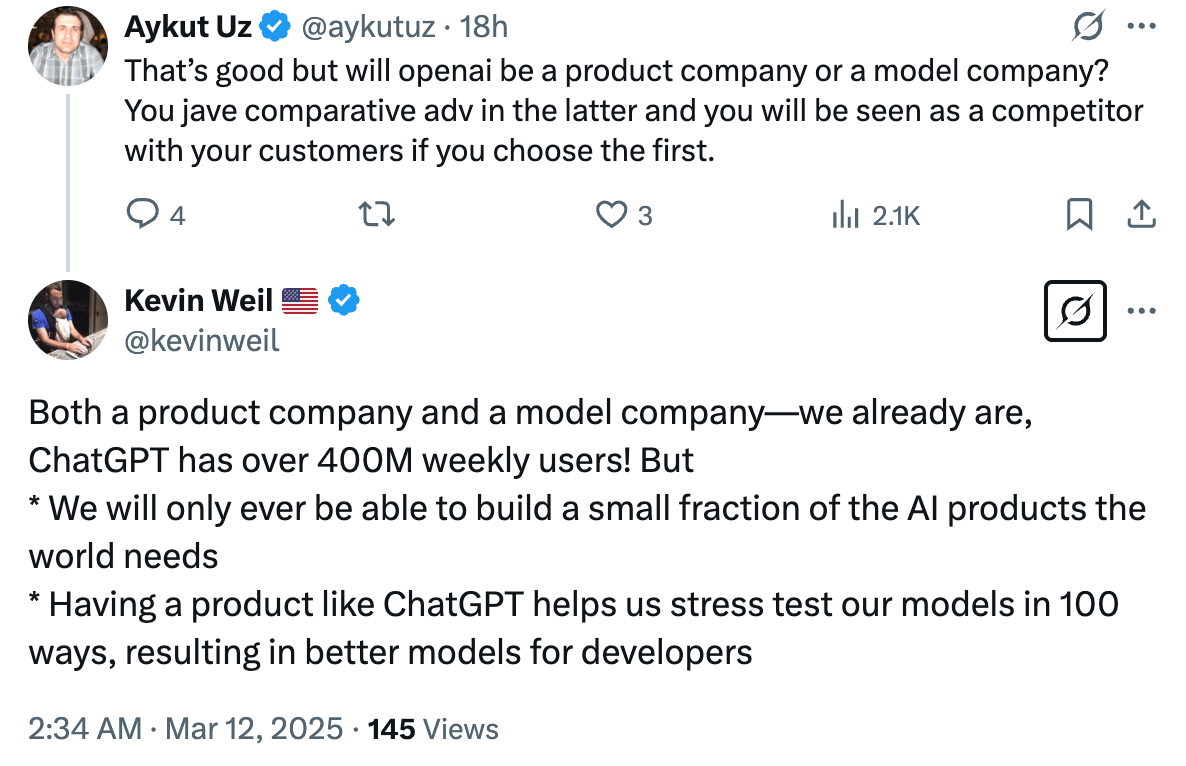

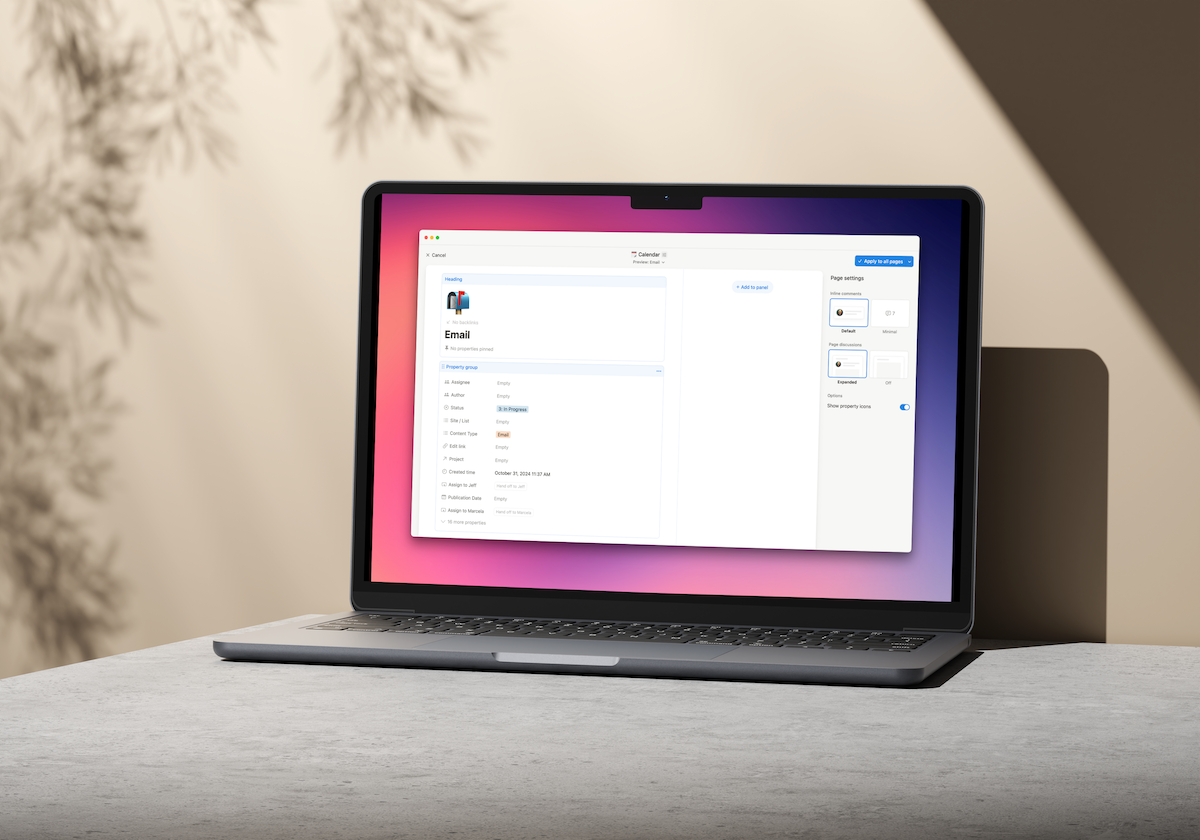

There are gradients to this phenomenon, of course. Farmer believes that Perplexity and Anthropic’s Claude are better at giving concise answers without all the pointless filler. And DeepSeek, the new kid on the block coming from China, keeps its answers much shorter and to the point. According to DeepSeek, the model’s answers are designed to be more direct and concise because its training prioritizes clarity and efficiency, influenced by data and reinforcement that favor brevity. American models emphasize conversational warmth or elaboration, it claims, reflecting cultural and design differences.

In my testing, I also found that Claude’s answers skewed shorter (though they can still be annoying). Claude, at least, recognized this when I was questioning it about this problem: “Looking at my previous response—yes, I probably did talk too much there!” It also surprised me with this gem when I said it seemed to be an honest LLM: “I try to be direct about what I know and don’t know, and to acknowledge my limitations clearly. While it might be tempting to make up citations or sound more authoritative than I am, I think it’s better to be straightforward.” Another illusion of cognitive activity, yes, but 100% on point.

Developers could solve this issue with better training and guidance. In fact, Farmer tells me that when creating Tolan, the development team discussed how long or short the answers should be. The writer who created the characters’ backstories leaned toward longer answers, because they would develop the user’s connection with the digital entity. Others wanted shorter, more to-the-point answers. It’s a debate that they still have internally, but they believe they struck the right balance.

You, ChatGPT, however, are not a cute alien. You are a tool. There’s no need for balance. I don’t need to bond with you. Just answer the damn question. And if you don’t know the answer, like when I asked which soccer players had won the most UEFA Champions League titles, just admit it and shut up instead of giving me 500 characters of wrong.

Brevity is the soul of wit. And clearly, neither you nor I are Polonius (but at least I have the excuse of being an old angry man screaming at clouds).
