An Interview with Google Cloud Platform CEO Thomas Kurian About Building an Enterprise Culture

An interview with Google Cloud Platform CEO Thomas Kurian about Google's infrastructure advantage and building an enterprise service culture.

Good morning,

This week’s Stratechery Interview is with Google Cloud CEO Thomas Kurian. Kurian joined Google to lead the company’s cloud division in 2018; prior to that he was President of Product Development at Oracle, where he worked for 22 years. I previously spoke to Kurian in March 2021 and April 2024.

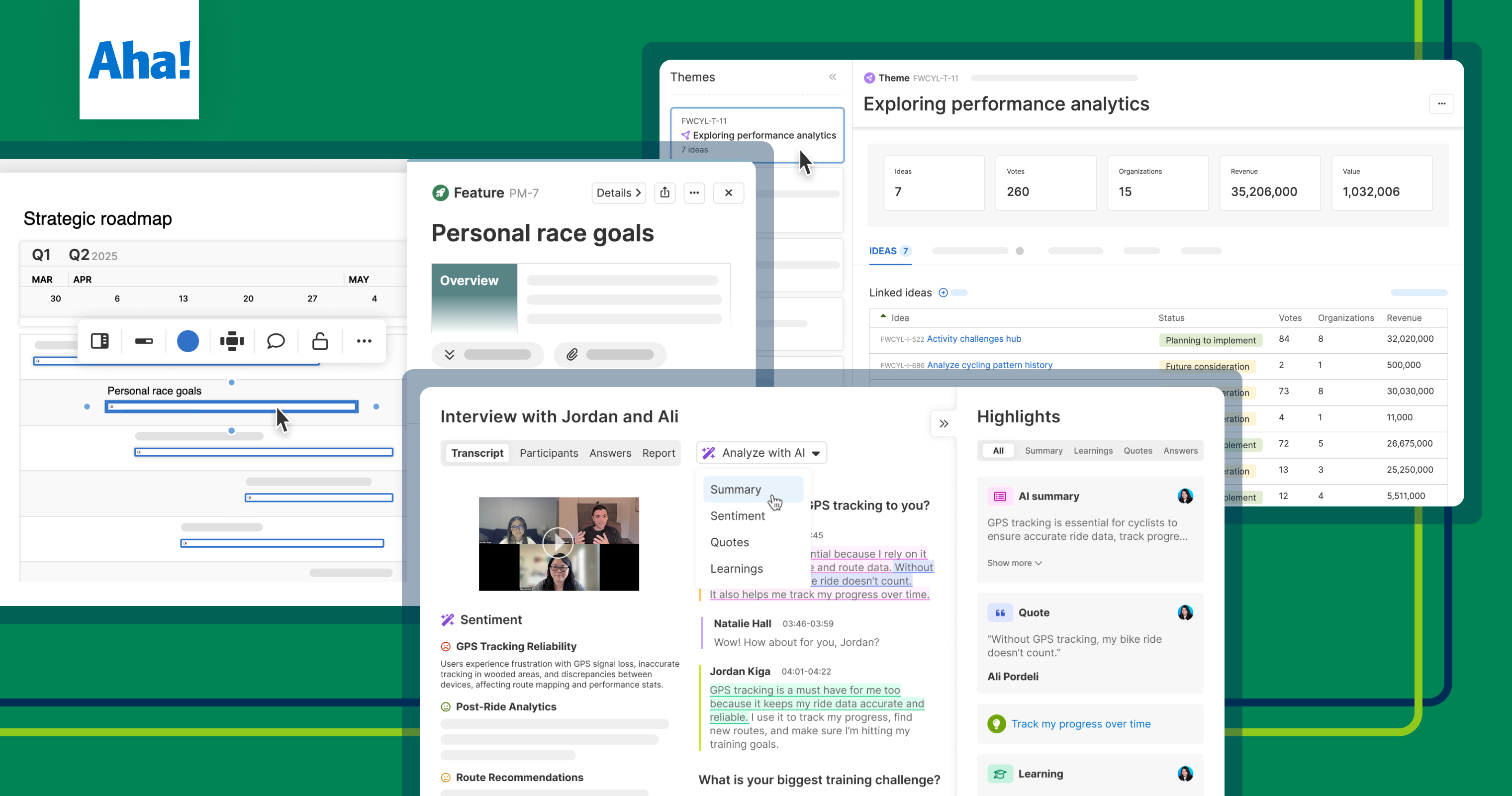

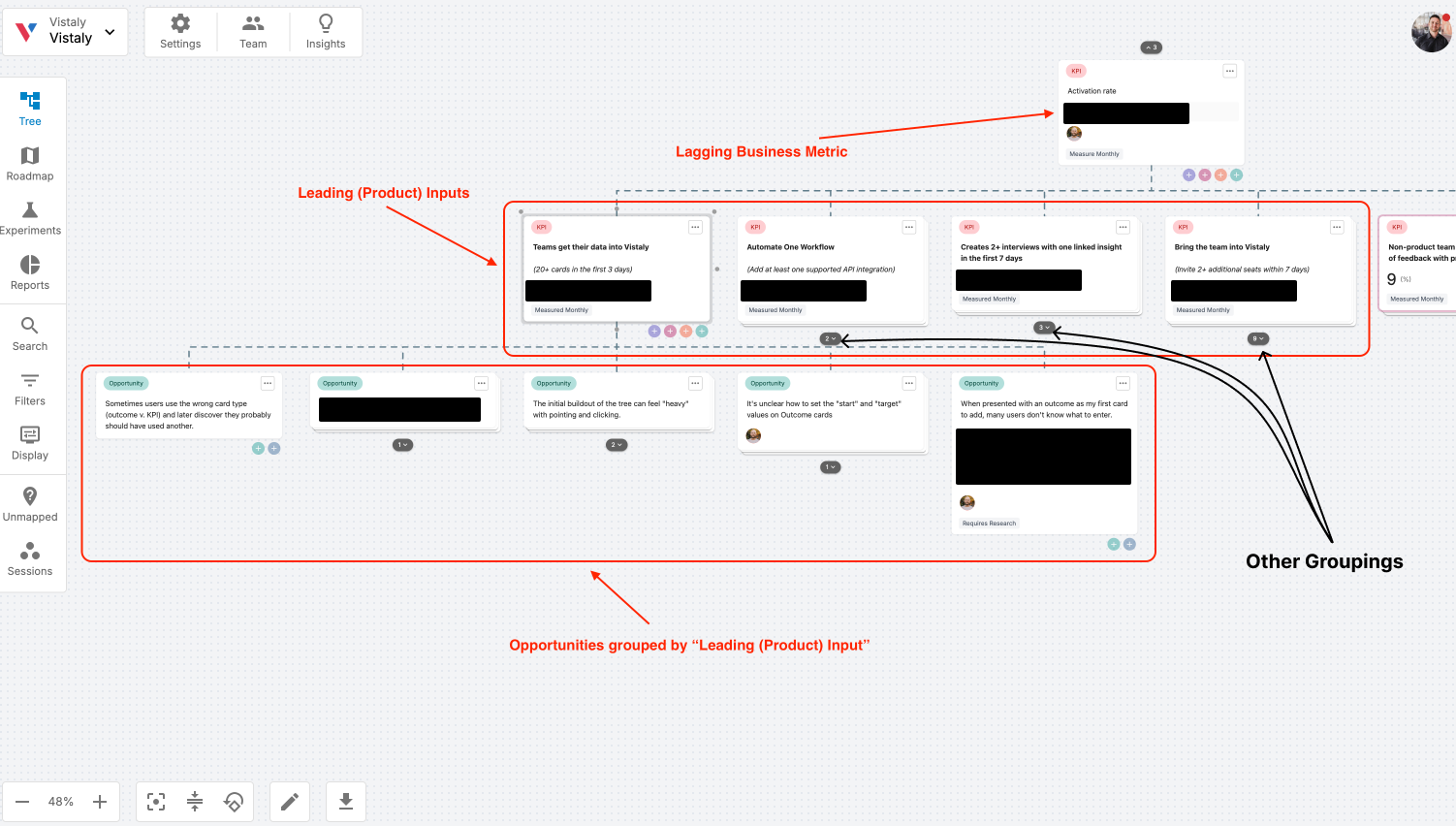

The occasion for this Interview was Kurian’s Google Cloud Next conference keynote. While this interview was conducted before the keynote, I did have a preview of the announcements: as I expected, the overarching frame was Google infrastructure (although Google CEO Sundar Pichai did the actual announcements at the top). I think, as I wrote last year, this is a legitimate advantage, and a reason to believe in Google Cloud in particular: it’s the most compelling way to bring Google’s AI innovations to market given it is complementary — not disruptive — to the company’s core consumer business. Accomplishing this, however, requires an enterprise service culture; what has impressed me about Kurian’s tenure is the progress he has made in building exactly this, and I see the recent acquisition of Wiz as evidence that he has the wind in his sails in terms of corporate support.

We get into these questions, along with a general overview of the announcements, in this interview. Unfortunately the interview is shorter than expected; in an extremely embarrassing turn of events, I neglected to record the first 15 minutes of our conversation, and had to start the interview over. My apologies to Kurian and to you, and my gratitude for his graciousness and unflappability.

As a reminder, all Stratechery content, including interviews, is available as a podcast; click the link at the top of this email to add Stratechery to your podcast player.

On to the Interview:

This interview is lightly edited for clarity.

Infrastructure

Thomas Kurian, welcome back to Stratechery, not just for the third time in a few years, but the second time today.

Thomas Kurian: Thanks for having me, Ben.

I have not forgotten to hit record in a very long time, but I forgot to hit record today; what a disaster! It’s particularly unfortunate because you’re giving me time ahead of your Google Cloud Next keynote, which I appreciate. This is going to post afterwards. I’m also a little sad, because I like to see the first five minutes of a keynote. What’s the framing? There’s going to be a list of announcements, I get that, but what’s the frame the executive puts around that series of announcements? So I’m going to ask you for a sneak preview: what’s your frame for the announcements?

TK: The frame of the announcement is that we’re bringing three important collections of things to help companies adopt AI in the core of their company. The first part is that in order to do AI well, you have to have world-class infrastructure, both for training models but increasingly for inferencing, and we are delivering a variety of new products to help people inference efficiently, reliably, and performantly. Those include a new TPU called Trillium v7, new Nvidia GPUs, and super fast storage systems. And because people want to connect the different parts of the world they’re using for inferencing over our network backbone, we have introduced a new product called Cloud Wide Area Network [WAN], so they can run their distributed network over our infrastructure.

On top of that infrastructure, we are delivering a number of world-leading models. There’s Gemini 2.5 Pro, which is the world-leading generative AI model right now on many, many different dimensions. There’s a whole suite of media models: Imagen 3, Veo 2 for video, Lyria, and Chirp. All of these can be used in a variety of different ways, and we’re super excited because we’ve got some great customer stories as well. Along with those models, we’re also introducing new models from partners. Llama 4, for example, is available, and we’ve added new capabilities in our development tool for text to help people do a variety of things with these models.

Third, people have always told us they want to build agents to automate multiple steps of a process flow within their organization, and so we’re introducing three new things for agents. One is an Agent Development Kit, an open source development kit supported by Google and 60+ partners that lets you define an agent, connect it to different tools, and have it interact with other agents. Second, we are providing a single place for employees in a company to search for information across different enterprise systems, have a conversational chat with these systems to summarize and refine that information, and use agents, both from Google and from third parties, to automate tasks. This new product is called Agentspace, and it’s our fastest-growing enterprise product. And lastly, we’re building a suite of agents on this platform: agents for data science, for data analytics, for what we call Deep Research, for coding, for cyber security, for customer service, and for customer engagement. So there are a lot of new agents that we’re delivering.

Finally, for us, an event like Cloud Next is always like the end of the year: you think about having worked hard to introduce 3,000+ new capabilities in our portfolio. But the event is still about what customers are doing with it, and we are super proud that we have 500+ customers talking about all the things they’re doing with it and the value they’re getting from it. So it’s a big, exciting event for us.

You did a great job, given I made you summarize it twice. Thank you, I appreciate it; I’m still blushing over here.

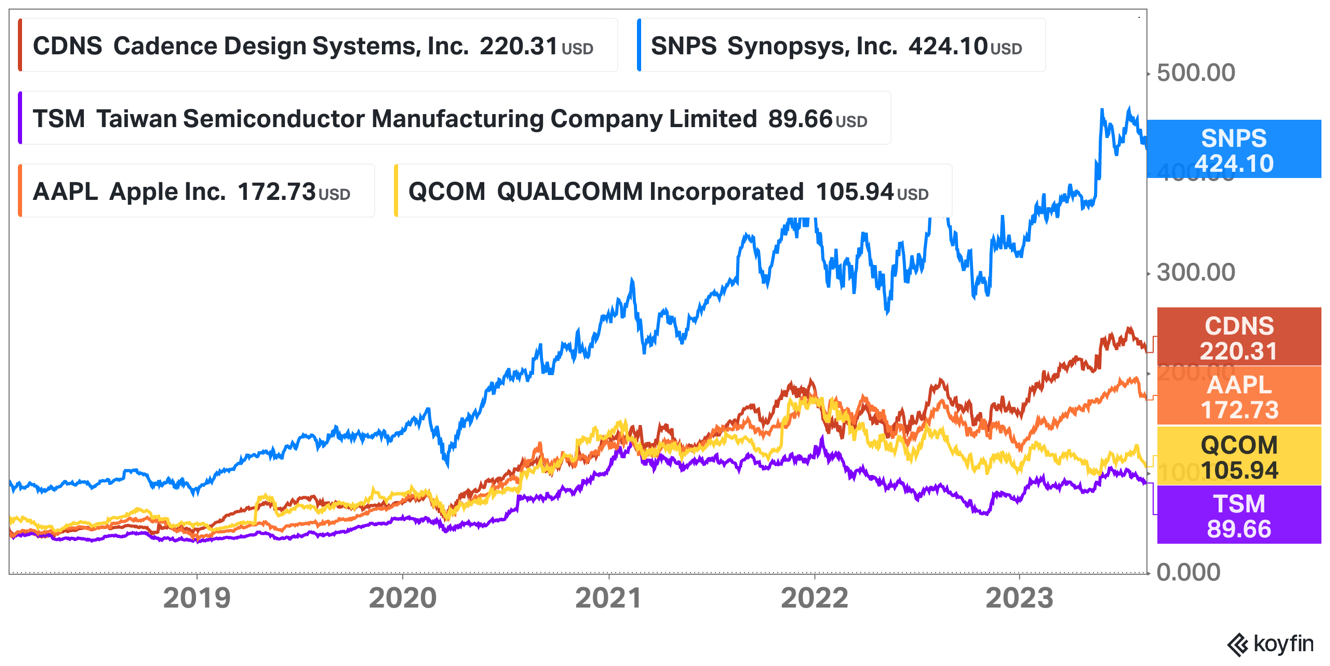

What jumps out to me — and you now know this is coming — is that you led with infrastructure; that was your first point. I just want to zoom out: it’s becoming accepted wisdom that models are going to be a commodity, I think particularly after DeepSeek. But if you go back to Google, a recent emailer made this point to me: “People used to say search was going to be a commodity, which is why no one wanted to invest in it, and it turned out it was not a commodity”. But was it not a commodity because Google was so much better, or at what point did it matter that Google’s infrastructure also became so much better, and that was really the point of integration that mattered? Are you saying this is Google Search all over again? It’s not just that you have the upfront model, it’s that you need all the stuff to back it up, and “We’re the only ones that can deliver it”?

TK: I think it’s a combination, Ben. First of all, for us, there’s the infrastructure. Take an example: people want to do inference. Inference has a variety of important characteristics. It is part of the cost of goods sold of the product that you’re building on top of the model, so being efficient, meaning cost-efficient, is super important.

Has that been hard for people to think about? We’ve gotten to the point where people treat compute as free, but at scale it gets very large. Are people getting really granular on a per-job basis to cost these out?

TK: Yes, because with traditional CPUs it was quite easy to predict how much time a program would take, because you could model the single-thread performance of a processor with an application. With AI, you are asking the model to process a collection of tokens and give you back an answer, and you don’t know how long that token processing may take, so people are really focused on optimizing that.

I also think we have advantages in performance, latency, and reliability. Take reliability: if you’re running a model, and particularly if you’re running thinking models that have think time, you can’t have the model just crash periodically, because the uptime of the application you’ve delivered on top of it will be problematic. So on all of these factors, we co-optimize the model with the infrastructure. And when I say co-optimize the model with the infrastructure, I mean the infrastructure gives you ultra-low latency, super scalability, and the ability to do distributed or disaggregated serving in a way that manages state efficiently.

So there are many, many things the infrastructure gives you that you can then optimize with the capabilities of the model. Take a practical example: we have customers in financial services using our customer engagement suite, which is used for customer service, sales, all of these functions. One computation they have to do is determine your identity and determine whether you’re doing fraudulent activity. So a key question is how smart a model is at understanding a set of questions that it asks you, summarizing the answers for itself, and then comparing those answers to determine whether you’re fraudulent or not. However, it also has to reason fast, because it’s in the transaction flow and can’t take infinite time. The faster the model can reason, the broader the surface area the algorithm can look at to determine if you’re actually doing fraud, so it can process more data and be more accurate. So these are examples of things, models plus the infrastructure, that people want from us.

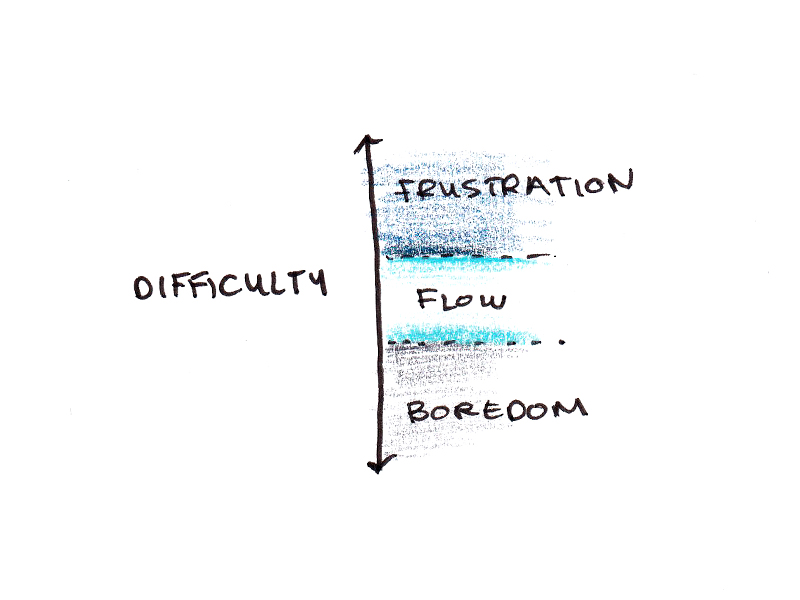

One thing that does strike me about this framing, which is basically saying, “Look, Google has this incredible infrastructure, we’re opening it up”, is the Cloud WAN thing that you mentioned. You go back historically, and Google buying up all the dark fiber was the foundation of this; this stuff’s going to be connected really, really rapidly. However, there is a critique of Google. I was talking to the CEO of Tailscale, a former Google employee, a few weeks ago, and his point was, “Look, it is so hard to start stuff at Google because it’s all built for massive scale”, and I guess that’s the point of the name of his company, which is that they’re building for the long tail. Does that mean, though, for Google Cloud (and I think this is a very compelling offering, I wrote about this last year too, this makes a lot of sense for Google), that your customer base is going to entail going big-game hunting, because you are creating an offering that is really compelling to very large organizations that have the capabilities to implement it and see the value of it, and so that’s going to be your primary target?

TK: Here’s the thing, I think the statistics speak for themselves. We’ve said publicly that well north of 60% of all AI startups are running on Google Cloud, 90% of AI unicorns are running on Google Cloud and use our AI tools, and a huge number of small businesses run on our cloud. Millions of small businesses run on our cloud and do their business on our cloud. All that is because we’ve made it easier and easier for people to use it, and so a big part of our focus has been, “How do we simplify the platform so that people can access it and build their business?” Some of them can start small and stay small, and some of them can start small and grow; it’s largely in their hands what aspirations they have. We have people from all over the world who have been building on our stuff, starting from a garage with a credit card, and it’s always been the case that we are focused not just on what they call the head, but on the torso and tail as well.

But do you think that some of these pitches you have are particularly attractive to large organizations, just because they’ve run into those needs and they see, “Yeah, actually the Cloud WAN really matters, the three levels of high-speed disk access that you’re offering are meaningful, we understand those numbers”?

TK: Some of those are definitely more optimized for large enterprises, but here’s a practical example. If you’re doing inferencing, smaller companies don’t want to build their own model, they just want to use a model, and look at the amount of capability we’ve added. Just as a very small example, Chirp is our speech model. It used to take hundreds of thousands of words to make Chirp speak like you; now it’s a fraction of that, so a small business, say a restaurant, can say, “Hey, I want to build a greeting so that when you call my phone number, it says, ‘Welcome, it’s XYZ restaurant’”.

Now you can tune it just by speaking with it, so there’s a lot of simplification of the technology that happens to make its reach much broader, and then we integrate it into different products. For example, our customer engagement suite allows you to train a model to handle customer questions by teaching it with the same language that you would use to teach a human agent. You can give it instructions in English, and that teaches the model how to behave, just like a human. So there are many, many things we’re doing, not just to add the sophistication for large enterprises, the performance and speed, but also to simplify the abstraction so that someone smaller can use it without worrying about the complexity.

GCP’s Culture Change

One of the tensions I’m really interested in is between the importance of model accuracy and, as you’ve talked about, the importance of access: which data do you have access to, which do you not have access to, all these sorts of things. It seems to me this is a problem that’s more easily solved if you control everything; if everything’s in Google, you can deliver on this promise more effectively. But Google Cloud is very much presenting itself as embracing multi-cloud: we understand you have data and things in different places, and part of this networking offering is, “We’re going to help you connect that in an effective way”. Is there a tension there? Can you deliver on those promises when you’re trying to pull data from other cloud providers or other service providers at the PaaS level or whatever it might be?

TK: Two points in response to that. When we first said multi-cloud in 2019, if you went back to that time, 90% of enterprises were single-cloud, and it was not Google. Today, I think the public numbers show over 85% of enterprises are using at least two if not three clouds, and our own growth reflects it.

Just one example of something we said early on, Ben, was that people will want to analyze data across all the clouds in which they have data without copying all of it. Today we have a product called BigQuery. BigQuery is four times larger than the number two data cloud and seven times larger than numbers three, four, and five, and 90%-plus of BigQuery users are moving data to it from AWS, Azure, and other clouds. So that was proven.

Now if you look at AI, the heart of AI in a company is, “Can you connect AI to my core systems?” If you’re building an employee benefits system like Home Depot was, you’d want to connect it to your HR system. If you want to build a customer service application, you need to connect to a Salesforce or a ServiceNow. So we built connectors to Microsoft Office, Adobe Acrobat, OneDrive, SharePoint, Jira, Salesforce, and Workday; there are 600 of these that we’ve delivered already, and another 200 under development, that will allow the model to understand the data model of, for example, a CRM system. It understands, “What’s an account?”, “What’s an opportunity?”, “What’s a product?”, and so we teach the model how to understand these different data elements and also, when it accesses them, how to maintain my permissions, so I only get to see what I’m authorized to see.

What’s interesting about what you’re pitching here, with these connectors and being able to pull all this in effectively, is that my longstanding critique of Google Workspace, or back when it was Google Docs or the various names over the years, is that I feel Google really missed the opportunity to bring together the best SaaS apps of Silicon Valley. Google could have been the one to unify them and have an effective response to the Microsoft suite, where every individual product may be mediocre, but at least they work together. This connector strategy feels like a very direct response: “We’re not going to screw that up again, we’re going to try to link everything together”. So, number one, is that correct? And number two, has this been an internal culture change you’ve had to help institute, where “Yes, we are used to doing everything ourselves, but we have to develop this capability to partner effectively”?

TK: I think the reality is, if you look at our platform, AI is going to be a platform game; whoever has the best platform is going to do well. To be a platform for companies, it has to coexist with the heterogeneity of a company. You cannot go into a company and say we want everything you’ve got.

So we’ve done three things. One, we built a product called Agentspace. It allows users to do the three things they really want: search and find information, chat with a conversational AI system that helps them summarize, find, and research that information, and then use agents to do tasks and automate processes. Number two, that product can interoperate, because we’ve introduced an open Agent Development Kit, with support from 60-plus vendors for an Agent2Agent [A2A] protocol, so our agents can talk to other agents whether they were built by us or not. For example, our agents can talk to a Salesforce agent, a Workday agent, a ServiceNow agent, or any other agent somebody happened to build. Third, by simplifying this, we can make it easy for a company to have a single point of control for all the AI within their organization, and so we are bringing more of this capability to help people introduce AI into their company without losing control.

But was this hard? I get the offering; I’m just curious about internally. As you know, I think you’ve done an amazing job; the way Google Cloud has developed has been impressive, and I really am curious about this culture point. To give an example, one of the reasons I am bullish about GCP in general is that it feels like the cleanest way for Google to expose its tremendous capabilities in a way that’s fundamentally not disruptive to its core business. There are lots of questions and challenges in the consumer space, but the reality is Google has amazing infrastructure, it has amazing models that are co-developed, as you said, and this is a very clean way to expose them.

The problem is that the enterprise game is partnerships, it’s pragmatism, it’s, “Okay, you really should do it this way, but we’ll accommodate doing it that way”. To me, one of the most interesting things I want to know from the outside is, has it been an internal struggle for you to help people understand that we can win here, we just have to be more pragmatic?

TK: It’s taken a few years, but every step on the journey has helped people understand it. I’ll give you an example. Early on, when we said, “We should make BigQuery analyze data no matter where it sits”, and we called that federated query access, people were like, “Are you serious? We should ask people to copy the data over!” The success of it, and the fact that we were able to see how many customers were adopting it, helped the engineers see that it was great.

The next step: we introduced our Kubernetes stack and, most importantly, our database offering, something called AlloyDB, and we said, “Hey, customers love AlloyDB, it’s a super fast relational database, but they want to be able to run a single database across all their environments: VMware on-premise, Google Cloud, and other places”. That drove a lot of adoption, so that got our engineers interested.

So when we came into AI, we said, look, people want a platform, and the platform has three characteristics. Number one, it needs to support models from multiple places, not just Google; today we have over 200 models. Number two, it needs to interoperate with enterprise systems. And number three, when you build an Agent Kit, for example, you need to have the ecosystem support it, and we have work going on actively with companies to do that.

We’ve been through this over the last three or four years, Ben, I would say, and given the success it has driven, with customers telling our guys, “Hey, that was the best thing you guys did to make this work”, I think it’s been easier now for the organization to understand this.

Wiz and Nvidia

Yeah, that makes a lot of sense to me. My sense is that you now have people doing the work to take this tremendous internal technology and actually make it useful to people on the outside; that’s step number one. I think it’s interesting to also put this in the context of the recent Wiz acquisition: Wiz, on the face of it, is a multi-cloud solution, and people ask, “Why does Google buy instead of build?” My interpretation is that you’ve done a good job getting Google to make its internal systems federatable, I don’t know if that’s a word, broadly accessible. They’re not necessarily going to build a multi-cloud product, but you’ve gained so much credibility internally that you can go out and buy the extra pieces that do fit into that framework. Is that a good interpretation?

TK: It’s a good interpretation.

I’ll give you a very simple picture of what we have been trying to do with cyber. What people really want from cyber is a software platform that combines three important things. One, can you collect all the threats that are happening around the world, and can you prioritize them so I can see which threats are the most active and which ones may affect me? That’s basically the rationale behind what we’ve done with Mandiant; they bring the best threat team in the world. We’ve taken their findings and put them, along with Google’s own intelligence, in a product called Google Threat Intelligence.

The second step: I want to understand whether any of my enterprise systems, whether they’re in Google Cloud, in other clouds, or on-premise, have been compromised, and if they were, I want to do analysis to see how the compromise occurred, then remediate it and test that I’ve remediated it. That’s a product we built called Google Security Operations; it has been under development, and it supports multi-cloud.

And the third thing came along when we were looking at it: we said it would also be really valuable if you could look at the configuration of your cloud, the configuration of your users accessing the cloud, what their permissions were, and the software supply chain that was pushing changes to your cloud and to the applications running on your cloud, so you could understand how your environment got into that state in the first place. That’s where we felt Wiz was the leader, and that’s why we’ve made an offer to acquire them. All along, it’s been this belief that enterprises want a single point to control the security of all of their environments. They want to be able to analyze across all these environments and protect themselves, and we have publicly said that, just as Google Security Operations and Mandiant support other clouds and even on-premise environments, we will do the same with Wiz.

You did announce a new TPU architecture, but you still take care to highlight your Nvidia offerings. I also noted that on the last earnings call they said Google Cloud’s growth was limited by supply, not demand, and if you look at the numbers, I agree with that; I think that was clearly the case. Was this running out of Nvidia GPUs specifically? What’s the balance? Of course you’re leading with TPUs, but is that really just an internal Google thing, or are you having success in getting external customers to use them?

TK: We have a lot of customers using TPUs and Nvidia GPUs, and some of them use a mixture: for example, some of them train on one and inference on the other, and others train on GPUs and inference on TPUs, so we have both combinations going on. We have a lot of demand coming in. If you look at the quarter prior to last quarter, we grew very, very fast, 35%, and so we’re managing supply chain operations, environments, data centers, all of these things.

If you look at your costs, it was totally validated in this case, it was very clear you just didn’t have enough capacity.

TK: That’s right. And so we are working on addressing it and we expect to have it resolved shortly.

Is this a bifurcation? I can’t remember if this was in the non-recorded part or the recorded part, but I was asking you about how, if you have Google infrastructure, big iron, one of the critiques is that it’s hard to get started on it, to just spin something up and iterate, and it feels like leading with infrastructure is saying, “We’re going big-game hunting”; this is going to make more sense to enterprises. Multi-cloud makes more sense to large enterprises, but you also have your pitch, “Look, lots of AI startups are on Google”. Is there a split point here where, the larger you are, the more you lean into these innate Google advantages because you can calculate them out, and if you’re smaller, it’s “Look, I just need Nvidia GPUs, I can hire a CUDA engineer”? Is that a bifurcation?

TK: It isn’t necessarily exactly like that. There are some people who started with PyTorch and CUDA and others who started with JAX, and that community tends to be largely the place where people start from. At the same time, there are also details like, “Are you building a dense model?”, “Are you building a mixture of experts?”, “Are you building what they call a small, sparse model?”, and depending on the characteristics, people underestimate.

Ben, although we call it TPU and GPU, we have, for example, 13 different flavors of GPU, so it’s not just one; there’s a range of these things that people want. If you follow me, we’ve also done a lot of work with [Nvidia CEO] Jensen [Huang] and team to take JAX and make it super efficient on GPUs. We’ve taken some of the technology that was built at Google called Pathways, which is great for inference, and made it available on our cloud for everybody. So there’s a lot we’re doing to bring an overall portfolio across TPU and GPU, and we let customers choose what might be the best one. Even with TPU, for example, we have Trillium v6 and v7. They’re optimized for different things, because some people want something just for lightweight inference and some people want a much denser model. There are a lot of configuration elements to choose from, and our goal has always been to increase the breadth so that people can choose exactly what makes sense for them.

That’s the enterprise pragmatism that I expect. But is there a reality that, “Look, there actually is long-term — to circle back to the beginning — there is long-term differentiation here, models are not a commodity, you actually want a full stack, you should be using Gemini on TPUs, it’s going to actually be better in the long run” — is that your ultimate pitch? We’ll accommodate you, but you’re going to end up here because it’s better.

TK: For a set of tasks, definitely Gemini is the best in the world, our numbers show it. Is it truly the best for everything a person wants to do? Remember, there are many, many places, Ben, that are working with models that aren’t even generative models. I mean, we have people building scientific models, we have Ginkgo Bioworks for example, building a biological model. There’s people building — Ford Motor Company is doing their wind tunnel testing using an AI model to simulate wind rather than put the cars inside a physical wind tunnel exclusively. So there are many, many things like that that people are doing with it, we are obviously investing in the infrastructure to support everybody.

At the same time, we’re also focused on making sure our models, in the places where we deliver models, are world-class. One example is Intuit. Intuit has a great partnership with us; they use a variety of our models, but they are also using open source models. The reason they’re using open source models is that they’re in taxation: they build tax applications, TurboTax, a variety of other things, and in some of those they have tuned their own models on open source. We see the value of that, because they’ve got their own unique data assets and they can tune a model for just what they need.

Media Models and Advertising

With all the media models, is there a really important market for you? Is that tied to advertising? You need to generate a lot of media for ads, and Google is an advertising business; is there a direct connection there?

TK: There are three or four different industries that are using media models. There are people, for example Kraft Heinz and Agoda, who fall into the area you’re talking about with advertising, and there’s a whole range of them doing it, WPP, etc., so that’s advertising. There are many things you’d want in a model that you’re optimizing for advertising, like control of the brand style and control of the layout. If I’m Coca-Cola, “On the can, make it look like Coca-Cola script so I can superimpose my stuff on it”, etc. That’s one group.

Second group is media companies that are building frankly movies and things of that nature. I mentioned one example, the Sphere and what we’re doing with them on bringing The Wizard of Oz to life. That’s a really cool, exciting project — so that’s the second.

Third is creators, and creators are people who want to use the model to build their own content, and there are many, many examples. There’s people who want to build their own content, there’s people who want to dub it in all the languages, there’s people who want to create a composition and then add background music to it, there’s an infinite number of variants of these.

And then lastly, there are companies who are using some of these for training materials, teaching, and a variety of internal scenarios. So it’s all four of these that we’re focused on with these models. Obviously, if you can solve, I mean, just to give you an example with the Sphere and The Wizard of Oz: have you ever been to the Sphere, Ben?

I’ve only been to the outside, I’ve not been inside.

TK: Inside is totally mind blowing.

Yeah, I know. I’m regretting it, I can’t believe I haven’t been there, I feel ashamed answering your question.

TK: It’s the most high-tech, super-resolution camera in the world now. What we did with AI, working with them, and we’re not yet done, we’re in the middle of making the movie, is to bring The Wizard of Oz to life. And when I say bring it to life, you feel like you are on set and you can experience it with all the senses. You can feel it, you can touch it, you can hear it, you can see it, and it’s not like watching a 2D movie. Now, to get a model, a collection of models, to do what we did with them, you really have to have state-of-the-art models. So if it’s good enough for them, it’s definitely good enough for somebody who wants to build a short video explaining how to repair their product.

Do you feel the wind at your back? Internally, everyone is now on board: “GCP is the horse we’re riding, we understand we need to be multi-cloud, we need to serve all these different folks”, and now you feel empowered to go out. Is the ground laid?

TK: People are excited about the growth we have. I’ve always said from the very start: we are focused on strategy and we need to execute, and we prove our results in the game; we leave our results on the floor, if you will. Our numbers have shown that we are very competitive and very capable, and it’s a credit to the team we have, which is resilient, has been through many, many challenges, and has gotten us here. I think that’s success.

For example, with the way we work with [DeepMind CEO] Demis [Hassabis] and our DeepMind team, the time from a model being ready to our customers having it is six hours, so they’re getting the latest models from us. It’s super exciting for them to be able to test it, use it, and give feedback on it. Three or four weeks ago we introduced a free version of our Gemini coding tool, and literally in three days it went from zero to a hundred thousand users. The feedback that gives the Gemini team, meaning Demis’ team, has helped improve the model in so many different ways, and so we are very grateful for all the teams at Google that help us, particularly DeepMind.

I think those teams at Google are grateful for a distribution channel where they don’t need to depend on social media saying, “Gemini 2.5 is great”; you can call up a company executive and say, “You’ve got to try this, it’s pretty good”.

TK: And we are definitely seeing that it’s the combination of the reach we have through our consumer properties and the strength we have in enterprise that gives us resilience as a company.

Well, we went long in real time, but short on the podcast, my big mistake. It was great to catch up, and I look forward to seeing your first five minutes.

TK: Thanks so much, Ben.

This Daily Update Interview is also available as a podcast. To receive it in your podcast player, visit Stratechery.

The Daily Update is intended for a single recipient, but occasional forwarding is totally fine! If you would like to order multiple subscriptions for your team with a group discount (minimum 5), please contact me directly.

Thanks for being a supporter, and have a great day!
