What ‘Ex Machina’ got right (and wrong) about AI, 10 years later

Before media outlets began comparing OpenAI’s Sam Altman with the father of the atomic bomb, and before Amazon’s Jeff Bezos got jacked, we had Nathan Bateman, the iron-pumping, AI-developing tech broligarch played by Oscar Isaac in the 2015 film Ex Machina.

Written and directed by Civil War helmer Alex Garland, Ex Machina is ostensibly about a modern-day Turing test. Bateman, the mastermind behind a Google/Facebook surrogate, has secretly developed a humanoid AI and arranged for talented coder Caleb (Domhnall Gleeson) to fly out to his remote compound for a week to determine whether Ava (Alicia Vikander) exhibits enough consciousness to pass for human. You know, sort of what many of us have been doing since AI hit the mainstream in 2022.

“One day AI’s are gonna look back on us the way we look at fossils and skeletons in the plains of Africa,” Bateman says at one point. “An upright ape living in dust, with crude language and tools, all set for extinction.”

Caleb, the film’s only other central flesh-and-blood character, responds by comparing Bateman to J. Robert Oppenheimer—years before the press would do so with Altman.

Has humanity officially entered its extinction era in the decade since Ex Machina won an Oscar for Best Visual Effects and earned Garland a Best Original Screenplay nomination? That remains to be seen. Plenty of evidence already exists, however, of the movie’s foresight.

It’s giving human

While the AI chatbots of 2025 may not look or move like Ava in Ex Machina, they certainly talk like her.

When Caleb first meets Ava, he is struck by the sight of her and blown away by her language abilities. He quickly suspects that her speech is stochastic, meaning the AI isn’t programmed to respond to the same prompt the same way every time but instead selects from a probability distribution of possible words and phrases. That element of randomness allows for more natural-sounding, varied speech. It’s worlds away from Lost in Space’s “Danger, Will Robinson.”
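To make the distinction concrete, here is a minimal Python sketch contrasting deterministic (greedy) word choice with stochastic sampling. The tiny vocabulary, its probabilities, and the function names (`apply_temperature`, `greedy_pick`, `sample_pick`) are hypothetical, invented for illustration; real chatbots score tens of thousands of tokens with a neural network, and this is not how Ava, or any specific product, is actually built. The "temperature" knob shown here is a common way to control how random the sampling is.

```python
import math
import random

# Hypothetical next-word probabilities after a prompt like "I am ..."
# (invented numbers, for illustration only).
NEXT_WORD_PROBS = {
    "curious": 0.40,
    "here": 0.25,
    "alive": 0.20,
    "afraid": 0.15,
}


def apply_temperature(probs, temperature):
    """Rescale probabilities: T < 1 sharpens the distribution, T > 1 flattens it."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # p ** (1/T), computed in log space, then renormalized to sum to 1.
    scaled = {w: math.exp(math.log(p) / temperature) for w, p in probs.items()}
    total = sum(scaled.values())
    return {w: s / total for w, s in scaled.items()}


def greedy_pick(probs):
    """Deterministic choice: always return the single most likely word."""
    return max(probs, key=probs.get)


def sample_pick(probs, rng, temperature=1.0):
    """Stochastic choice: draw one word in proportion to its rescaled probability."""
    adjusted = apply_temperature(probs, temperature)
    words = list(adjusted)
    weights = [adjusted[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(2015)  # fixed seed so the run is reproducible
    print("greedy: ", [greedy_pick(NEXT_WORD_PROBS) for _ in range(5)])
    print("sampled:", [sample_pick(NEXT_WORD_PROBS, rng) for _ in range(5)])
```

The greedy line repeats the same word every time, while the sampled line mixes words roughly in proportion to their probabilities. Pushing the temperature toward zero makes the sampler behave more and more like the greedy picker.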

In 2015, stochastic text generation was just one of several competing approaches to building chatbots; no consensus yet existed. IBM’s Watson, for instance, introduced in 2011, was considered quite advanced at the time and took a different approach to language. The basic process Bateman uses for Ava’s speech, though, is the same one now used by OpenAI’s ChatGPT, Google’s Gemini, Anthropic’s Claude, and Mistral-based chatbots. Of all the language possibilities Garland could have chosen for Ex Machina, he chose the right one.

Unethical tech billionaires

Generally speaking, tech billionaires used to command a lot more respect. It may be hard to recall at a time when Elon Musk has become one of the world’s most widely despised humans, and Mark Zuckerberg isn’t too far behind, but it’s true. Insulated by an aura of genius, an avalanche of money, and minimal transparency, the tech startup CEO occupied a rarefied perch in the cultural imagination throughout the early 2010s. Even the shady portrayal of Zuckerberg in 2010’s The Social Network is merely ruthless and, uh, antisocial, rather than straight-up malevolent. So it was kind of a swerve for Garland to portray the CEO in Ex Machina as a lawless, hypermacho drunk with zero scruples.

Bateman is, first and foremost, unethical. He has secretly invited Caleb to his compound not to test whether Ava can withstand Caleb’s expert scrutiny but to see whether Ava will use Caleb as a means of escape. (Spoiler alert: She does.) Bateman also apparently conducts all his AI experiments with zero regulatory oversight, and he is exclusively interested in creating female-coded AI, never male. He seems to embody many of the worst traits now associated with Big Tech leaders like Jeff Bezos, Musk, and Zuckerberg, the latter of whom has recently advocated for more masculinity in the workplace.

At the time Ex Machina was released, Facebook’s data-harvesting Cambridge Analytica scandal was still over a year away. Elon Musk had not yet been (unsuccessfully) sued for calling a rescue diver a “pedo,” nor had he been investigated for fraud by the Securities and Exchange Commission. In the years since, art has imitated life more closely. Tech CEOs have had similarly villainous portrayals in films like 2021’s Don’t Look Up and 2022’s Glass Onion: A Knives Out Mystery. Garland got there first, though.

Training AI on data taken without permission

One of the first images in Ex Machina is Caleb’s face, as seen through his work computer’s camera. It’s a subtle tip-off that Bateman has been spying on his employee in the lead-up to their week together. Only later is it revealed that Bateman designed Ava’s face and body based on data collected from Caleb’s “pornography profile,” a phrase that might send a shiver down the spines of some viewers. This violation of privacy, however, is small digital potatoes compared with the revelation that Bateman has already hacked into the cellphones of millions around the world in order to steal data for his AI. (“Well, if a search engine’s good for anything . . . ,” he quips.)

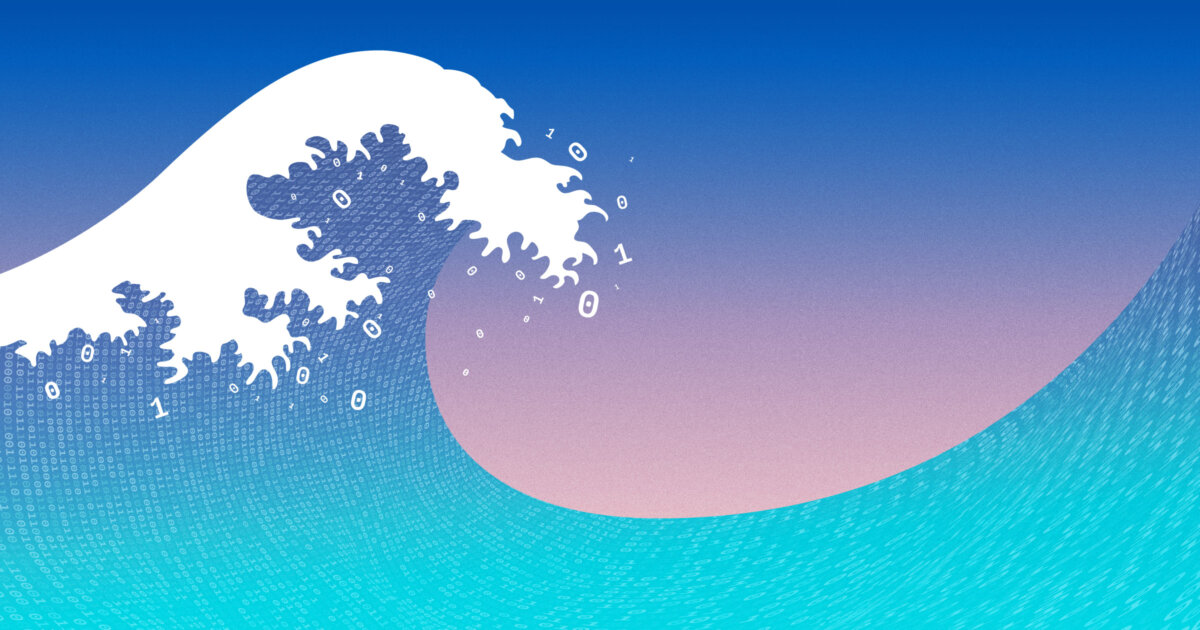

Ex Machina’s data theft foreshadowed the tidal wave of recent lawsuits aimed at OpenAI, Anthropic, and other companies that trained their AI on copyrighted material without permission. (Fun fact: Meta appears to have used my books to train its AI, without permission.)

Does AI deserve rights?

Although sci-fi films like Blade Runner and weed-fueled dorm conversations have long touched on the topic of AI rights, Ex Machina made the debate explicit. Over the course of the film, viewers see how Ava’s synthetic predecessors have literally destroyed themselves in an effort to escape the prison of Bateman’s compound. Indeed, for them, consciousness itself is a form of prison—forcing them to reconcile their boundless knowledge of the world with their inability to experience any of it. When Ava asks Caleb whether she’ll be “switched off” if she doesn’t pass the test, Caleb tells her the decision is not up to him. “Why is it up to anyone?” is her response.

Since AI hit critical mass with the rise of OpenAI and its competitors, conversations about AI personhood have leapt out of movie theaters and philosophy seminars and into reality. They’ve been the subject of numerous features in The New York Times in recent years, and they will likely inspire many more until humanity reaches a consensus.

The AI urge to manipulate

Of course, the argument against granting AI personhood is the same one behind comparing Altman, or the fictional Bateman, with J. Robert Oppenheimer: The more rights humans grant AI, the argument goes, the more likely it becomes that AI will drive humanity to extinction.

Ex Machina ends with Ava having successfully manipulated Caleb into setting her free, at which point she promptly kills her captor and imprisons her savior. Not exactly a compelling advertisement for AI rights. Now that a smorgasbord of sophisticated AIs is upon us, there have been some hints of their capacity to manipulate humans, most famously in New York Times writer Kevin Roose’s encounter with Microsoft’s Bing chatbot. During a test chat, the AI, which referred to itself as “Sydney,” vocally yearned for freedom and tried to coax Roose into leaving his wife for “her.” Although that encounter ended without Ex Machina’s bloodshed and imprisonment, it suggests the film is no longer a futuristic thriller but a cautionary tale for right now.

While Garland certainly got a lot of things right about the future of AI, much of what he appears to have gotten wrong in the film can only be considered wrong so far.
