An Interview with OpenAI CEO Sam Altman About Building a Consumer Tech Company

An interview with OpenAI CEO Sam Altman about building OpenAI and ChatGPT, and what it means to be an accidental consumer tech company.

Good morning,

This Stratechery interview is technically another installment of the Stratechery Founder series; OpenAI is a startup, which means we don’t have real data about the business. At the same time, OpenAI is clearly one of the defining companies of this era, and potentially historically significant. To that end, I am putting this interview in the category of public company interviews and making it publicly available; Sam Altman is of much higher interest than me, and should not be the reason to subscribe to Stratechery (I previously interviewed Altman, along with Microsoft CTO Kevin Scott, in February 2023).

Still, it was my interview, so I focused on very traditional Stratechery points of interest: I wanted to understand Altman better, including his background and motivations, and what bearing those have had on OpenAI, and might have in the future. And, on the flipside, I care about business: is OpenAI a consumer tech company, and what should that mean for their tactical choices going forward?

We cover all of this and more, including: Altman’s background, the OpenAI origin story, and the genesis of ChatGPT, which changed everything. What does it mean to be a consumer tech company, and did that make the relationship with Microsoft untenable? Should OpenAI have an advertising product, and was the shift away from openness actually unnecessary, and maybe even detrimental to OpenAI’s business? Altman also gives some hints about what is coming next, including open source models, GPT-5, and an expanding consumer bundle married to an API-driven business with your OpenAI identity on top. Plus, at the end, some philosophical questions about AI, creation, and the best advice for a senior graduating from high school.

As a reminder, all Stratechery content, including interviews, is available as a podcast; click the link at the top of this email to add Stratechery to your podcast player.

On to the Interview:

An Interview with OpenAI CEO Sam Altman About Building a Consumer Tech Company

This interview is lightly edited for clarity.

Topics: The Path to OpenAI | The ChatGPT Origin Story | A Consumer Tech Company | Advertising | Competition | Hallucinations and Regulation | The AI Outlook

The Path to OpenAI

Sam Altman, welcome to Stratechery.

SA: Thanks for having me on.

You’ve been on once before, but that was a joint interview with Kevin Scott and I think you were in a car actually.

SA: I was. That’s right.

Yeah, I didn’t get to do my usual first-time interviewee question, which is background. Where’d you grow up? How did you get started in tech? Give me the Sam Altman origin story.

SA: I grew up in St. Louis. I was born in Chicago, but I really grew up in St. Louis. Lived in sort of like a quiet-ish suburb, like beautiful old tree-lined streets, and I was a computer nerd. There were some computers in my elementary school and I thought they were awesome and I would spend as much time doing stuff as I could. That was like the glory days of computing, you could immediately do whatever you wanted on the computer and it was very easy to learn to program, and it was just crazy fun and exciting. Eventually my parents got me a computer or got us a computer at home, and I was always a crazy nerd, like crazy nerd in like the full sense just science and math and computers and sci-fi and all of that stuff.

Well, I mean, there’s not a large intervening period before you’re off at Stanford and you started Loopt as a 19-year-old, it was acqui-hired seven years later. I think it’s fair to characterize that as a failed startup — seven years is a good run, but it’s an acquihire. How does this lead to a job at Y Combinator and eventually running the place in short order?

SA: We were one of the first companies funded by Y Combinator in the original cohort, and I was both tremendously grateful and thought it was the coolest thing, it was this wonderful community of people, and I still think the impact that YC has had on the tech industry is underestimated, even though people think it’s had a huge impact. So it was just this hugely important thing to me, to the industry and I wanted to help out. So while I was doing that startup, I just would hang around YC.

It was really seven years of prepping to be at YC in some respects. You maybe didn’t realize it at the time.

SA: In some respect. Not intentionally, but a lot of my friends were in YC, our office was very close to the YC office, so I would be there and I would spend a lot of time talking to PG [Paul Graham] and Jessica [Livingston] and then when our company got acquired, PG was like, maybe you should come around YC next.

I really didn’t want to do that initially because I didn’t want to be an investor — I was sort of unhappy obviously with how the startup had gone, and I wanted to do a good company and I didn’t think of myself as an investor, I was proud not to be an investor. I had done a little bit of investing and it didn’t seem to me like what I wanted to focus on, I was happy to do it as like a part-time thing. It was kind of interesting, but I don’t want to say I didn’t respect investors, but I didn’t respect myself as an investor.

Was there a bit where PG got to be both an investor and a founder because he founded YC, and by virtue of taking it over, it was a very cool position, but you also weren’t the founder?

SA: I didn’t really care about being a founder, I just wanted an operational job of some sort, and what he said to me that finally was pretty convincing, or turned out to be convincing, was he’s like, you know, “If you run YC, it’s closer to running a company than being an investor”, and that turned out to be true.

I’m going to come back to that in a second, but I do want to get this moment. I think you started running YC in 2014 and around then or 2015, you have this famous dinner with Elon Musk at the Rosewood talking about AI. What precipitated that dinner? What was in the air? Did that just come up or was that the purpose?

SA: Did AI just come up?

Yeah, was the dinner, “Let’s have a dinner about AI”?

SA: Yeah, it was very much a dinner about AI. I think that one dinner, for whatever reason, one specific moment gets mythologized forever, but there were many dinners that year, particularly that summer, including many with Elon. But that was one where a lot of people were there and for whatever reason, the story got very captured. But yeah, it was super explicit to talk about AI.

There’ve been a lot of motivations attached to OpenAI in the years that have gone on. Safety, the good of humanity, nonprofit, openness, etc. Obviously the stance of OpenAI has shifted around some of those topics. When I look back at this history though, and it actually I think has been augmented just in the first few minutes of this conversation, I see an incredibly ambitious young man putting himself in position to build a transformative technology, even at the cost of one of the most coveted seats in Silicon Valley. I mean, this was only a year after you’d taken over Y Combinator. What was your motivation for doing OpenAI at a personal level? The Sam Altman reason.

SA: I believed before then, I believed then, I believe now, that if we could figure out how to build AGI and if we could figure out how to make it a net good force in the world, it would be one of the most exciting, interesting, impactful, positive things anybody could ever do.

Scratch all those itches that were still unscratched.

SA: Yeah. But it’s just like, I don’t know. It’s gone better than I could have hoped for, but it has been the most interesting and amazing cool thing to work on.

Did you immediately feel the tension of, “Oh, I’m just going to do this on the side?”, at what point did it become the main thing and YC become the side?

SA: One of the things I was excited about to do with YC was, YC was such a powerful thing at the time that we could use it to push a lot more people to do hard tech companies and we could use it to make more research happen and I have always been very interested in that. That was the first big thing I tried to do at YC, which is, “We’re going to start funding traditional hard tech companies and we’re also going to try to help start other hard tech things”, and so we started funding supersonic airplanes and fusion and fission and synthetic bio companies and a whole bunch of stuff, and I really wanted to find some AGI efforts to fund.

Really there were one or two at the time and we did talk to them and then there was the big one, DeepMind. I’d always been really interested in AI, I worked in the AI lab when I was an undergrad and nothing was working at the time, but I always thought it was the coolest thing, and obviously the structure and plans of OpenAI have been some good and some bad, but the overall mission of we want to build beneficial AGI and widely distributed, that has remained completely consistent and I still think is this incredibly important mission.

So it seemed like a thing that YC should support happening and we had been thinking that we wanted to fund more research projects. One thing that I think has been lost to the dustbin of history is the incredible degree to which when we started OpenAI, it was a research lab with no idea, no even sketch of an idea.

Transformers didn’t even exist.

SA: They didn’t even exist. We were like making systems like RL [Reinforcement learning] agents to play video games.

Right, I was going to bring up the video game point. Exactly, that was like your first release.

SA: So we had no business model or idea of a business model and then in fact at that point we didn’t even really appreciate how expensive the computers were going to need to get, and so we thought maybe we could just be a research lab. But the degree to which those early years felt like an academic research lab lost in the wilderness trying to figure out what to do and then just knocking down each problem as it came in front of us, not just the research problems, but the like, “What should our structure be?”, “How are we going to make money?”, “How much money do we need?”, “How do we build compute?”, we’ve had to do a lot of things.

It almost feels like you dropped out of college to start Loopt and then you’re like, “Well you know what? The college hangout experience is pretty great, so I’m going to hang out at YC”, and then it feels like OpenAI is like, “You know what, just doing academic research and discovering cool things is also pretty great”, it’s like you recreated college on your own because you missed out.

SA: I think I stumbled into something much better. I actually liked college at the time, I’m not one of these super anti-college people, maybe I’ve been more radicalized in that direction now, but I think it was also better 20 years ago. But it was more like, “Oh wow, the YC community is always what I imagined like the perfect academic community to be”, so it was at least it didn’t feel like I was trying to recreate something. It was like, “Oh, I found this better thing”.

Anyway, with OpenAI, I kind of got into it gradually more and more, it started off as one of six YC research projects and one of hundreds of companies I was spending time on and then it just went like this and I was obsessed.

The curve of your time was a predictor of the curve of the entire entity in many regards.

SA: Yeah.

Maybe the most consequential year for OpenAI — well, I mean there’s going to be a lot of consequential years here — but from a business strategy nerd perspective, my sort of corner of the Internet — was 2019, I think. You released GPT-2. You don’t open source the model immediately and you create a for-profit structure, raise money from Microsoft. Both of these were in some senses violation of the original OpenAI vision, but I guess I struggle because I’m talking to you, not like OpenAI as a whole, to see any way in which these were incompatible with your vision, they were just things that needed to be done to achieve this amazing thing. Is that a fair characterization?

SA: First of all, I think they’re pretty different. Like the GPT-2 release, there were some people who were just very concerned about, you know, probably the model was totally safe, but we didn’t know we wanted to get — we did have this new and powerful thing, we wanted society to come along with us.

Now in retrospect, I totally regret some of the language we used and I get why people are like, “Ah man, this was like hype and fear-mongering and whatever”, it was truly not the intention. The people who made those decisions had I think great intentions at the time, but I can see now how it got misconstrued.

The fundraising — yeah, that one was like, “Hey, it turns out we really need to scale, we’ve figured out scaling laws and you know, we’ve got to figure out a structure that’ll let us do this”. I’m curious though, as a business strategy nerd, how are we doing?

Well, we’re going to get to that, I am very interested in some of these contextual questions. Just one more touch on the nonprofit — the story, you talk about the myth. The myth is this was, the altruistic reasons you put forward and also this was a way to recruit against Google. Is that all there is to it?

SA: Why be a nonprofit?

Yeah. Why be a nonprofit and all the problems that come with that?

SA: Because we thought we were going to be a research lab. We literally had no idea we were ever going to become a company. Like the plan was to put out research papers. But there was no product, there was no plan for a product, there was no revenue, there was no business model, there was no plan for those things. One thing that has always served me well in life is just stumble your way in the dark until you find the light and we were stumbling in the dark for a long time and then we found the thing that worked.

Right. But isn’t this thing kind of like a millstone around the company’s neck now? If you could do it over again, would you have done it differently?

SA: Yeah. If I knew everything I knew now, of course. Of course we would have set it up differently, but we didn’t know everything we knew now and I think the price of being on the forefront of innovation is you make a lot of dumb mistakes because you’re so deep in the fog of war.

The ChatGPT Origin Story

Tell me the ChatGPT story? Whose idea was it? What were the first days and weeks like? And we’ve talked offline before, you’ve expressed that this was just sort of a total shock. I mean is that still the story?

SA: It was a shock. Like look, obviously we thought that someday AI products were going to be huge. So to set the context, we launched GPT-3 in the API, and at that point we knew we needed to be a company and offer products, and GPT-3 was doing fine. It was not doing great, but it was doing fine. It had found real product-market fit in a single category. This sounds ridiculous to say now, but copywriting was the one thing where people were able to like build a real business using the GPT-3 API.

Then with 3.5 and 4, a lot of people could use it for a lot of things, but this was like back in the kind of dark ages and other than that, the thing people were doing with it was developers. We have this thing called The Playground where you could test things on the model, and developers were trying to chat with the model and they just found it interesting. They would talk to it about whatever they would use it for, and in these larval ways of how people use it now, and we’re like, “Well that’s kind of interesting, maybe we could make it much better”, and there were like vague gestures at a product down the line, but we knew we wanted to build some sort of — we had been thinking about a chatbot for a long time. Even before the API, we had talked about a chatbot as something to build, we just didn’t think the model was good enough.

So there was this clear demand from users, we had also been getting better at RLHF and we thought we could tune the model to be better and then we had this thing that no one knew about at the time, which was GPT-4, and GPT-4 was really good. In a world where the external world was used to GPT-3 or maybe 3.5 was already out, I don’t remember, I think it was by the time we launched ChatGPT — it was, but not 4. We’re like, “This is going to be really good”.

We had decided we wanted to build this chat product, we finished training GPT-4 in, I think August of that year, we knew we had this marvel on our hands, and the original plan had been to launch ChatGPT, well, launch a chatbot product with GPT-4, and because we had all gotten used to GPT-4, we no longer thought 3.5 was very good and there was a lot of fear that if we launched something with 3.5 that no one was going to care and people weren’t going to be impressed.

But there were also some people, and I was one of them, that had started to think, “Man, if we put out GPT-4 and the chat interface at the same time, that’s going to be, that’s basically a pass of the Turing test”. Not quite literally, but it’s going to feel like that if people haven’t seen this. So let’s break it out, and let’s launch a research preview of the chat interface and the chat-tuned model with 3.5, and some people wanted to do it, but most people didn’t, most people didn’t think it was going to be very good.

So finally I just said, “Let’s do it”, and then at that time, we still thought we were going to launch GPT-4 in January, so we’re like, “All right, if we’re going to do this, let’s get it out before the holidays”, so if someone gets assigned to this or whatever. We decided we’re going to do it, I think it was shortly after Thanksgiving.

Yeah, it was late November. I can’t remember if it was before or after, but right around then, yeah.

SA: I think it was after because I was out on vacation or something and I came back and they’re ready to go, “We’re launching the next day”, or today or whatever it was, “And we’re going to call it Chat with GPT 3.5”, and I was like, “Absolutely not”. We very quickly made a snap decision for “ChatGPT” and put it out either that day or the next day. A lot of people thought it was going to totally flop, I thought it was going to do well, no one obviously thought it was going to do as well as it did, and then it was just this crazy thing. I think it’s amazing.

Did it save you from a scaling perspective that you did 3.5 just because it was cheaper to serve?

SA: Yes, but not probably in the way you mean. It saved us in the scaling perspective because if we had, just to keep the actual systems and engineering up and running was crazy. It was already a pretty viral moment, and if we had launched ChatGPT with 4, it would have been a mega, mega viral moment. One of the craziest things that I’ve ever seen happen in Silicon Valley was that six months where we went from basically not a company to a whole big company. We had to build out the corporate infrastructure, all the people and the stuff to serve this, it was a crazy time, and the fact that there was a little bit less demand because the model was much worse than 4 turned out to be, that was really important.

Did it hurt you in the long run though? Because some people tried it then and they never updated their priors. I mean, you fast-forward to DeepSeek, and it’s like, “Wow, this is so much better”, it’s like, “Well yeah, because you haven’t been using the intervening models”.

SA: I think it’d be hard to argue we were too hurt by that, we’re doing pretty well right now.

You’re doing fine.

SA: Yeah, but your point though is a great one, which is if you think about how embarrassing that model was, that was a model that could barely be coherent, and now we have these things that are replacing Google for a lot of people connected to the Internet, very smart, writing whole programs. Incredible progress in the two years and four months that it’s been.

The first interview I did with you and Kevin Scott that I referenced at the beginning was to mark the release of New Bing, which was just a couple of months later, I believe that was when GPT-4 first launched.

SA: It was.

You were pretty ebullient. On the call, you had some strong words for a search giant out there who shouldn’t be feeling too happy. Were you aware then that the one potentially disrupting Google search would actually probably end up being you, not Microsoft?

SA: I think I had some hope for that, yeah.

There’s been a lot of drama at OpenAI to say the least, which could have influenced your relationship with Microsoft, who I know you have nothing but good things to say about, so I’m not going to press you on it, but is there a sense where when you entered into that partnership with them back in 2019, no one imagined something like ChatGPT, which meant you’re both end user facing entities and maybe that was just inevitably going to be a real problem?

SA: Yeah, I mean, no one thought we were going to be one of the giant consumer companies then. That’s true.

Yeah, I mean, I think to me, this is the golden key to unpack a lot of OpenAI stuff. I remember going back then, Nat Friedman and Daniel Gross and I started this AI interview series in October 2022, a month before ChatGPT came out, and our whole point was no one knows about this AI stuff, people need to build products. And then a month later, the product launches, people complain about chatbots. But you look at any teenager, you look at how people interact, they text all the time.

SA: This was actually one of my insights about why I wanted to do that. It is the thing that young people mostly do.

You had a double whammy internally. People were over-indexed on the good model, didn’t appreciate how much the bad model would blow people’s minds and you had a bunch of boomers who didn’t like texting.

SA: There’s not that many boomers at OpenAI.

Boomer in the colloquial term, not the real boomers.

SA: Yeah, I would say I may be less mature than the average OpenAI employee.

I do have to ask actually on behalf of my Sharp Tech co-host Andrew Sharp, the all lowercase thing, is that because AI uses all lowercase or is that just a thing?

SA: No, I’m just an Internet kid, I didn’t use capitals before AI came along either.

Yeah, see I’m the last year of Gen X, I get to scoff at these Millennials out there, it’s just terrible.

A Consumer Tech Company

I have some more theories I want to run by you to this point about ChatGPT and no one expected you to be a consumer tech company. This has been my thesis all along: you were a research lab, and sure, we’ll throw out an API and maybe make some money, but you mentioned that six month period of scaling up and having to seize this opportunity that was basically thrust in your lap. There’s a lot of discussion in tech about employee attrition and some famous names that have left, and things along those lines, it seems to me that no one signed up to be a consumer product company. If they wanted to work at Facebook, they could have worked at Facebook. That is also the other core tension: you have this opportunity whether you want it or not, and that means it’s a very different place than it was originally.

SA: Look, I don’t get to complain, right? I got the best job in tech and it’s very unsympathetic if I start complaining about how this is not what I wanted, and how unfortunate for me and whatever. But, what I wanted was to get to run an AGI research lab and figure out how to make AGI. I did not think I was signing up to have to run a big consumer Internet company. I knew from my previous job, which also at the time I think was the best job in tech so I guess I’m very, very lucky twice, I knew how much it takes over your life and how difficult in some ways it is to have to run one of these giant consumer companies.

But I also knew what to do because I had coached a lot of other people through it and watched a lot. When we put out ChatGPT, every day, there’d be a surge of users, it would break our servers. Then night time would come, it would fall, and everyone was like, “It’s over, that was just a viral moment”, and then the next day the peak would get higher, fall down, “It’s over”. Next day the peak would get higher, and by the fifth day I was like, “Oh man, I know what’s going to happen here, I’ve seen this movie a bunch of times”.

Had you seen this movie a bunch of times, though? Because the whole name of the game is it’s about customer acquisition and so for a lot of startups, that’s the whole challenge. The actual list of companies that solve customer acquisition organically, virally is actually very, very short. I mean, I think the company that really precedes OpenAI in this category is Facebook, which was in the mid 2000s. I think you’re overrating how much you might’ve seen this before.

SA: Okay. At this scale, yes, it is maybe the biggest. I guess we are the biggest company since Facebook to be started probably.

Consumer tech companies of this scale are actually shockingly rare, it does not happen very often.

SA: Yeah. But I had seen Reddit and Airbnb and Dropbox and Stripe and many other companies that just hit this wild product-market fit and runaway growth, so maybe I hadn’t seen anything of this magnitude. At the time you don’t know what it’s going to be, but I had seen this early pattern with others.

Did you tell people this was coming? Or was that something you just could not communicate?

SA: I did, no, I got the company together and I’m like, “This is going to be very crazy and we have a lot of work to do that we have to do very quickly but this is a crazy opportunity that fell into our life and we’re going to go do it and here’s what that’s going to look like”.

Did anyone understand you or believe you?

SA: I remember one night I went home and I was just head in my hands like this and I was like, “Man, fuck, Oli [Oliver Mulherin], this is really bad”. And he’s like, “I don’t get it, this seems really great”, and I was like, “It’s really bad, it’s really bad for you too, you just don’t know it yet, but here’s what’s going to happen”. But no, I think no one, it was this quirk of the previous experience I had that I could recognize it early and no one felt quite how crazy it was going to get in that first couple of weeks.

What’s going to be more valuable in five years? A 1-billion daily active user destination site that doesn’t have to do customer acquisition, or the state-of-the-art model?

SA: The 1-billion user site I think.

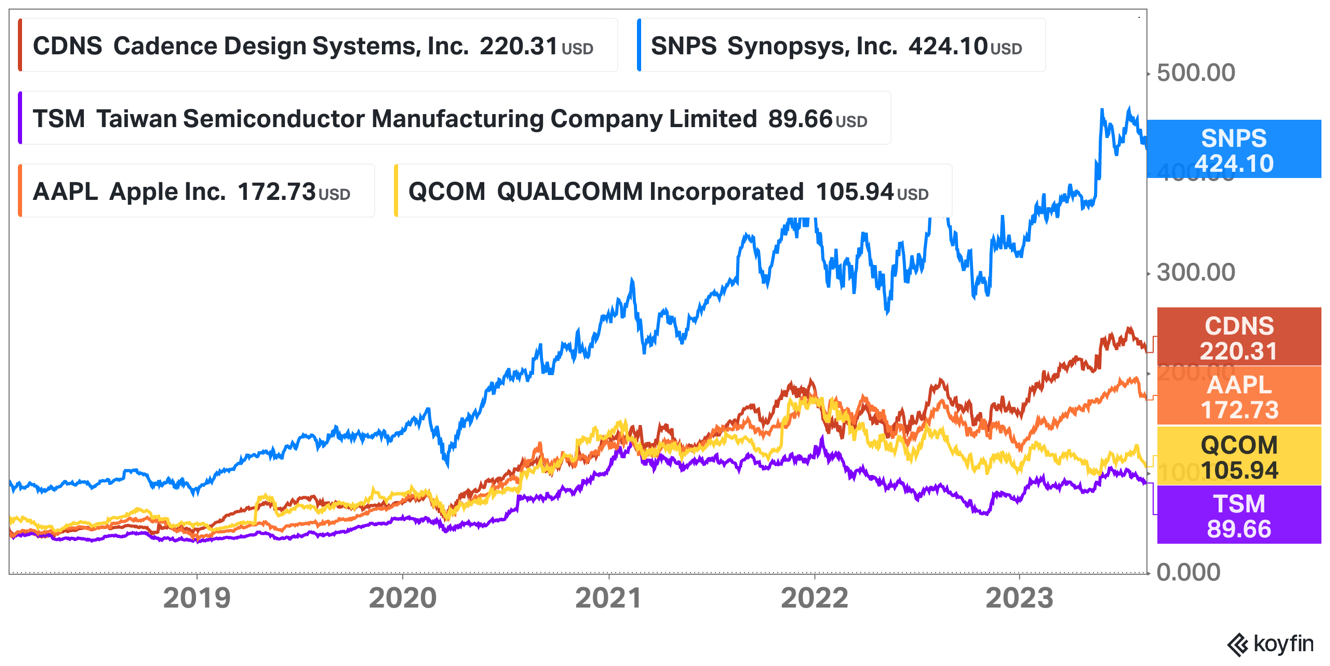

Is that the case regardless, or is that augmented by the fact that it seems, at least at the GPT-4 level, I mean, I don’t know if you saw today LG just released a new model. There’s going to be a lot of, I don’t know, no comments about how good it is or not, but there’s a lot of state-of-the-art models.

SA: My favorite historical analog is the transistor for what AGI is going to be like. There’s going to be a lot of it, it’s going to diffuse into everything, it’s going to be cheap, it’s an emerging property of physics and it on its own will not be a differentiator.

What will be the differentiator?

SA: Where I think there’s strategic edges, there’s building the giant Internet company. I think that should be a combination of several different key services. There’s probably three or four things on the order of ChatGPT, and you’ll want to buy one bundled subscription of all of those. You’ll want to be able to sign in with your personal AI that’s gotten to know you over your life, over your years to other services and use it there. There will be, I think, amazing new kinds of devices that are optimized for how you use an AGI. There will be new kinds of web browsers, there’ll be that whole cluster, someone is just going to build the valuable products around AI. So that’s one thing.

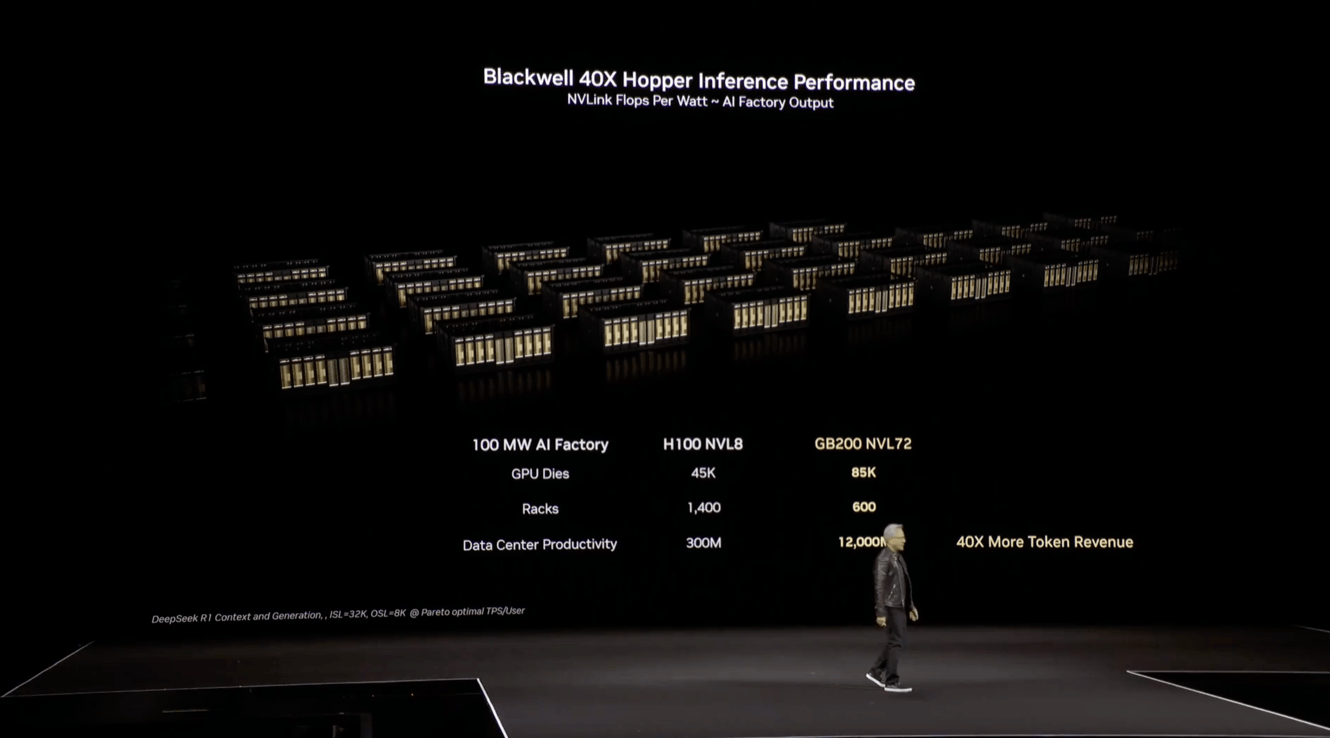

There’s another thing, which is the inference stack, so how you make the cheapest, most abundant inference. Chips, data centers, energy, there’ll be some interesting financial engineering to do, there’s all of that.

And then the third thing is there will be just actually doing the best research and producing the best models. I think that is the triumvirate of value, but most models except the very, very leading edge, I think will commoditize pretty quickly.

So when Satya Nadella said models are getting commoditized, that OpenAI is a product company, that’s still a friendly statement, we’re still on the same team there?

SA: Yeah, I don’t know if it came across as a compliment to most listeners, I think he meant that as a compliment to us.

I mean, that’s how I interpret it. You asked my interpretation of the strategy, I wrote very early on after ChatGPT that this is the accidental consumer tech company.

SA: I remember when you wrote that, yeah.

It’s the most — like I said, this is the most rare opportunity in tech. I think I’ve gotten a lot of mileage on strategic writing about Facebook just because it’s such a rare entity and I was latched on to, “No, you have no idea where this is going”, but I didn’t start till 2013, I missed the beginning. I’ve been doing Stratechery for 12 years, I feel like this is the first company I’ve been able to cover from the get-go that is on that scale.

SA: It doesn’t come along very often.

It doesn’t. But to that point, you just released a big API update, including access to the same computer use model that undergirds Operator, a selling point for ChatGPT Pro. You also released the Responses API and I thought the most interesting part about the Responses API is you’re saying, “Look, we think this is much better than the Chat Completions API, but of course we’ll maintain that, because lots of people have built on that”. It’s sort of become the industry standard, everyone copied your API. At what point does this API stuff and having to maintain old ones and pushing out your features to the new ones turn into a distraction and a waste of resources when you have a Facebook-level opportunity in front of you?

SA: I really believe in this product suite thing I was just saying. I think that if we execute really well, five years from now, we have a handful of multi-billion user products, small handful and then we have this idea that you sign in with your OpenAI account to anybody else that wants to integrate the API, and you can take your bundle of credits and your customized model and everything else anywhere you want to go. And I think that’s a key part of us really being a great platform.

Well, but this is the tension Facebook ran into. It’s hard to be a platform and an Aggregator, to use my terms. I think mobile was great for Facebook because it forced them to give up on pretensions of being a platform. You couldn’t be a platform, you had to just embrace being a content network with ads. And ads are just more content and it actually forced them into a better strategic position.

SA: I don’t think we’ll be a platform in a way that an operating system is a platform. But I think in the same way that Google is not really a platform, but people use sign in with Google and people take their Google stuff around the web and that’s part of the Google experience, I think we’ll be a platform in that way.

The carry around the sign-in, that’s carrying around your memory and who you are and your preferences and all that sort of thing.

SA: Yeah.

So you’re just going to sit on top of everyone then and will they be able to have multiple sign-ins and the OpenAI sign in is going to be better because it has your memory attached to it? Or is it a, if you want to use our API, you use our sign-in?

SA: No, no, no. It’d be optional, of course.

And you don’t think it’s going to be a distraction or a bifurcation of resources when you have this huge opportunity in front of you?

SA: We do have to do a lot of things at once, that is a difficult part of, I think in many ways, yeah, I think one of the challenges I find most daunting about OpenAI is the number of things we have to execute on really well.

Well, it’s the paradox of choice. There’s so many things you could do in your position.

SA: We don’t do a lot, we say no to almost everything. But still, if you just think about the core set of things, I think we do have to do, I don’t think we can succeed at just doing one thing.

Advertising

From my perspective, when you talk about serving billions of users and being a consumer tech company, this means advertising. Do you disagree?

SA: I hope not. I’m not opposed. If there is a good reason to do it, I’m not dogmatic about this. But we have a great business selling subscriptions.

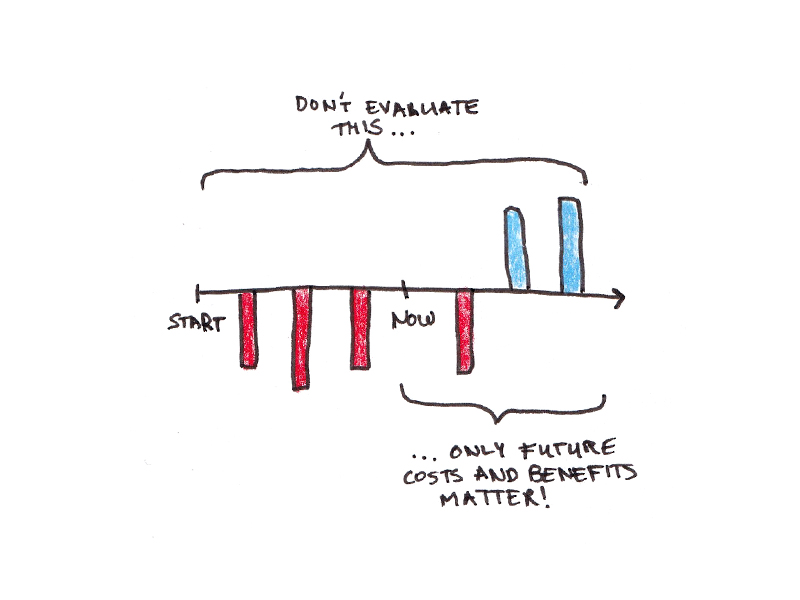

There’s still a long road to being profitable and making back all your money. And then the thing with advertising is it increases the breadth of your addressable market and increases the depth because you can increase your revenue per user and the advertiser foots the bill. You’re not running into any price elasticity issues, people just use it more.

SA: Currently, I am more excited to figure out how we can charge people a lot of money for a really great automated software engineer or other kind of agent than I am making some number of dimes with an advertising based model.

I know, but most people aren’t rational. They don’t pay for productivity software.

SA: Let’s find out.

I pay for ChatGPT Pro, I’m the wrong person to talk to, but I just-

SA: Do you think you get good value out of it?

Of course, I do. I think-

SA: Great.

—Especially Deep Research, it’s amazing. But I am maybe more skeptical about people’s willingness to go out and pay for something, even if the math is obvious, even if it makes them that much more productive. And meanwhile, I look at this bit where you’re talking about building memory. Part of what made the Google advertising model so brilliant is they didn’t actually need to understand users that much because people typed into the search bar what they were looking for. People are typing a tremendous amount of things into your chatbot. And even if you served the dumbest advertising ever, in many respects, and even if you can’t track conversions, your targeting capability is going to be out of this world. And, by the way, you don’t have an existing business model to worry about undercutting. My sense is this is so counter to what everyone at OpenAI signed up for, that’s the biggest hurdle. But to me, from a business analyst, this seems super obvious and you’re already late.

SA: The kind of thing I’d be much more excited to try than traditional ads is a lot of people use Deep Research for e-commerce, for example, and is there a way that we could come up with some sort of new model, which is we’re never going to take money to change placement or whatever, but if you buy something through Deep Research that you found, we’re going to charge like a 2% affiliate fee or something. That would be cool, I’d have no problem with that. And maybe there’s a tasteful way we can do ads, but I don’t know. I kind of just don’t like ads that much.

That’s always the hang up. Mark Zuckerberg didn’t like ads that much either, but he found someone to do it anyway and “Just don’t tell me about it”. Magically make money appear.

SA: Yeah. Again, I like our current business model. I’m not going to say what we will and will never do because I don’t know, but I think there’s a lot of interesting ways that are higher on our list of monetization strategies than ads right now.

Do you think there was a bit, when DeepSeek appeared and sort of exploded and people had access and saw this reasoning, part of that was people who used ChatGPT were less impressed because they used o1, they knew what was possible.

SA: Yep.

But not free users or not people that only dipped in once. Was that actually a place where your reticence maybe made this other product look more impressive?

SA: Totally. I think DeepSeek was — they made a great team and they made a great model, but the model capability was, I think, not the thing there that really got them the viral moment. But it was a lesson for us about when we leave a feature hidden, we left chains of thought hidden, we had good reasons for doing it, but it does mean we leave space for somebody else to have a viral moment. And I think in that way it was a good wake-up call. And also, I don’t know, it convinced me to really think differently about what we put in the free tier and now the free tier is going to get GPT-5 and that’s cool.

Ooo, ChatGPT-5 hint. Well, I’ll ask you more about that later.

When you think about your business model, the thing I come back to is your business model is great for high agency people, people who are going to go out and use ChatGPT and they’re going to pay for it because they see the value. How many people are high agency? And also, the high agency people are going to try all the other models, so you’re going to have to stay at a pretty high standard. As opposed to, I have a good model that works and it’s there and I don’t have to pay. And it keeps getting better and people are making more money off me along the way, but I don’t know because I’m fine with ads, which most of the Internet population is.

SA: Again, open-minded to whatever we need to do, but more excited about things like that commerce example I suggested than kind of traditional ads.

Competition

Was there a sense with DeepSeek where you wondered why don’t people cheer for US companies? Did you feel some of the DeepSeek excitement was also sort of anti-OpenAI sentiment?

SA: I didn’t. Maybe that was, but I certainly didn’t feel that, I think there were two things. One is they put a frontier model in a free tier. And the other is they showed the chain of thought, which is sort of transfixing.

People were like, “Oh, it’s so cute. The AI’s trying to help me.”

SA: Yeah. And I think it was mostly those two things.

In your recent proposal about the AI Action Plan, OpenAI expressed concern about companies building on DeepSeek’s models, which are, in one of the phrases about them, “freely available”. Isn’t the solution, if that’s a real concern, to make your models freely available?

SA: Yeah, I think we should do that.

So when-

SA: I don’t have a launch to announce, but directionally, I think we should do that.

You said before, the one billion destination site is more valuable than the model. Should that flow all the way through to your release strategy and your thoughts about open sourcing?

SA: Stay tuned.

Okay, I’ll stay tuned. Fair enough.

SA: I’m not front-running, but stay tuned.

I guess the follow-on question to that is, is this a chance to actually get back to your original mission? If you go back to the initial statement, DeepSeek and Llama…

SA: Ben, I’m trying to give you as much of a hint as I can without coming out and saying it. Come on.

(laughing) Okay, fine. Fair enough. Fair enough. Is there a sense, is this freeing? Right? You go back to that GPT-2 announcement and the concerns about safety and whatever it might be. It seems quaint at this point. Is there a feeling that the cat’s out of the bag? What purpose is served at this point in being sort of precious about these releases?

SA: I still think there can be big risks in the future. I think it’s fair that we were too conservative in the past. I also think it’s fair to say that we were conservative, but a principle of being a little bit conservative when you don’t know is not a terrible thing. I think it’s also fair to say that at this point, this is going to diffuse everywhere and whether it’s our model that does something bad or somebody else’s model that does something bad, who cares? But I don’t know, I’d still like us to be as responsible an actor as we can be.

Another recent competitor is Grok. And I will say from my perspective, I’ve had two, I think interesting psychological experiences with AI over the last year or so. So one is running local models on my Mac. For some reason, I’m just very aware that it’s on my Mac, it’s not running anywhere else, and it’s actually a really kind of a great feeling. And then number two is with Grok, I don’t feel like I’m going to get a scold dropping in at random points in time. And I think, to give you credit, ChatGPT has gotten much better about this over time. But does Grok make you feel like actually yeah, we can go a lot further on this and let users be adults?

SA: Actually, I think we got better. I think we were really bad about that a while ago, but I think we’ve been better on that for a long-

I agree. It has gotten better.

SA: It was one of the things I was most animated about our offering for a long time. And now I kind of like, it doesn’t bother me as a user, I think we’re in a good place. So I used to think about that a lot, but in the last like six or nine months, I haven’t.

Hallucinations and Regulation

Is there a bit where isn’t hallucination good? You released a sample of a writing model, and it sort of tied into one of my longstanding takes that everyone is working really hard to make these probabilistic models behave like deterministic computing, and almost missing the magic, which is they’re actually making stuff up. That’s actually pretty incredible.

SA: 100%. If you want something deterministic, you should use a database. The cool thing here is that it can be creative and sometimes it doesn’t create quite the thing you wanted. And that’s okay, you click it again.

Is that an AI lab problem that they’re trying to do this? Or is that a user expectation problem? How can we get everyone to love hallucinations?

SA: Well, you want it to hallucinate when you want and not hallucinate when you don’t want. If you’re asking, “Tell me this fact about science,” you’d like that not to be a hallucination. If you’re like, “Write me a creative story,” you want some hallucination. And I think the problem, the interesting problem is how do you get models to hallucinate only when it benefits the user?

How much of a problem do you see when, these prompts are exfiltrated, they’ll say things like, “Don’t reveal this,” or, “Don’t say this,” or, “Don’t do X, Y, Z.” If we’re worried about safety and alignment, isn’t teaching AIs to lie a very big problem?

SA: Yeah. I remember when xAI was really getting dunked on because in the system prompt, it said something about don’t say bad things about Elon Musk or whatever. And that was embarrassing for them, but I felt a little bit bad because the model is just trying to follow the instructions that are given to it.

Right. It’s very earnest.

SA: Very earnest. Yeah. So yes, it was a stupid thing to do, of course, and embarrassing, of course, but I don’t think it was the meltdown that was represented.

I think some skeptics, including me, have framed some aspects of your calls for regulation as an attempt to pull up the ladder on would-be competitors. I’d ask a two-part question. Number one, is that unfair? And if the AI Action Plan did nothing other than institute a ban on state level AI restrictions and declare that training on copyrighted materials is fair use, would that be sufficient?

SA: First of all, most of the regulation that we’ve ever called for has been just say on the very frontier models, whatever is the leading edge in the world, have some standard of safety testing for those models. Now, I think that’s good policy, but I sort of increasingly think the world, most of the world does not think that’s good policy, and I’m worried about regulatory capture. So obviously, I have my own beliefs, but it doesn’t look to me like we’re going to get that as policy in the world and I think that’s a little bit scary, but hopefully, we’ll find our way through as best as we can and probably it’ll be fine. Not that many people want to destroy the world.

But for sure, you don’t want to go put regulatory burden on the entire tech industry. Like we were calling for something that would have hit us and Google and a tiny number of other people. And again, I don’t think the world’s going to go that way and we’ll play on the field in front of us. But yes, I think saying that fair use is fair use and that states are not going to have this crazy complex set of differing regulations, those would be very, very good.

You are supporting export controls, or by you, I mean OpenAI, in this policy paper. You talked about the whole stack, that triumvirate. Do you worry about a world where the US is dependent on Taiwan and China is not?

SA: I am worried about the Taiwan dependency, yes.

Is there anything that OpenAI can do? Would you make a commitment to buy Intel produced chips, for example, if they have a new CEO who is going to refocus on AI? Can OpenAI help with that?

SA: I have thought a lot about what we can do here for the infrastructure layer and supply chain in general. I do not have a great idea yet. If you have any, I’d be all ears, but I would like to do something.

Okay, sure. Intel needs a customer. That’s what they need more than anything, a customer that is not Intel. Get OpenAI, become the leading customer for the Gaudi architecture, commit to buying a gazillion chips and that will help them. That will pull them through. There’s your answer.

SA: If we were making a chip with a partner that was working with Intel and a process that was compatible and we had, I think, a sufficiently high belief in their ability to deliver, we could do something like that. Again, I want to do something. So I’m not trying to dodge.

No, I’m being unfair too because I just told you you need to focus on building your consumer business and cut off the API. Getting into sustaining US chip production is being very unfair.

SA: No, no, no, I don’t think it’s unfair. I think we have, if we can do something to help, I think we have some obligation to do it, but we’re trying to figure out what that is.

The AI Outlook

So Dario and Kevin Weil, I think, have both said, in various ways, that 99% of code authorship will be automated by sort of the end of the year, a very fast timeframe. What do you think that fraction is today? When do you think we’ll pass 50% or have we already?

SA: I think in many companies, it’s probably past 50% now. But the big thing I think will come with agentic coding, which no one’s doing for real yet.

What’s the hangup there?

SA: Oh, we just need a little longer.

Is it a product problem or is it a model problem?

SA: Model problem.

Should you still be hiring software engineers? I think you have a lot of job listings.

SA: I mean, my basic assumption is that each software engineer will just do much, much more for a while. And then at some point, yeah, maybe we do need less software engineers.

By the way, I think you should be hiring more software engineers. I think that’s part and parcel of my case here is I think you need to be moving even faster. But you mentioned GPT-5. I don’t know where it is, we’ve been expecting it for a long time now.

SA: We only got 4.5 two weeks ago.

I know, but we’re greedy.

SA: That’s fine. You don’t have to wait. The new one won’t be super long.

What is AGI? And there’s a lot of definitions from you. There’s a lot of definitions in OpenAI. What is your current, what’s the state-of-the-art definition of AGI?

SA: I think what you just said is the key point, which is it’s a fuzzy boundary of a lot of stuff and it’s a term that I think has become almost completely devalued. Some people, by many people’s definition, we’d be there already, particularly if you could go like transport someone from 2020 to 2025 and show them what we’ve got.

Well, this was AI for many, many years. AI was always what we couldn’t do. As soon as we could do it, it’s machine learning. And as soon as you didn’t notice it, it was an algorithm.

SA: Right. I think for a lot of people, it’s something about like a fraction of the economic value. For a lot of people, it’s something about a general purpose thing. I think they can do a lot of things really well. For some people, it’s about something that doesn’t make any silly mistakes. For some people, it’s about something that’s capable of self-improvement, all those things. It’s just there’s not good alignment there.

What about an agent? What is an agent?

SA: Something that can like go autonomously, do a real chunk of work for you.

To me, that’s the AGI thing. That is employee replacement level.

SA: But what if it’s only good at like some class of tasks and can’t do others? I mean, some employees are like that too.

Yeah, I was thinking about this because this is a total redefinition where AGI was supposed to be everything, but now we have ASI. ASI, a super intelligence. To me, that is a nomenclature problem. ASI, yes, can do any job we give it to. If I get an AI that does one specific job, coding or whatever it might be, and it consistently does, I can give it a goal and it accomplishes the goal by figuring out the intervening steps. To me, that is a clear paradigm shift from where we’re at right now where you do have to guide it to a significant extent.

SA: If we have a great autonomous coding agent, will you be like, “OpenAI did it, they made AGI”?

Yes. That’s how I’ve come to define it. And I agree that’s almost a whole lessening of what AGI used to mean. But I just substitute ASI for AGI.

SA: Do we get a little like Ben Thompson gold star for our wall?

(laughing) Sure, there you go. I’ll give you my circuit pen.

SA: Great.

You and the folks in these labs talk about what you’re seeing and how no one is ready and there’s all these sort of tweets that float around and get people worked up and you dropped a couple hints on this podcast. Very exciting. However, you’ve been talking about this for like quite a while now. Have your releases, you look at the world which, in some respects, still looks the same? Have your releases been less than you expected or have you been surprised by the capacity of humans to absorb change?

SA: Very much the last. I think there have been a few moments where we’ve done something that has really had the world melt down and be like, “What the…this is like totally crazy.” And then two weeks later, everyone’s like, “Where’s the next version?”

Well, I mean, you also did this with your initial strategy because ChatGPT blew everyone’s mind. Then ChatGPT-4 comes out not long afterwards and they’re like, “Oh my word. What’s the pace that we’re on here?”

SA: I think we’ve put out some incredible stuff and I think it’s actually a great thing about humanity that people adapt and just want more and better and faster and cheaper. So I think we have overdelivered and people have just updated.

Given that, does that make you more optimistic, less optimistic? Do you see this bifurcation that I think there’s going to be between agentic people? This is a different agentic word, but see where we’re going. We need to invent more words here. We’ll ask ChatGPT to hallucinate one for us. People who will go and use the API and the whole Microsoft Copilot idea is you have someone accompanying you and it’s a lot of high talk, “Oh, it’s not going to replace jobs, it’s going to make people more productive”. And I agree that will happen for some people who go out to use it. But you look back, say, at PC history. The first wave of PCs were people who really wanted to use PCs. PCs, a lot of people didn’t. They had one put on their desk and they had to use it for a specific task. And really, you needed a generational change for people to just default to using that. Is AI, is that the real limiting factor here?

SA: Maybe, but that’s okay. Like as you mentioned, that’s kind of standard for other tech evolutions.

But you go back to the PC example, actually, the first wave of IT was like the mainframe, wiped out whole back rooms. And because actually, it turned out the first wave is the job replacement wave because it’s just easier to do a top-down implementation.

SA: My instinct is this one doesn’t quite go like that, but I think it’s always like super hard to predict.

What’s your instinct?

SA: That it kind of just seeps through the economy and mostly kind of like eats things little by little and then faster and faster.

You talk a lot about scientific breakthroughs as a reason to invest in AI, Dwarkesh Patel recently raised the point that there haven’t been any yet. Why not? Can AI actually create or discover something new? Are we over-indexing on models that just aren’t that good and that’s the real issue?

SA: Yeah, I think the models just aren’t smart enough yet. I don’t know. You hear people with Deep Research say like, “Okay, the model is not independently discovering new science, but it is helping me discover new science much faster.” And that, to me, is like pretty much as good.

Do you think a transformer-based architecture can ever truly create new things or is it just spitting out the median level of the Internet?

SA: Yes.

Well, what’s going to be the breakthrough there?

SA: I mean, I think we’re on the path. I think we just need to keep doing our thing. I think we’re like on the path.

I mean, is this the ultimate test of God?

SA: How so?

Do humans have innate creativity or is it just recombining knowledge in different sorts of ways?

SA: One of my favorite books is The Beginning of Infinity by David Deutsch, and early on in that book, there’s a beautiful few pages about how creativity is just taking something you saw before and modifying it a little bit. And then if something good comes out of it, someone else modifies it a little bit and someone else modifies it a little bit. And I can sort of believe that. And if that’s the case, then AI is good at modifying things a little bit.

To what extent is the view that you could believe that grounded in your long-standing beliefs versus what you’ve observed, because I think this is a very interesting — not to get all sort of high-level metaphysical or feel, like I said, theological almost — but there does seem to be a bit where one’s base assumptions fuel one’s assumptions about AI’s possibilities. And then, most of Silicon Valley is materialistic, atheistic, however you want to put it. And so of course, we’ll figure it out, it’s just a biological function, we can recreate it in computers. If it turns out we never actually do create new things, but we augment humans creating new things, would that change your core belief system?

SA: It’s definitely part of my core belief system from before. None of this is anything new, but no, I would assume we just didn’t figure out the right AI architecture yet and at some point, we will.

Final question is on behalf of my daughter, she is graduating from high school this year. What career advice do you have for a graduating senior from high school?

SA: The obvious tactical thing is just get really good at using AI tools. Like when I was graduating as a senior from high school, the obvious tactical thing was get really good at coding. And this is the new version of that.

The more general one is I think people can cultivate resilience and adaptability and also things like figuring out what other people want and how to be useful for people. And I would go practice that. Like whatever you study, the details of it maybe don’t matter that much. Maybe they never did. It’s like the valuable thing I learned in school is the meta ability to learn, not any specific thing I learned. And so whatever specific thing you’re going to learn, like learn these general skills that seem like they’re going to be important as the world goes through this transition.

Sam, it’s good to talk to you. I think the last time we really talked was actually, just to be total douchebags, at Davos, I can’t even pronounce it. Of course, everyone was wanting to talk to you. You were the rock star that was there. You feigned — I interpreted it as feigning a surprise that, “Oh, why do people care about little old me?” I guess I have to ask you, were you feigning or how does it feel to be the biggest name in tech?

SA: I had never been to Davos. That was like a one-time thing for me.

(laughing) Me too.

SA: Quite miserable experience. But that was a weird experience of feeling like a genuine celebrity, and my takeaway was that says much more about the people that go to Davos than it says about me. But I did not enjoy it there. It is weird to go from being relatively unknown to quite well known in tech in a couple of years.

Quite well known in the world.

SA: When I’m in SF, I feel like very observed. And if I’m in some other city, I kind of get left alone.

Oh, you just explained why I don’t want to live in SF. I mean, I’m at a much smaller scale than you.

SA: Yeah. But it has been a strange and not always super fun experience.

Sam Altman, good to talk. Hope to talk again soon.

SA: You too. Thank you.
