Why DeepSeek’s arrival looks like a key moment for safe AI

The AI industry is growing up fast. New model releases are now a regular event and premium AI features are quickly overtaken by free or freemium alternatives. Exhibit A: OpenAI unveiled its Deep Research tool, which can write reports on complex topics in minutes, as part of its $200-a-month Pro package, but rival Perplexity gives non-subscribers some access to its Deep Research assistant free of charge. (Yes, Google Gemini’s agentic research assistant is also called Deep Research.) With fewer fundamental breakthroughs, the likes of OpenAI, Anthropic, and xAI are slugging it out over incremental improvements in search and reasoning performance. As AI pricing falls and performance gaps close, the focus has shifted from novelty applications to finding real business value. It’s a new era for AI Agentic AI is the game-changer. Gartner forecasts that 33% of enterprise software applications will include agentic AI by 2028, a drastic increase from less than 1% in 2024. Some 15% of day-to-day work decisions could be made autonomously by AI agents, hiking business productivity and freeing up workers for more strategic tasks. It’s probably no surprise, then, that OpenAI—which famously took 4.5 years to launch ChatGPT “without any idea of who our customer was going to be,” according to CEO Sam Altman—is releasing its first-ever product roadmap. Nothing says “maturing market” like a product roadmap. As Finn Murphy, a founder and venture capitalist, posted on X from the AI Action Summit in Paris, where the EU said it would “mobilize” €200 billion for AI investment: “It really feels like the era of interesting technical breakthroughs being announced is over and the era of policy, partnerships, and money announcements is here.” Security matters Growing up brings responsibilities, of course, especially at the enterprise level. Among the 1,803 C-suite executives surveyed for the Boston Consulting Group (BCG) AI Radar, published in January, 76% recognized that their AI cybersecurity measures need further improvement. If anything, that number should be closer to 100%. Execs ranked data privacy and security as the top AI risk. Regulatory challenges and compliance also featured strongly. Their fears are not unfounded: AI applications open up a new attack surface for threat actors and security researchers have already succeeded in “breaking” all of the world-class AI models to some extent. Still, it took the shock arrival of China’s DeepSeek to properly push AI security into the mainstream. It is notable that consumers and corporates have concerns about a Chinese entity having their data but seem content that U.S. and Europe-based entities—which impose almost identical terms and conditions—will keep it secure. Security must be a key consideration for all AI models, not just those built (or hosted) outside the US. History shows us that bad actors are often the earliest adopters of new technology, from wire fraud to phone, text and email phishing scams. In an agentic world, where AI agents have been given access to critical business information and in-house applications, the blast radius from any attack may be exponential. Think like an attacker It’s often said that the best defense is to think like an attacker. Today, that means using Agentic Warfare to comprehensively test AI-driven systems for vulnerabilities long before they see the light of day. Automated red-teaming is the new standard in testing AI with speed, complexity, and scale. At every step, security has to sit alongside performance in choosing AI, rather than coming as an afterthought when something goes wrong. As much as cost, security-to-performance will be a key metric in model and app selection; this is a one-way-door decision for safe and successful AI implementations. Interestingly, the BCG survey reports that “the intuitive, friendly feel of GenAI masks the discipline, commitment, and hard work required” to introduce AI in the workplace. It is hard work but the rewards should be significant. Just as software led to era-defining leaps in innovation and productivity, agentic AI promises great advances in all sectors—as long as security is baked in from the beginning. Donnchadh Casey is CEO of CalypsoAI. The Fast Company Impact Council is a private membership community of influential leaders, experts, executives, and entrepreneurs who share their insights with our audience. Members pay annual membership dues for access to peer learning and thought leadership opportunities, events and more.

The AI industry is growing up fast. New model releases are now a regular event and premium AI features are quickly overtaken by free or freemium alternatives.

Exhibit A: OpenAI unveiled its Deep Research tool, which can write reports on complex topics in minutes, as part of its $200-a-month Pro package, but rival Perplexity gives non-subscribers some access to its Deep Research assistant free of charge. (Yes, Google Gemini’s agentic research assistant is also called Deep Research.)

With fewer fundamental breakthroughs, the likes of OpenAI, Anthropic, and xAI are slugging it out over incremental improvements in search and reasoning performance. As AI pricing falls and performance gaps close, the focus has shifted from novelty applications to finding real business value.

It’s a new era for AI

Agentic AI is the game-changer. Gartner forecasts that 33% of enterprise software applications will include agentic AI by 2028, a drastic increase from less than 1% in 2024. Some 15% of day-to-day work decisions could be made autonomously by AI agents, hiking business productivity and freeing up workers for more strategic tasks.

It’s probably no surprise, then, that OpenAI—which famously took 4.5 years to launch ChatGPT “without any idea of who our customer was going to be,” according to CEO Sam Altman—is releasing its first-ever product roadmap. Nothing says “maturing market” like a product roadmap.

As Finn Murphy, a founder and venture capitalist, posted on X from the AI Action Summit in Paris, where the EU said it would “mobilize” €200 billion for AI investment: “It really feels like the era of interesting technical breakthroughs being announced is over and the era of policy, partnerships, and money announcements is here.”

Security matters

Growing up brings responsibilities, of course, especially at the enterprise level. Among the 1,803 C-suite executives surveyed for the Boston Consulting Group (BCG) AI Radar, published in January, 76% recognized that their AI cybersecurity measures need further improvement. If anything, that number should be closer to 100%.

Execs ranked data privacy and security as the top AI risk. Regulatory challenges and compliance also featured strongly. Their fears are not unfounded: AI applications open up a new attack surface for threat actors and security researchers have already succeeded in “breaking” all of the world-class AI models to some extent.

Still, it took the shock arrival of China’s DeepSeek to properly push AI security into the mainstream. It is notable that consumers and corporates have concerns about a Chinese entity having their data but seem content that U.S. and Europe-based entities—which impose almost identical terms and conditions—will keep it secure. Security must be a key consideration for all AI models, not just those built (or hosted) outside the US.

History shows us that bad actors are often the earliest adopters of new technology, from wire fraud to phone, text and email phishing scams. In an agentic world, where AI agents have been given access to critical business information and in-house applications, the blast radius from any attack may be exponential.

Think like an attacker

It’s often said that the best defense is to think like an attacker. Today, that means using Agentic Warfare to comprehensively test AI-driven systems for vulnerabilities long before they see the light of day. Automated red-teaming is the new standard in testing AI with speed, complexity, and scale.

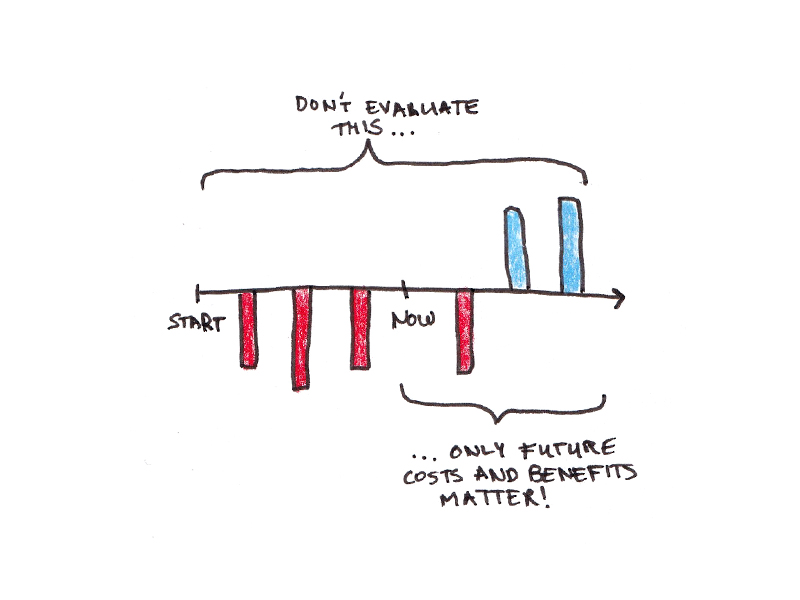

At every step, security has to sit alongside performance in choosing AI, rather than coming as an afterthought when something goes wrong. As much as cost, security-to-performance will be a key metric in model and app selection; this is a one-way-door decision for safe and successful AI implementations.

Interestingly, the BCG survey reports that “the intuitive, friendly feel of GenAI masks the discipline, commitment, and hard work required” to introduce AI in the workplace. It is hard work but the rewards should be significant.

Just as software led to era-defining leaps in innovation and productivity, agentic AI promises great advances in all sectors—as long as security is baked in from the beginning.

Donnchadh Casey is CEO of CalypsoAI.

The Fast Company Impact Council is a private membership community of influential leaders, experts, executives, and entrepreneurs who share their insights with our audience. Members pay annual membership dues for access to peer learning and thought leadership opportunities, events and more.

![How to Use GA4 to Track Social Media Traffic: 6 Questions, Answers and Insights [VIDEO]](https://www.orbitmedia.com/wp-content/uploads/2023/06/ab-testing.png)

![How Human Behavior Impacts Your Marketing Strategy [Video]](https://contentmarketinginstitute.com/wp-content/uploads/2025/03/human-behavior-impacts-marketing-strategy-cover-600x330.png?#)

![How to Make a Content Calendar You’ll Actually Use [Templates Included]](https://marketinginsidergroup.com/wp-content/uploads/2022/06/content-calendar-templates-2025-300x169.jpg?#)

![Building A Digital PR Strategy: 10 Essential Steps for Beginners [With Examples]](https://buzzsumo.com/wp-content/uploads/2023/09/Building-A-Digital-PR-Strategy-10-Essential-Steps-for-Beginners-With-Examples-bblog-masthead.jpg)