Why government’s AI dreams keep turning into digital nightmares—and how to fix that

Government leaders worldwide are talking big about AI transformation. In the U.S., Canada, and the U.K., officials are pushing for AI-first agencies that will revolutionize public services. The vision is compelling: streamlined operations, enhanced citizen services, and unprecedented efficiency gains. But here’s the uncomfortable truth—most government AI projects are destined to fail spectacularly.

The numbers tell a sobering story. A recent McKinsey analysis of nearly 3,000 public sector IT projects found that over 80% exceeded their timelines, with nearly half blowing past their budgets. The average cost overrun hit 108%, three times the average for private sector projects. These aren’t just spreadsheet problems; they’re systemic failures that erode public trust and waste taxpayer dollars.
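
It helps to be precise about what a 108% overrun means. Assuming the conventional definition (the amount by which final cost exceeds the original budget, expressed as a share of that budget), it means that on average final costs came to slightly more than twice the original budget:

$$
\text{overrun} = \frac{C_{\text{actual}} - C_{\text{budget}}}{C_{\text{budget}}} = 1.08
\quad\Longrightarrow\quad
C_{\text{actual}} = 2.08\,C_{\text{budget}}
$$

For a hypothetical project budgeted at 10 million dollars, that would mean a final bill of about 20.8 million dollars.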

When AI projects go wrong in government, the consequences extend far beyond budget overruns. Arkansas’s Department of Human Services faced legal challenges when its automated disability care system caused “irreparable harm” to vulnerable citizens. The Dutch government collapsed in 2021 after an AI system falsely accused thousands of families of welfare fraud. These aren’t edge cases—they’re warnings about what happens when complex AI systems meet unprepared institutions.

The Maturity Trap

The core problem isn’t AI technology itself—it’s the mismatch between ambitious goals and organizational readiness. Government agencies consistently attempt AI implementations that far exceed their technological maturity, like trying to run a marathon without first learning to walk.

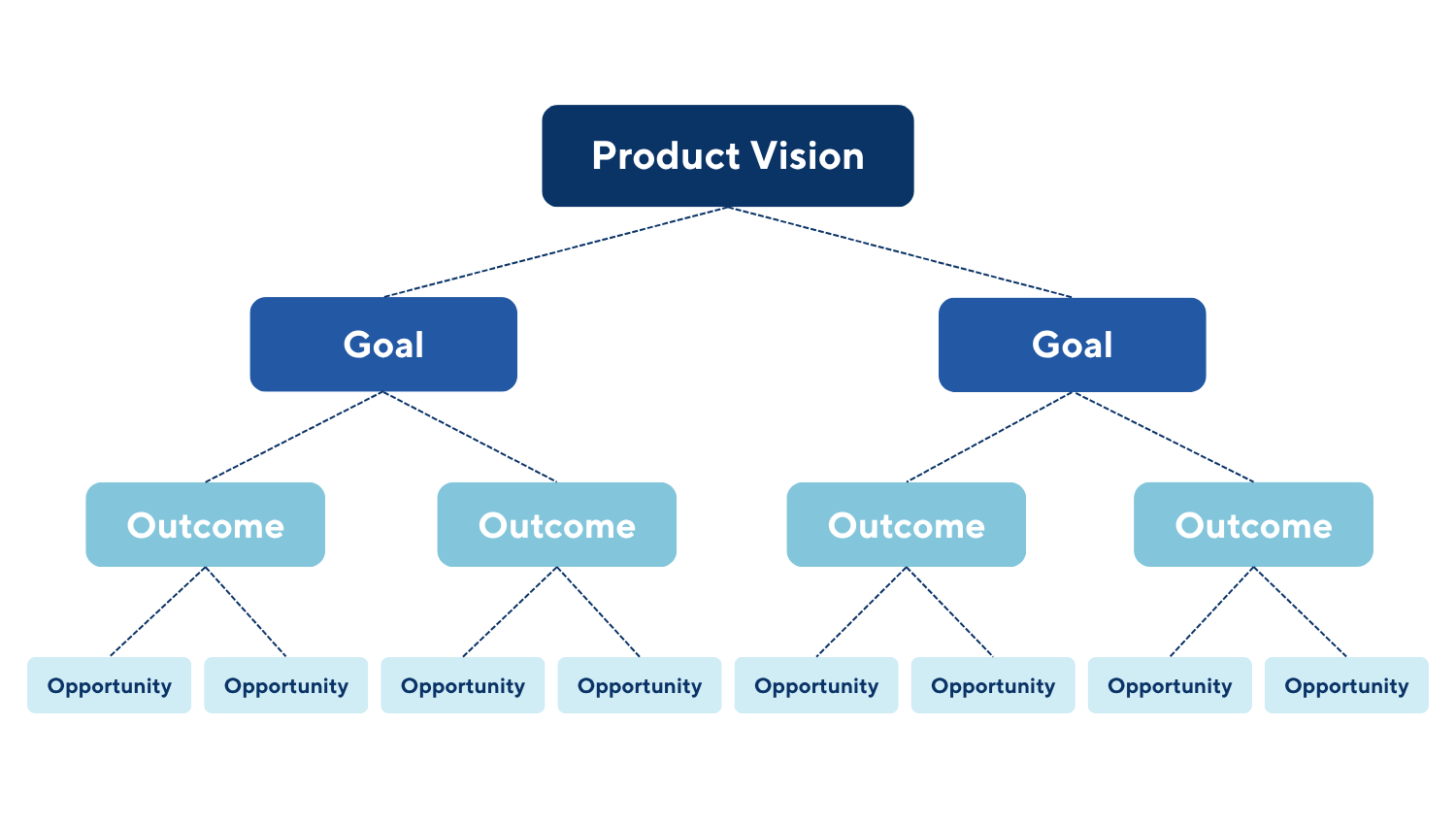

Our research across 500 publicly traded companies for a previous book revealed a clear pattern: organizations that implement technologies appropriate to their maturity level achieve significant efficiency gains, while those that overreach typically fail. Combining this insight with our practical work implementing digital solutions in the public sector led to the development of a five-stage AI maturity model specifically designed for government agencies.

Stage 1: Initial/Ad Hoc. Organizations at this stage operate with isolated AI experiments and no systematic strategy.

Stage 2: Developing/Reactive. Agencies begin showing basic capabilities, typically through simple chatbots or vendor-supplied solutions.

Stage 3: Defined/Proactive. Organizations develop comprehensive AI strategies aligned with strategic goals.

Stage 4: Managed/Integrated. Agencies achieve full operational integration of AI with quantitative performance measures.

Stage 5: Optimized/Innovative. Organizations reach full agility and influence how others use AI.

Most government agencies today operate at stage 1 or 2, but AI-first initiatives require stage 4 or 5 maturity. This fundamental mismatch explains why so many initiatives fail. Without the right cultural frameworks, technological expertise, and technical infrastructure, organization-wide transformation built around AI capabilities stands little chance of success.

Start Where You Are, Not Where You Want to Be

The path to AI success begins with brutal honesty about current capabilities. A national security agency we studied exemplifies this approach. Despite seeing enormous opportunities in large language models, its leaders recognized serious risks around data drift, model drift, and information security. Rather than rushing into advanced implementations, the agency is pursuing incremental development grounded in institutional knowledge and cultural readiness.

This measured approach doesn’t mean abandoning ambitious goals—it means building toward them systematically. Organizations must select projects that are appropriate to their maturity level while ensuring each initiative serves dual purposes: delivering immediate value and advancing foundational capabilities for future growth.
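
The selection rule described here can be made concrete. The sketch below is a hypothetical illustration, not the authors' assessment tool: it encodes the five stages as ordered levels and shortlists candidate projects whose required stage does not exceed the agency's current stage. The project names, and the stage each one is assumed to require, are invented for the example.

```python
from __future__ import annotations

from enum import IntEnum


class Maturity(IntEnum):
    """The five stages of the government AI maturity model, in order."""
    INITIAL_AD_HOC = 1
    DEVELOPING_REACTIVE = 2
    DEFINED_PROACTIVE = 3
    MANAGED_INTEGRATED = 4
    OPTIMIZED_INNOVATIVE = 5


def fits_maturity(agency_stage: Maturity, required_stage: Maturity) -> bool:
    """True when a project asks for no more maturity than the agency already has."""
    return required_stage <= agency_stage


def shortlist(agency_stage: Maturity, projects: dict[str, Maturity]) -> list[str]:
    """Return names of projects that fit the agency's current stage, in input order."""
    return [name for name, required in projects.items() if fits_maturity(agency_stage, required)]


# Hypothetical candidate projects; the required stages are illustrative assumptions.
CANDIDATE_PROJECTS = {
    "IT help-desk chatbot": Maturity.DEVELOPING_REACTIVE,
    "Equipment maintenance forecast": Maturity.INITIAL_AD_HOC,
    "AI-first benefits case management": Maturity.MANAGED_INTEGRATED,
}

if __name__ == "__main__":
    # An agency at stage 2 keeps the first two projects and defers the AI-first one.
    print(shortlist(Maturity.DEVELOPING_REACTIVE, CANDIDATE_PROJECTS))
```

The comparison itself is trivial; the hard part in practice is the honest assessment of which stage the agency is actually at.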

Three Immediate Opportunities

For agencies at early maturity stages, three implementation areas offer immediate value creation opportunities while building toward transformation:

1. Information Technology Operations

IT represents the most accessible entry point for government AI adoption. The private sector offers a road map: 88% of companies now leverage AI in IT service management, and 70% were expected to implement structured automation operations by 2025, up from 20% in 2021.

AI can transform government IT through chatbots handling common user issues, intelligent anomaly detection identifying network problems in real time, and dynamic resource optimization automatically adjusting allocations during peak periods. These capabilities deliver immediate efficiency gains while building the technical expertise and collaborative patterns needed for higher maturity levels.
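
Of these capabilities, anomaly detection is the most algorithmic. As a minimal sketch (not a description of any agency's system), a rolling z-score test flags readings that sit far outside recent behavior. The window size, the threshold, and the latency figures are illustrative assumptions; production monitoring tools typically use richer models.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(samples, window=5, threshold=3.0):
    """Yield (index, value) for samples that sit far outside recent behavior.

    A sample is flagged when it is more than `threshold` population standard
    deviations away from the mean of the previous `window` accepted samples.
    Flagged samples are kept out of the baseline so one spike does not
    inflate the statistics used to judge the next reading.
    """
    history = deque(maxlen=window)
    for i, value in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # A perfectly flat baseline (sigma == 0) gives no scale, so skip the test.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
                continue
        history.append(value)


# Hypothetical response times (ms) from a network monitor.
SAMPLE_LATENCY_MS = [20, 22, 21, 23, 22, 21, 95, 22, 20, 21]

if __name__ == "__main__":
    print(list(detect_anomalies(SAMPLE_LATENCY_MS)))  # [(6, 95)]
```

Keeping flagged readings out of the baseline is a design choice: it stops a single outage spike from widening the "normal" range and hiding the next problem.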

The challenge lies in government’s unique constraints. Stringent security requirements, along with legacy systems at agencies like the Social Security Administration and NASA, create implementation hurdles that private sector organizations rarely face. Success requires careful navigation of these constraints while building foundational capabilities.

2. Predictive Analytics

Predictive analytics represents perhaps the highest-value opportunity for early-stage agencies. Government organizations possess vast data resources, complex operational environments, and urgent needs for better decision-making—perfect conditions for predictive AI success.

The U.S. military is already demonstrating this potential, using predictive modeling for command and control simulators and live battlefield decision-making. The Department of Veterans Affairs has trialed suicide prevention programs using risk prediction algorithms to identify veterans needing intervention. Beyond specialized applications, predictive analytics can improve incident management, enable predictive maintenance, and forecast resource needs across virtually any government function.

These implementations advance AI maturity by building essential data management practices and analytical capabilities while delivering immediate operational benefits. Unlike complex generative AI systems, predictive analytics can be implemented successfully at any maturity stage using well-established machine learning techniques.
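
Forecasting resource needs is among the simplest predictive tasks. The following is a minimal sketch that assumes a short history of monthly service-request counts (the numbers are invented). It fits an ordinary least-squares linear trend and projects demand a few periods ahead. Real forecasts would also handle seasonality and report uncertainty. The point here is only how established and lightweight the basic technique is.

```python
def fit_linear_trend(values):
    """Ordinary least squares fit of values[t] = intercept + slope * t, for t = 0, 1, ..., n - 1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    # Slope = covariance(t, v) / variance(t); the 1/n factors cancel.
    cov = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    intercept = v_mean - slope * t_mean
    return intercept, slope


def forecast(values, steps_ahead):
    """Extend the fitted trend `steps_ahead` periods past the last observation."""
    intercept, slope = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + k) for k in range(steps_ahead)]


# Hypothetical monthly service-request counts for one office.
MONTHLY_REQUESTS = [410, 432, 445, 470, 481, 503]

if __name__ == "__main__":
    print([round(x) for x in forecast(MONTHLY_REQUESTS, 3)])
```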

3. Cybersecurity Enhancement

Cybersecurity offers critical immediate value, with AI applications spanning digital and physical protection domains. Modern AI security platforms process vast amounts of data across networks, endpoints, and physical spaces to identify threats that traditional systems miss—a capability that is particularly valuable given increasing attack sophistication.

Existing deployments already show this value. The Cybersecurity and Infrastructure Security Agency’s Automated Indicator Sharing program enables real-time threat intelligence exchange. U.S. Customs and Border Protection deploys AI-enabled autonomous surveillance towers for border situational awareness. The Transportation Security Administration uses AI-driven facial recognition for streamlined security screening.

While national security agencies implement the most advanced applications, these capabilities offer immediate value for all government entities with security responsibilities, from facility protection to data privacy assurance.

Building Systematic Success

Creating sustainable AI capabilities requires following five key principles:

Build on existing foundations. Leverage current processes and infrastructure while controlling implementation risks rather than starting from scratch.

Develop mission-driven capabilities. Create implementation teams that mix technological and operational expertise to ensure AI solutions address real operational needs rather than pursuing technology for its own sake.

Prioritize data quality and governance. AI systems only perform as well as their underlying data. Implementing robust data management practices, establishing clear ownership, and ensuring accuracy are essential prerequisites for success.

Learn through limited trials. Choose use cases where failure won’t disrupt critical operations, creating space for learning and adjustment without catastrophic consequences.

Scale what works. Document implementation lessons and use early wins to build organizational support, creating momentum for broader transformation.
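
The data quality principle is one of the easiest to start automating. A minimal sketch follows, assuming records arrive as Python dictionaries with a hypothetical `case_id` identifier. It counts duplicate identifiers and missing values in required fields, including a stand-in `owner` field for clear data ownership. The field names and sample records are invented, and real governance programs go much further, covering lineage, validation rules, and access controls.

```python
from collections import Counter


def data_quality_report(records, key, required_fields):
    """Summarize basic quality problems in a list of dict records.

    Reports duplicate values of the identifier field `key`, and for each
    required field the count of missing entries (absent, None, or "") and
    the share of records that have it filled in.
    """
    total = len(records)
    key_counts = Counter(r.get(key) for r in records)
    duplicate_keys = sorted(k for k, c in key_counts.items() if k is not None and c > 1)
    missing = {
        field: sum(1 for r in records if r.get(field) in (None, ""))
        for field in required_fields
    }
    completeness = {
        field: (1 - missing[field] / total) if total else 0.0
        for field in required_fields
    }
    return {
        "total": total,
        "duplicate_keys": duplicate_keys,
        "missing": missing,
        "completeness": completeness,
    }


# Hypothetical case records; "owner" stands in for a clear data owner.
CASES = [
    {"case_id": "A1", "owner": "benefits", "status": "open"},
    {"case_id": "A2", "owner": "", "status": "closed"},
    {"case_id": "A2", "owner": "benefits", "status": None},
    {"case_id": "A3", "owner": "licensing", "status": "open"},
]

if __name__ == "__main__":
    print(data_quality_report(CASES, key="case_id", required_fields=["owner", "status"]))
```

Running the same report before and after each limited trial gives a simple, repeatable signal of whether data practices are actually improving.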

The Path Forward

Government agencies don’t need to choose between ambitious AI goals and practical implementation. The key is recognizing that most transformation happens through systematic progression. While “strategic leapfrogging” is possible in some situations, it is the exception rather than the norm. By starting with appropriate projects, building foundational capabilities, and scaling successes, agencies can begin realizing concrete AI benefits today while developing toward their longer-term transformation vision.

The stakes are too high for continued failure. With 48% of Americans already distrusting AI development and 77% wanting regulation, government agencies must demonstrate that AI can deliver responsible, effective, and efficient outcomes. Success requires abandoning the fantasy of overnight transformation in favor of disciplined, systematic implementation that builds lasting capabilities.

The future of government services may indeed be AI-first, but getting there requires being reality-first about where agencies stand today and what it takes to build toward tomorrow.

(This article draws on the cross-disciplinary expertise and applied research of Faisal Hoque, Erik Nelson, Professor Thomas Davenport, Dr. Paul Scade, Albert Lulushi, and Dr. Pranay Sanklecha.)
