An Interview with Meta CEO Mark Zuckerberg About AI and the Evolution of Social Media

An interview with Meta CEO Mark Zuckerberg about Llama and the AI opportunity, the evolution of social media, and what it means to connect.

Good morning,

Today’s Stratechery Interview is with Meta CEO Mark Zuckerberg, who obviously needs no introduction; I interviewed Zuckerberg previously in October 2021 and October 2022.

Some quick context about this interview: I spoke to Zuckerberg in person at Meta Headquarters on Monday afternoon (which makes this one worth listening to), before the LlamaCon keynote on Tuesday and Meta’s earnings on Wednesday; I was briefed about some of the LlamaCon announcements, and had access to the new Meta AI app. In addition, just before the interview I was informed about Zuckerberg’s interview with Dwarkesh Patel, which is very centered on discussions of AI models, competitors, etc.; I am happy to point you there for more in-depth discussions about Llama model specifics that we didn’t touch on in this interview.

What we did discuss were broader themes that place Llama in Meta’s historical context. We cover Meta’s platform ambitions over the last two decades, the evolution of social networking, and how Zuckerberg has changed his thinking about both. We discuss the Llama API and the tension between GPU opportunity cost and leveraging training costs, and why Zuckerberg thinks that the latter is worth paying for, even if the company has to go it alone. We also discuss why Meta AI may actually bring many of Zuckerberg’s oldest ideas full circle, how that ties into Reality Labs, and why Meta ended up being the perfect name for the company.

As a reminder, all Stratechery content, including interviews, is available as a podcast; click the link at the top of this email to add Stratechery to your podcast player.

On to the Interview:

An Interview with Meta CEO Mark Zuckerberg About AI and the Evolution of Social Media

This interview is lightly edited for clarity.

Topics: From f8 to LlamaCon | The Llama API | Meta’s AI Opportunity (Part 1) | Social Networking 2.0 | Meta’s AI Opportunity (Part 2) | The Meta AI App | Tariffs and Reality Labs

From f8 to LlamaCon

Mark Zuckerberg, welcome back to Stratechery.

Mark Zuckerberg: Thanks for having me.

So the occasion for this interview is LlamaCon, a new Meta developer conference. Before I get to that, I wanted to touch on the history of Facebook conferences. So there was F8 from 2007 to 2019, skipped a couple years, prominent announcements included the original Facebook platform, the Open Graph, Parse, there’s a whole bunch of them. It’s interesting though, the vast majority of these are either dead or massively constrained according to the original vision. If you think about this — I dropped this on you out of the blue…

MZ: It’s a great start to the conversation.

Is that a disappointment or is that a lesson learned? And how do you think about that?

MZ: No. Well look, the original Facebook platform was something that really just made sense for web, and it was sort of a pre-mobile thing. As the usage transitioned from desktop web to mobile, Apple basically just said, “You can’t have a platform within a platform and you can’t have apps that use your stuff”. So that whole thing, which had grown to be a meaningful part of our business — I think by the time that we had our IPO in 2012, I think games and apps were about 20% of our business — but that basically just didn’t have much of a future. So we played with different versions of it around Connect and Sign In to different apps and—

Yeah, the one that’s definitely still around is Sign In with Facebook.

MZ: Yeah, and there’s some connectivity between that and developers wanting to get installs for their apps and doing things like that. But it just got very thin, and it was one of these things that I think it’s really just an artifact of Apple’s policies that I think has led to this deep bitterness around not just this, but a number of things where they’ve just said, “Okay, you can’t do these things that we think would be valuable”, which I think to some degree contributes to some of that dynamic between our company and theirs. I think that’s unfortunate. I think a more open mobile—

But there’s a good argument, I made it back then I think in 2013, that this is a great thing for you, it forced you to become what you became.

MZ: Well, maybe I think we would’ve become that and also done more. The number of times when we basically I think could have built different experiences into our apps, but were just told that we couldn’t, I think it’s hard to look back and think that that created value for the people we’re serving or who we’re building for. But anyhow, fast-forward to Llama…

Yeah, well there is Meta Connect. Is the metaverse still a thing?

MZ: Yeah, no, absolutely. We wanted a whole event where we could talk about all of the VR and AR stuff that we wanted to do.

Yeah, that one’s straightforward, that’s clearly a platform.

MZ: Part of the reason why we wanted to do LlamaCon is—

Yeah, you’re anticipating my question. Where is LlamaCon now, the new developer conference?

MZ: They’re just different products. Connect around AR and VR attracts a certain type of developer and a certain type of people who are interested in that, and obviously everything is sort of AI going forward too. Like the glasses, the Ray-Ban Meta glasses are AI glasses, but it’s a certain type of product. And for people who are primarily focused around building with Llama, we thought it would be useful to have a whole event that was just focused on that, so we made LlamaCon.

It is actually interesting going through the history with F8 and the platform, because obviously a big part of Llama is that it’s open source, and a big part of why we believe in building an open platform is partially the legacy of what’s happened with mobile platforms and all of, from our perspective, pretty arbitrary restrictions that have been placed on developers. I think that’s one of the reasons why developers really want to use open models.

In some ways, it has historically been easier to just get an API from OpenAI or Anthropic or someone, but then you have to deal with the fact that they can just change the API on you overnight and then your app changes, and they can censor what you’re doing with your apps and if they don’t like a query that you’re sending them, then they can just say, “Okay, we’re not going to answer that”, you can’t customize their model as much. So there are all these things that open source allows there that I think we’ve become even more attuned to because of the previous closed platforms that we’ve built on top of that have made us even more wanting to invest in that.

But I think that’s why open source AI is taking off in such a huge way. And of course now it’s not just Llama, you have all these different Chinese models too, DeepSeek and others. I predicted that 2025 was going to be the year that open source became the largest type of model that people are developing with, and I think that’s probably going to be the case. That’s kind of how we’re thinking about this overall.

The Llama API

Well, one announcement, which at least when you were talking to me before, you insist it’s small, but I’m not sure it’s going to be taken that way, is this Llama API, what is it and why come out with it now?

MZ: Oh, I don’t think it’s small. It’s not necessarily a business that we’re trying to build.

Got it.

MZ: Which I think is the main thing that people assume whenever you launch a paid API. The main thing that we hear, people love open source for the reasons that I just said, where they want something that they control, that they can customize, that no one is going to take away from them, that they can use however they want, it’s more efficient, it’s cheaper. All these things that are values. The downside of open source until today is that—

No one actually wants to host it.

MZ: Is that it takes work to host, right? Yeah. The downside is that it’s much simpler to just make an API call to some established service. Now, there are, of course, a bunch of companies that have made their businesses hosting different models, including open source models, and some of those, I think, are better than others. We went through the Llama 4 launch recently, I think we learned a bunch about how to roll that out. But I think one of the things that didn’t go well was just because we dropped the model and a bunch of the API providers kind of had a bunch of bugs in their implementation, so a lot of the first tests that people had with Llama 4 were using these external API providers that had issues with the implementation.

That was pretty recently, though. Did you make the decision that quickly that actually, “No, we need to have a reference API here”?

MZ: No, I was more using that as an example. But even as far back as Llama 3, you can find a lot of people talking about this online: “Okay, I want an API provider who’s just providing an unquantized version of the 405B. It’s really hard for me to tell what types of quantization or what kind of shortcuts different API providers are taking, the quality is variable, we just want a good source”. So I think that having a broad ecosystem of API providers is good, and a lot of them do really interesting things, like Groq, for example. Basically with their vertical integration of their building custom silicon to do low latency, it’s really a compelling thing.

You were talking about Groq, the chip, here, not Grok the AI model.

MZ: Yeah, Grok is also interesting, [xAI Founder and CEO] Elon [Musk]’s thing, but I’m talking about the chip company. Their business today, they build the chips, they build a vertically integrated service that offers a really low latency API, it’s really cool. I think having an ecosystem where there are companies like that that can use open source models is great.

But I guess just to probably give the topic sentence that I should have given a couple minutes ago when you asked the question, the goal of the Llama API is to provide a reference implementation for the industry. We’re not trying to build a huge business around this, we’re basically trying to make a very simple API that is like vanilla, and people know that it is the model that we intended to build, and that it works, and that you can just drop in your API call for the OpenAI API or whatever else you were using, and you just replace that with the URL for this and that works. Also there isn’t a huge markup, we’re basically offering this at basically our cost of capital.

Well, if there isn’t a huge markup, this sounds like it could become a pretty big business, then.

MZ: Well, it won’t become very profitable for us.

Yeah, I know. You’re out there, “Just maybe this little thing, we’re not going to charge very much for it”, I’m not sure those two things are in line.

MZ: What do you mean?

Well, if you’re not charging very much for it, why doesn’t everybody just go use yours instead of use it from another cloud provider?

MZ: Well, in theory, other companies that have this be their whole business should be able to make more interesting and valuable offerings. So we were talking a second ago about Groq that is building custom silicon to do inference for latency-specific optimization.

Right. But a lot of Llama use is on AWS, for example.

MZ: Sure. And AWS obviously has the value of, they have this whole breadth of services that you are already using for different stuff if you’re an AWS customer.

So if you’re just building an app and you don’t have a lock-in to any cloud, this will be the easiest, cheapest solution?

MZ: Yeah. If you want to play with something and you want to know, “Okay, I want to get started with the Llama 4 models, what’s the reference implementation that I know is going to work?”, you can come to this, it will work. And then over time, I would expect that people will play around and optimize for their own use across different services, hosting themselves, whatever kinds of different things once they get to scale. But I think having a reference implementation that’s easy to use is something that the open source ecosystem needs.

If someone gets on there and blows up, are you going to say, “You’re getting a little too big, you need to go somewhere else”?

MZ: I don’t know, we haven’t thought that through that much.

(laughing) TBD?

MZ: Yeah, we haven’t thought that through that much. I think one of the things for us in this is that it’s like, “Why haven’t we built an API yet as a business?”.

Yeah, that’s my next question.

MZ: Why haven’t we built a cloud business overall?

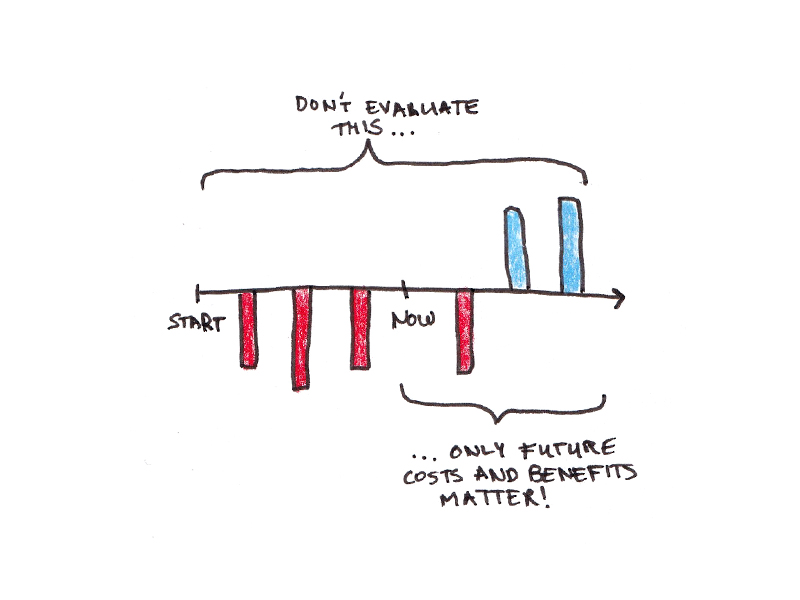

Especially if you’re going to look at this and say, “Yeah, you need to gain leverage on your training costs, you’re spending all this money to train Llama, you need to start making money in more ways from that investment”.

MZ: Yeah, I think the dynamic around our business that has been interesting is that it has always been a higher marginal return to allocate incremental GPUs to either better recommendations of content and feeds or ads.

Yeah, that’s my view. I’ve defended you not having an API for that very reason.

MZ: Exactly. So now in this case, we think it’s valuable if Llama grows, and we think that having a reference implementation API is a valuable thing for it growing. So we think that this is a thing that needs to exist, but that economics is why I’m not looking at this as a large business. I think if this ends up consuming a massive, massive number of GPUs, you can make an argument that if it’s profitable, then that’s good and we should just do that and all the recommendation stuff that we do.

Right, there’s opportunity costs these days.

MZ: And obviously you can’t ever perfectly forecast how many GPUs you should build. So in practice, we’re always making these calculations internally, which is like, “All right, should I give the Instagram Reels team more GPUs or should I give this other team more GPUs to build the thing that they’re doing?”, and I would guess that having an API business is going to be pretty low on the list of things that we want to pull recommendations service GPUs away from towards. But that said, we have a huge fleet, right? Gigawatts of data centers and all that. So having a very small amount of that go towards a reference implementation to help make it simple for people to start using open source AI seems like a good thing to do. But again, that’s kind of the big picture.

If someone gets really big, there might be some conversations to be had.

MZ: We’ll see, we’ll see.

We’ll get there when we get there.

MZ: I think in general in this kind of business, you’re happy when people grow big and scale.

No, of course. It’s a good problem to have. I just think it’s really interesting to think about this cost issue where you are talking about the concern and why I share that concern is the inference. You can use those GPUs for your own usage, versus them, so there’s a real trade-off here, but the other issue that I just mentioned before is the cost of the training, and you’re spending billions of dollars to train a model, how do you maximize your return on that training? That’s why a lot of investors, I think, like the idea of you doing an API. Another option that’s been rumored out there, lots of other companies are finding benefit from Llama, should they be contributing more to training? Is that something that you are looking to pursue? Are there going to be any takers?

MZ: We’ve talked to some folks about that, and so far it hasn’t come together. It may as the cost keeps scaling, but so far it actually seems like the number of efforts actually are still proliferating.

Right.

MZ: So companies that I would’ve expected would’ve wanted to kind of get on board with Llama as an open source standard and then be able to save costs have actually turned around and spun up new efforts to build up their own models, so we’ll see how that turns out. I’d guess that in the next couple of years, the training runs are going to be on gigawatt clusters, and I just think that there will be consolidation.

People are going to bow out at some point.

MZ: But look, I’m doing our financial planning assuming that we’re paying the cost of this, so it’s upside if we end up being able to share it with other people, but we don’t need it.

Right.

MZ: I think that that’s one of the things that is kind of a positive for us. And I can kind of take you through the business case, if that’s helpful on this.

Meta’s AI Opportunity (Part 1)

Well, I do want to ask about your open source strategy generally. On one hand, as an overall observer of the industry, I’m really quite grateful for it, and I think you really opened up the floodgates for this, I think overcame some perhaps well-intentioned, but misplaced reticence about the broad availability of these models. On the other hand, large companies have been major contributors to open source, including Facebook, including Meta. You’ve compared Llama to the Open Compute Project. In that case, you had data centers all over the world adopting your standards, you had hardware makers building to them, all of which accrued to your bottom line at the end of the day and to your point, you’re not a data center provider, so it’s all gravy. I guess the question for Llama is, what are the economic payoffs from this open sourcing, particularly when you think about, “Well, maybe we do want to tune it to ourselves”. Is it just a branding thing? Is it just that researchers like that it’s open source? Particularly the economic part of it.

MZ: The decision to open source is downstream from the decision to build it, right? We’re not building it so that we can open source it for developers, we’re building it because it’s a thing that we believe that we need in order to build the services that we want. And then, there’s this whole question of like, “Do you need to be at the frontier? Can you be six months behind or whatever?”, and I believe that over time, you want to be at the frontier. Especially one of the dynamics that we’re seeing around — there are a couple of things. One is that you’re starting to see some specialization, so the different companies are better at different things and focusing on different things, and our use cases are just going to be a little bit different from others. I think at the scale that we operate, it just makes sense to build something that is really tuned for your usage.

What are the specifics that are important for you?

MZ: This is going to take us a little afield from the question that I was just answering.

That’s fine.

MZ: I basically think that there are four major product and business opportunities that are the things that we’re looking at and I’ll start from the simplest and probably the ones that are the easiest to do to the things that are further afield from where we are today. So most basic of the four. Use AI to make it so that the ads business goes a lot better.

Yeah.

MZ: Improve recommendations, make it so that any business that basically wants to achieve some business outcome can just come to us, not have to produce any content, not have to know anything about their customers. Can just say, “Here’s the business outcome that I want, here’s what I’m willing to pay, I’m going to connect you to my bank account, I will pay you for as many business outcomes as you can achieve”. Right?

MZ: Yeah, it is basically like the ultimate business agent, and if you think about the pieces of advertising, there’s content creation, the creative, there’s the targeting, and there’s the measurement and probably the first pieces that we started building were the measurement to basically make it so that we can effectively have a business that’s organized around when we’re delivering results for people instead of just showing them impressions.

Outcomes, yeah.

MZ: And then, we start off with basic targeting. Over the last 5 to 10 years, we’ve basically gotten to the point where we effectively discourage businesses from trying to limit the targeting. It used to be that a business would come to us and say like, “Okay, I really want to reach women aged 18 to 24 in this place”, and we’re like, “Okay. Look, you can suggest to us…”

Right. But I promise you, we’ll find more people at a cheaper rate.

MZ: If they really want to limit it, we have that as an option. But basically, we believe at this point that we are just better at finding the people who are going to resonate with your product than you are. And so, there’s that piece.

But there’s still the creative piece, which is basically businesses come to us and they have a sense of what their message is or what their video is or their image, and that’s pretty hard to produce and I think we’re pretty close.

And the more they produce, the better. Because then, you can test it, see what works. Well, what if you could just produce an infinite number?

MZ: Yeah, or we just make it for them. I mean, obviously, it’ll always be the case that they can come with a suggestion or here’s the creative that they want, especially if they really want to dial it in. But in general, we’re going to get to a point where you’re a business, you come to us, you tell us what your objective is, you connect to your bank account, you don’t need any creative, you don’t need any targeting demographic, you don’t need any measurement, except to be able to read the results that we spit out. I think that’s going to be huge, I think it is a redefinition of the category of advertising. So if you think about what percent of GDP is advertising today, I would expect that that percent will grow. Because today, advertising is sort of constrained to like, “All right, I’m buying a billboard or a commercial…”

Right. I think it was always either 1% or 2%, but digital advertising has already increased that.

MZ: It has grown, but I wouldn’t be surprised if it grew by a very meaningful amount.

I’m with you. You’re preaching to the choir, everyone should embrace the black box. Just go there, I’m with you. So what’s number two?

MZ: Number two is basically growing engagement on the consumer surfaces and recommendations. So part one of that is just get better at showing people the content that’s out there, that’s effectively what’s happening with Reels. Then I think what’s going to start happening is that the AI is not just going to be recommending content, but it is effectively going to be either helping people create more content or just creating it themselves.

You can think about our products as there have been two major epochs so far. The first was you had your friends and you basically shared with them and you got content from them and now, we’re in an epoch where we’ve basically layered over this whole zone of creator content. So the stuff from your friends and followers and all the people that you follow hasn’t gone away, but we added on this whole other corpus around all this content that creators have that we are recommending.

How do you feel about that? Because I wrote back in 2015 that that’s what you needed to do. But then it was like, “No, we connect people and that’s how we figure things-”

MZ: Let me finish answering this and then can we come back to that?

Okay, I want to get into the psyche here.

MZ: Well, the third epoch is I think that there’s going to be all this AI-generated content and you’re not going to lose the others, you’re still going to have all the creator content, you’re still going to have some of the friend content. But it’s just going to be this huge explosion in the amount of content that’s available, very personalized and I guess one point, just as a macro point, as we move into this AGI future where productivity dramatically increases, I think what you’re basically going to see is this extrapolation of this 100-year trend where as productivity grows, the average person spends less time working, and more time on entertainment and culture. So I think that these feed type services, like these channels where people are getting their content, are going to become more of what people spend their time on, and the better that AI can both help create and recommend the content, I think that that’s going to be a huge thing. So that’s kind of the second category.

I’ll answer your question before we go to the third category.

Social Networking 2.0

How do you feel about Facebook being more than just connecting to your friends and family now?

MZ: I think it’s been a good change overall, but I think I sort of missed why. It used to be that you interacted with the people that you were connecting with in feed, like someone would post something and you’d comment in line and that would be your interaction.

Today, we think about Facebook and Instagram and Threads, and I guess now, the Meta AI app too and a bunch of other things that we’re doing, as these discovery engines. Most of the interaction is not happening in feed. What’s happening is the app is like this discovery engine algorithm for showing you interesting stuff and then, the real social interaction comes from you finding something interesting and putting it in a group chat with friends or a one-on-one chat. So there’s this flywheel between messaging which has become where actually all the real, deep, nuanced social interaction is online and the feed apps, which I think have increasingly just become these discovery engines.

Did you have this vision when you bought WhatsApp? Or did you back into it?

MZ: I thought messaging was going to be important. Honestly, part of the reason why we were a little bit late to competing with TikTok was because I didn’t fully understand this when TikTok was first growing. And then by using it, I was like, “Oh, okay, this is not just video, this is a complete reconsideration of the way that social media is formulated”. Where just going forward, people are not primarily going to be interacting in line, it’s going to be primarily about content and then, most of the interaction is going to be in messaging and group chat.

There’s a line there that I criticized you for at the time, I think you’ve backed away from it, something about being your whole self everywhere. And one of my takes on group chats, in general, is it lets you be different facets of yourself with different groups of people as appropriate.

MZ: Yeah, totally. Messaging, I think, does this very well. One of the challenges that we’ve always had with services like Facebook and Instagram is you end up accumulating friends or followers over time and then, the question is, who are you talking to there?

Right.

MZ: So if you’re a creator, you have an audience that kind of makes sense. But if you’re a normal person just trying to socialize—

You really don’t want to go viral. I can promise you that.

MZ: No, no. What I’m saying is you basically want to — people want to share very authentically and you’re just willing to share more in small groups. So the modern day configuration of this is that messaging is a much better structure for this, because you don’t just have one group that you share with. You have all these different group chats and you have all your one-on-one threads. So it’s like I can share stuff with my family, I can share stuff with people I do sports with.

You were worried about Google Circles and then you ended up owning it in the end.

MZ: It just ended up being in messaging instead of in a feed. Which I think gets us to, if you still want to go through this…

Meta’s AI Opportunity (Part 2)

MZ: The third big AI revenue opportunity is going to be business messaging. Because as messaging gets built up as its own huge social ecosystem, if you think about our business today, Facebook revenue is quite strong, Instagram revenue is quite strong, WhatsApp is on the order of almost, I think, 3 billion people using it — much less revenue than either Facebook or Instagram.

It just stores the soul of Facebook, per our conversation.

MZ: But I think between WhatsApp and Messenger and Instagram Direct, these messaging services, I think, should be very large business ecosystems in their own right. And the way that I think that’s going to happen, we see the early glimpses of this because business messaging is actually already a huge thing in countries like Thailand and Vietnam.

Right. Where they have workers who can afford to do the messaging.

MZ: Low cost of labor. So what we see is Thailand and Vietnam are, I think last time I checked, this may not be exactly right, but I think it was something like they were our number 6 and 7 countries by revenue or something, they were definitely in the Top 10. If you look at those countries by GDP, they’re in the 30s. So it’s like, “Okay, what’s going on?”. Well, it’s that business messaging — I mean, I saw some stuff that I think it’s something like 2% of Thailand’s GDP goes through people transacting through our messaging apps.

Yeah. This is one of those things where my being in Asia, I’ve seen this coming for ages and it feels like it’s taken forever to actually accomplish.

MZ: So what will unlock that for the rest of the world? It’s like, it’s AI making it so that you can have a low cost of labor version of that everywhere else. So you have more than a hundred million small businesses that use our platforms and I just think it seems pretty clear that within a few years, every business in the world basically has an email address, a social media account, a website, they’re going to have an AI that can do customer support and sales. And once they have that and that’s driving conversions for them — first of all, we can offer that as a product that’s super easy to spin up and that it’s going to be free. We’re not even going to charge you until we start driving incremental conversions. Then like, “Yeah, you just stand this thing up and we’ll just start sending sales your way and you can pay us some fee for the incremental sales”.

Once you start getting that flywheel going, the demand that businesses are going to have to drive people to those chats is going to really go up. So I think that’s going to be how we’re going to monetize WhatsApp, Messenger, Instagram Direct, and all that. So that’s the third pillar.

And then, the fourth is all the more novel, just AI first thing, so like Meta AI. Eventually, if that grows, it’ll be recommending products, people will be paying for subscriptions and things like that. So Meta AI is around a billion people using it monthly now.

I want to ask about Meta AI, but it feels like there’s two more pillars or potential pillars from my perspective. We mentioned the metaverse earlier, I feel like generative AI is going to be the key to the metaverse. Because even just with gaming on a screen we’ve hit a limit on assets, assets just cost too much to create, so that’s going to solve problems there.

Then, we also have, it just feels like this entire canvas of people who are in these apps and experiencing it, like I feel like every pixel could be monetized. You see an influencer, every single item in that you could recognize it, know it, have a link to it, whoever the purveyor of that product is signed up. The takeaway here is I feel like — and this is a compliment, not an insult — you’re the Microsoft of consumer. In that Microsoft just wins continuously, because they own that distribution channel and they have that connection to everyone, you own this distribution. To your point, more free time, people spending more time in these apps, there’s so many ways to do this. Why do you also need a chatbot and a dedicated app for that?

MZ: Well, I guess if you look at the four categories that we just talked through of the big business opportunities, it’s increasing the ad experience, increasing the consumer experience on engagement, business messaging, which is basically going to build out the business around all our messaging services to the level that we’ve built with Facebook and Instagram. And then, the fourth is just the AI-native thing. So I mentioned Meta AI, because it’s the biggest, it has about a billion people using it today.

And you have a new app.

MZ: Well, the billion people using it today are across the family of apps, but now, we have the standalone app too. So for people who want that, you can have that. But it also includes stuff like creating content in the metaverse, it’s all the AI-native stuff. So when we’ve done our financial planning, we don’t need all of those to work in order for this to be a very profitable thing. If we really hit it out of the park on two or three of those, we’re in pretty good shape, even with the massive cost of training.

But I think that this gets to the question around, in order to really do the world-class work in each of those areas, I think you want to build an end-to-end experience where you are training the model that you need in order to have the capabilities that it needs to deliver each of those things. In all the experience I’ve had so far, you really just want to be able to go all the way down the stack. Meta’s a full-stack company, we’ve always built our own infrastructure and we’ve built our own AI systems, we built our own products.

I’m on board with doing your own model. Is there a bit where because it became so popular as an open source project and you’re here at LlamaCon and now, you have developers saying, “Can you make your model do this?”, and you’re like, “Well, we’re actually doing it to…”?

MZ: Oh, I see. Yeah, I think that that’s going to be an interesting trade-off over time is we definitely are building it first and foremost for our use cases, and then we’re making it available for developers as they want to use it. The Llama 4 Maverick model was not designed for any of the open source benchmarks, and I think that was some of the reason why when people use it, they’re like, “Okay, this feels pretty good”, but then on some of the benchmarks, it’s not scoring quite as high, but it is a high quality model.

Well, if you used the right model, it might’ve scored high.

MZ: What’s that?

If you use the right model, it might’ve scored high.

MZ: What do you mean?

Well, there was a little bit of a controversy about a model that was trained specifically for a test.

MZ: Oh, well, that’s actually sort of interesting. One of the things that we designed Llama 4 to be able to do is be more steerable than other models because we have different use cases. We have Meta AI, we’re building AI Studio, we want to make it so that you can use it for business messaging, all these things. So it is fundamentally a more adaptable model when it was designed to be that, than what you can do with taking something like GPT or Claude and trying to fine-tune it to the extent that they’ll let you to do a different thing.

I guess there was a team that built, steered a version of it to be really good at LMArena and it was able to do that, because it’s steerable. But then I think the version that’s up there now is not optimized for LMArena at all, so it’s like, “Okay, so it scores the way that it does”.

But anyway, it’s a good model. The point that you are making I think is right, that as we design it for our own uses, there are some things that open source developers care about that we are not going to be the purveyor of. But part of the beauty of it being open source is other people can do those things. Open source is an ecosystem, it’s not a provider, so we are doing probably the hardest part of it, in terms of taking these very expensive pre-training runs and doing a lot of work and then making that available and we’re also standing up infrastructure to have a reference implementation API now, but we’re not trying to do the whole thing. There’s a huge opportunity both for other companies to come do that and I expect that, just like with Linux, there were all these other projects that emerged around it to build up all the other functionality, and drivers, and all that different stuff that was necessary for it to be useful for all the things that developers wanted. That’ll exist with Llama, too.

The Meta AI App

Why do you think it’s important to have the Meta AI app?

MZ: Well, I think some people just want to use it as a standalone app.

What do you think they want to use it for? Is this a do homework app? I find that your observation about more free time and that’s something you can fill, I agree, and in some respects I feel like it’s been a journey to lean into being the entertainment company and being okay with that, and maybe people will do their homework somewhere else and when they’re done with their homework, then they’ll come play with you.

MZ: Yeah. I think that there is part of that that I think is probably right. I think AI is by definition a general technology, so I think you want to be careful about trying to design too narrow of an experience, because it’s kind of like web search, the vertical search engines never quite took off because people just wanted a general thing, so I think you want to be careful about that.

But back to your question about what are we optimizing for, we want Meta AI to be your personal AI. It’s very personalized, in addition to things like remembering what you’ve talked to it about, which is I think going to be an industry standard feature, Meta AI just knows stuff about you from using our apps. One thing that I think, a lot of people are fascinated to basically understand how the feed algorithms in Instagram and Facebook, what they know about them, and talk to the algorithm. I think that this is a really fascinating thing to explore is the ability to ask the algorithm what it knows about you, and give it feedback, and talk to it and do that in a natural way.

I think text is useful because it’s inherently discreet, you’re not talking out loud, but a lot of people really prefer to use voice. It’s much more natural, especially for multi-turn interactions, much faster for a lot of people than typing. So we’ve designed the experience to be fundamentally personalized and fundamentally more leaning into voice, but also, as you say, embracing the fun parts of it. That creating content and seeing what other interesting things people are doing with Meta AI is just so like an entertaining thing.

So, I’ll be able to ask this AI, “Why am I seeing all these videos of XYZ”?

MZ: Yeah. I mean, you can ask it what it knows about you and it’ll explain it to you.

Here’s a broader question. I was asking you about Facebook starts out with connection, connecting with friends and family, and then you’ve had to move to this world of the best user-generated content under some competitive pressure, also you’re an entertainment app. Is there a bit where you’re full circle in a way where it’s about connection, but you’re now connecting to AI? That’s where Facebook — it was always the individualized feed and now it’s taken to its logical conclusion?

MZ: Well, I do think that as a company, we are probably pretty attuned to the problem of people. Generally, people have a demand for wanting to express themselves, for wanting to feel understood, for wanting to feel a sense of connection, for not wanting to feel alone, and I think that those are things that we’ve delivered products over a 20-year period that have been very effective on.

Going forward, I think one of the interesting questions is, “How does AI fit into that?”. There’s an interesting sociological finding that the average American has fewer than three friends and the average American would like to have more than three friends. So, now, ideally, you would just make it so they can connect to the right people and that’s obviously something that we try to help people do. When they’re not physically together, you can stay connected through our apps, you can keep in touch with people, you can meet new people. But I do think that going forward there are going to be dynamics where you interact with different people around different things.

I personally have the belief that everyone should probably have a therapist, it’s like someone they can just talk to throughout the day, or not necessarily throughout the day, but about whatever issues they’re worried about and for people who don’t have a person who’s a therapist, I think everyone will have an AI. And all right, that’s not going to replace the friends you have, but it will probably be additive in some way for a lot of people’s lives. I think in some way that is a thing that we probably understand a little bit better than most of the other companies that are just pure mechanistic productivity technology, how to think about that type of thing.

I think we also understand a little bit better some of the pitfalls and how do you make it so it can be additive to your social interactions, instead of negative. I think one of the things that I’m really focused on is how can you make it so AI can help you be a better friend to your friends, and there’s a lot of stuff about the people who I care about that I don’t remember, I could be more thoughtful. There are all these issues where it’s like, “I don’t make plans until the last minute”, and then it’s like, “I don’t know who’s around and I don’t want to bug people”, or whatever. An AI that has good context about what’s going on with the people you care about, is going to be able to help you out with this.

In a good personalized AI, it’s not just about knowing some basic things about what you’re interested in, a good assistant or good personalization, it’s about having a theory of mind for how you think about stuff. I mean, this is what we do with all of our friends. It’s like we don’t just kind of go, “Okay, here’s my friend Bob and he likes whatever”, you have a deep understanding of what’s going on in this person’s life and what’s going on with your friends, and what are the challenges, and what is the interplay between these different things.

Will Bob’s AI be able to talk to your AI and smooth over any issues?

MZ: Well, I think that the exact API on that probably needs to be figured out, because there’s a lot of privacy sensitivity. And I mean, this is true in human relationships, and there’s a lot of questions when you’re connecting with another person or you’re trying to help people work through an issue of what context is shared and people have to be discreet about different things and we’ll need to develop the AI to do that, too. But I think before you even get to that, I think just developing an AI that has a coherent theory of mind, and understanding of your world, and what is going on in your world in a pretty deep way, not a just very surface way of, “He likes MMA”, but, “What’s really going on with him?” — I think that that’s going to be really fundamental.

And you think you can do this just fundamentally better than anyone else can?

MZ: Probably, yeah. Obviously, I think to some degree, I’m not even sure that the other companies are trying to do this, because I think that they’re much more focused on-

My ongoing joke has been the people building AI are the least suited to figure out the use cases for AI in many respects.

MZ: Look, I think the most obvious ones are around productivity. I think to some degree you see Google, and you see OpenAI, and those companies are going after that, it seems like Anthropic is really focused on building the software agent. I think that those are going to be massive businesses or whoever wins in those areas at least. I am not making a characterization about each one of those individual companies, which has its own strengths and weaknesses.

But just like the Internet brought us increases in productivity, but also increases in connectivity and increases in entertainment, AI will do all of that too. This doesn’t just bring us one thing, it transforms everything. So, yeah, I think that there’s a lot of focus here, there are going to be the big companies who have a lot of technology and capital to bring to bear, there are going to be a lot of new startups. This is something that I’ve lived and breathed for a long time is, “How do you build technology at the intersection of deep technology and helping people connect?”.

I think it’s very compelling and the way you said it taps into what you’ve been as a company, but it does feel like there’s always been a tension between what you’ve been as a company, even going back to our discussion about going to mobile and having to just be an entertainment app, not being able to be a platform, what you’ve been and what you want to be, Mark wants to have a platform. Well, you’re at a developer conference.

MZ: You don’t always get to do what you want! I think if you only follow the market, then I think that that has a way of not being particularly interesting over time, I think you need to take some novel bets.

I think it’s quite novel, “We’re back to connecting, we’re just going to connect you with an AI”.

MZ: Yeah. I think that’s one of the things that I think could be fascinating. But no, obviously, the peril of leadership and trying to take new bets is that they don’t always work. It’s kind of like baseball, you don’t need your things to work even half the time in order to build something amazing, you just need to be some combination of better than everyone else and hitting the ball further when you do hit it. That analogy is somewhat strained, but you kind of get what I’m saying.

I get it. You’re in the Hall of Fame if you fail two-thirds of the time.

MZ: Yeah. Obviously, over the last 20 years, there have been a lot of things that I wish would’ve gone a different direction than they went and bets that didn’t work out. But I think in the face of that, having the conviction to continue doing interesting things, I think is part of what the fun of this whole thing is.

There is a question, why do you keep doing this? And you kind of look back, I remember back in 2017, you’re like, “We’re going to actually take down video a bit in our apps because we want people to feel good about this and to connect with people”, and then today we’re like, “Oh, there’s video everywhere and it’s getting bigger and bigger”.

MZ: Yeah. There were a lot of mistakes that I feel like I made during that period, in terms of deferring to some so-called experts about what was valuable for people. And look, obviously, there’s research that’s helpful, but one of my takeaways from that period is that by and large people are smart, they know what is valuable in their lives. When you have some expert who’s saying that something is bad and people are telling you that it is good, 9 times out of 10, the people who are experiencing the thing are probably actually right.

And to this point, I don’t know how valid it is, but what do people use AI for? Therapist or life coaches, top of list.

MZ: One of the uses for Meta AI is basically, “I want to talk through an issue”, “I need to have a hard conversation with someone”, “I’m having an issue with my girlfriend”, “I need to have a hard conversation with my boss at work”, “Help me role-play this”, or, “Help me figure out how I want to approach this”. Which, by the way, is another use case that I think probably works better with voice, because you’re actually playing through the conversation rather than just text. But yeah, I think that this is going to be a huge part of it.

How do you get people to try yours, if they’re already on ChatGPT or whatever it might be? Just in their head, AI equals ChatGPT.

MZ: Well, there’s on the order of a billion monthly actives who are already using Meta AI for things, but I think fundamentally-

Are you worried that if you put an app out there — I was talking about this on Google the other day, Google actually has the most used AI product in the world, which is AI Search Overviews, but everyone’s like, “Oh, Gemini has only 30 million users”. Is this going to be a similar situation? “Oh, no one uses the Meta AI, look at the apps way down in the rankings”, and you’re like, “No, we have a billion over here”?

MZ: I don’t know, we’ll see. I think not all of these things need to work, but some of them need to work in a major way, and I think no one has the foresight to know exactly which things are going to work. I think you kind of have to have some theses about what direction you think the world is going to go in, put some bets out, see which ones work, and then have the agility to double down on the things that are good.

To your point, usually you cannot pass someone who is leading in a space by doing the same thing as them, you have to do something better, and sometimes the thing that can be better can be making it seamless to use that experience from within a product that they’re already using. If people want to text or call Meta AI, then doing that in the app that they text and call with is pretty helpful.

I think in the US, people probably underestimate how much Meta AI is used because they underestimate WhatsApp and the rest of the world. Most of the people who are using Meta AI are using it in WhatsApp. In the US, there’s 100 million people who use WhatsApp, it’s not tiny, but it is not yet the primary messaging platform in the US, iMessage still is. I think if you look at the curves, it probably will continue to be for at least two to three more years until WhatsApp overtakes it, but even when WhatsApp overtakes it, it’ll have just overtaken the last primary messaging app. Whereas if you look in most countries in the world, WhatsApp is all the communication, right?

If you’re doing that through WhatsApp and you also want to have a platform where you can message an AI, okay, that kind of makes sense. A lot of people use Meta AI in a lot of these places. Now there is an issue for us in that which is, well, the US happens to be the most important country, so is it okay for us if we’re kind of under-indexed in the United States? Probably not over time. That’s a thing that we need to do better at, but I do think it’s a thing that’s probably underestimated today.

Tariffs and Reality Labs

Changing gears just a little bit, I’ve long argued that, and it relates to the international point in a very different way, I’ve long argued that Meta is anti-fragile. For example, back when large advertisers boycotted you, that simply meant lower prices for the companies seeking to compete with them. When Apple implemented ATT it hurt you a lot, but it hurt all of your competitors, I think, far more, and you can see that looking backwards. Is there some concern just on the business point that tariffs are the sort of exogenous shock that not even Meta can escape, where it’s just impacting, particularly with your long tail, all these advertisers, it’s going to be rough?

MZ: I think what we’ve seen in past downturns, and we’ve been running the company for long enough now that we’ve been through a few of these cycles, is that when the financial environment gets tight, all companies look to rationalize their budgets by shifting resources to the things that really work. I think within marketing, the things that really work are the things that you can measure, and I think we’re basically at the top of the list. So, like you said, in those hard situations in the past — so 2008, 2009, some of the stuff that happened around COVID, some of the ATT stuff — the different kind of downturns along the way, they hit our business and revenue, but we gained market share.

I think one of the things that is good about being a controlled company and founder-led company is you can see past short-term pain to make the investments that you need to to deliver more value over time. When I see some downturns resulting in market share gains, then I’m going to do things like build out even more GPU infrastructure to serve those businesses and people better, which in the short term investors are kind of like, “Hey, your revenue is a little lower than we thought and now you’re actually increasing your expenses, what’s going on?”

Increasing CapEx.

MZ: We’ve seen these cycles over time where our stock goes down and it goes down dramatically.

90 bucks, three years ago.

MZ: Yeah, it goes down by 3x or whatever. But I guess one of the strategic advantages that we have of being a controlled company is fundamentally, I’m not a CEO who needs to worry about making the quarter in order to keep my job. We have a board at the company and a corporate structure which is very focused on maximizing long-term value. Which, I don’t know, if you look at the theory of how all these endowments or things are run, being able to invest over a longer term time horizon actually just is its own source of alpha that yields higher returns over time and that’s, I think, one of the advantages that we have as a company.

If you continue to deliver on that long term, is it still okay if that long term doesn’t include a platform, if you’re just an app?

MZ: It depends on what you’re saying. I think early on, I really looked up to Microsoft and I think that that shaped my thinking that, “Okay, building a developer platform is really cool”.

It is cool.

MZ: Yeah, but it’s not really the kind of company fundamentally that we have been historically. At this point, I actually see the tension between being primarily a consumer company and primarily a developer company, so I’m less focused on that at this point.

Now, obviously we do have developer surfaces in terms of all the stuff in Reality Labs, our developer platforms. We need to empower developers to build the content to make the devices good. The Llama stuff, we obviously want to empower people to use that and get as much of the world on open source as possible because that has this virtuous flywheel of effects that make it so that the more developers that are using Llama, the more Nvidia optimizes for Llama, the more that makes all our stuff better and drives costs down, because people are just designing stuff to work well with our systems and making their efficiency improvements to that. So, that’s all good.

But I guess the thing that I really care about at this point is just building the best stuff and the way to do that, I think, is by doing more vertical integration. When I think about why do I want to build glasses in the future, it’s not primarily to have a developer platform, it’s because I think that this is going to be the hardware platform that delivers the best ability to create this feeling of presence and the ultimate sense of technology delivering a social connection and I think glasses are going to be the best form factor for delivering AI because with glasses, you can let your AI assistant see what you see and hear what you hear and talk in your ear throughout the day, you can whisper to it or whatever. It’s just hard to imagine a better form factor for something that you want to be a personal AI that kind of has all the context about your life.

Is the dream still the glasses and VR is a way to get there? Where does VR fit in this?

MZ: The glasses are going to be by far the bigger thing. There’s already a billion or two billion people in the world who wear some kind of glasses, like what you’re wearing. It’s sort of unimaginable to me that 10 years from now, every pair of glasses that already exists isn’t just going to be AI glasses at some point at a minimum, and AI glasses with holograms at a maximum. Plus, I think a lot of people who wear contacts today will choose to wear glasses because the stuff is super valuable.

I assume your glasses that you are wearing do not have prescription lenses in them?

MZ: These don’t. Actually, I wear contacts normally, I have very bad vision. But no, I just started wearing these because it’s super useful throughout the day to have AI glasses on.

Well, because I just thought of something with the VR angle. I talked about this, you started out connecting people and then maybe it ends up with AI, you connect with AI. But maybe it turns out from a VR perspective, it’s AI in this generative content that makes it that much more immersive and somewhere you want to be more frequently, and that solves the cold start problem. What do you actually use these for beyond just games? And then, once everyone gets them on, then we’re back full circle and I can get the experience of being with my friends and us virtually attending a game together.

MZ: I think that that’s all right. I actually think that both AR glasses and VR are going to be huge markets, it’s just that I think that glasses are going to be the phones of the future and VR is going to be the TV of the future. If you think about it, we obviously don’t walk around the world carrying TVs, but the average person spends hours a day with a TV, and we’re going to want that to get more immersive and more engaging over time too. As the form factor and quality of the VR headsets improve, I think that that’s going to replace tablets and it’s going to replace a lot of TVs and things like that. The fidelity of the hologram that you can get where you’re putting photons in the world with AR will never be the same depth as what you can get if you just have a screen and you are printing pixels on it. It’s like AR can only ever add photons to the world, it can’t take them away. Whereas VR, you’re blocking out the world, you’re starting with a blank canvas, you can do whatever you want. So, I would guess they’re going to be both.

How long until Orion? I did get to try it last year.

MZ: The goal is a few years, and we’ll see how we do.

Why’d you show it off? You basically went and showed Apple and everybody else, “Look, this is what we can do, but we can’t ship it yet”.

MZ: I think we wanted to get feedback, we like developing stuff in the open. There’s always this trade off between, on the one hand, getting feedback also means that your competitors can see stuff, but it also advances your thinking.

Does it give a big kick in the butt to your own team saying, “Now you got to get this out the door”?

MZ: I think people take pride in their work, so having a moment where they can show things definitely rallies them. But no, I think open and closed development have very different advantages and disadvantages. Where if you were really truly on track to do something amazing by yourself, then keeping it a secret until the moment you release it has merits. But I think most things are not like that, most things require iteration and feedback and ideas, and I think in most cases, doing it openly coupled with a long-term commitment to doing that thing, will lead to a faster pace of progress in you doing better work than others.

I think in something like VR and AR where the other companies in the space, it’s like every time we do something good, they kind of spin up their AR program again. There are all these rumors about, okay, Google cancels their stuff, starts it up, cancels it, starts it up, whatever. It’s like Apple decided there wasn’t going to be glasses, oh the Ray-Bans are doing well, spins up their glasses program again. I think fundamentally we’re just going to be working on this for 10 or 15 years, consistently, openly getting feedback on it, I think we care more than they do, and we have, I think, the best people in the world working on it because we’ve shown that.

I think similarly for the AI problems that we’re working on, it’ll be a similar thing, which is there are problems that everyone agrees on are the good ones to work on and then there are ones that today are sort of more marginal. Like some of the stuff around companionship or creating new types of content in feed that I think people haven’t — there’s no existence proof yet that says that’s going to be good.

Does it just kill you that Studio Ghibli happened on OpenAI and not on yours?

MZ: People use Imagine on Meta AI a lot too, but no, I thought that was good. It was a cool thing.

They all got posted on social media, right?

MZ: It was good. Look, I think this is a big enough space that no one company is going to do all the cool things and I think in this world, if you can’t be happy when other people do cool things, then you are going to be a pretty sad person.

Last question: our first interview was when you changed the name of the company to Meta. Still happy with the name?

MZ: Yeah, I think it’s a great name. I think it’s evocative of the future where the digital and physical worlds are more blended together, which is coming true even faster than I would’ve guessed because of the AI stuff. The thing that has surprised me since then is if you would have asked me back then, “Were we going to get the fully holographic world first or AI?”, I would’ve guessed the fully holographic world. So it’s cool that we’re getting AI sooner, but fundamentally they are both parts of the same vision and I think glasses are going to be fundamental for both of them. Way more people are using AI glasses now than they would have if there hadn’t been this massive growth in the AI tech.

Very good, it was nice to connect with you again, thank you very much.

MZ: Good seeing you.

This Daily Update Interview is also available as a podcast. To receive it in your podcast player, visit Stratechery.

