Kubernetes Autoscaling: Unlocking Efficiency at Scale with Kapstan

As businesses scale, infrastructure that can adjust to fluctuating workloads becomes critical. Kubernetes, the leading container orchestration platform, addresses this challenge with autoscaling, a feature that helps your applications stay responsive and cost-efficient through traffic spikes and dips. At Kapstan, we help companies tap into the full power of Kubernetes autoscaling to streamline operations, reduce cloud costs, and boost performance.

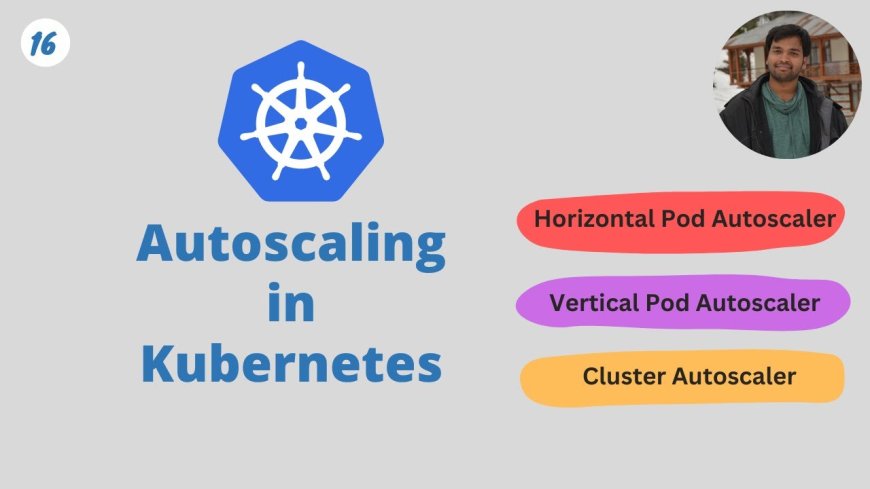

What Is Kubernetes Autoscaling?

Kubernetes autoscaling is the dynamic adjustment of compute resources based on real-time demand. Rather than provisioning fixed resources that may go underutilized or overloaded, autoscaling allows your infrastructure to respond automatically to traffic changes.

Kubernetes offers three main types of autoscaling:

- Horizontal Pod Autoscaler (HPA): Adjusts the number of pod replicas in a deployment based on CPU usage or other metrics.
- Vertical Pod Autoscaler (VPA): Adjusts the resource requests and limits (CPU/memory) of individual pods without changing the pod count.
- Cluster Autoscaler (CA): Scales the number of nodes in the cluster by adding or removing VMs based on pending pods and node utilization.
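To make the HPA's behavior concrete, here is a minimal Python sketch of the replica calculation described in the Kubernetes documentation: the desired count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The function name and the sample numbers are illustrative assumptions, and the sketch leaves out details such as min/max replica bounds and pods that are not yet ready.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Approximate the HPA rule: ceil(current_replicas * current / target).

    `tolerance` mirrors the default 10% band inside which the HPA
    leaves the replica count unchanged.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target must be positive")
    ratio = current_metric / target_metric
    # Small deviations from the target do not trigger a scaling action.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


if __name__ == "__main__":
    # Hypothetical case: 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90.0, 60.0))
```

With these assumed inputs the ratio is 1.5, so the sketch asks for 6 replicas; a reading of 63% against the same target stays inside the tolerance band and leaves the count at 4.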

Why Autoscaling Matters

1. Optimized Resource Utilization

Autoscaling helps your workloads use only the compute resources they need. This cuts the waste that comes with over-provisioning and can lead to significant savings, especially in cloud-native environments.

2. Improved Application Performance

During peak loads, autoscaling adds capacity without manual intervention, which helps keep the user experience smooth. When demand drops, unused resources are automatically decommissioned.

3. Operational Simplicity

With the right configuration, autoscaling handles growth and contraction behind the scenes. This means your DevOps team can focus more on delivering features than managing infrastructure.

Implementing Kubernetes Autoscaling with Kapstan

At Kapstan, we guide businesses through end-to-end Kubernetes optimization, with autoscaling as a cornerstone of our services. Whether you’re running microservices, APIs, or batch jobs, our team ensures your workloads scale efficiently and reliably.

Step-by-Step Deployment

- Assessment & Configuration: We analyze your current workload behavior and define key metrics (like CPU, memory, or custom application metrics) to trigger autoscaling events.
- HPA/VPA/CA Setup: Based on your needs, we implement and fine-tune the right mix of HPA, VPA, and Cluster Autoscaler. For cloud platforms like AWS, GCP, or Azure, we integrate node-provisioning tools suited to the platform (such as Karpenter on AWS or the GKE cluster autoscaler) to further enhance capabilities.
- Monitoring & Observability: Using Prometheus, Grafana, and other monitoring tools, we provide real-time visibility into autoscaling behavior. This keeps scaling decisions and their effect on performance transparent and traceable.
- Cost Control: Kapstan also integrates cost tracking tools to ensure your autoscaling setup aligns with your budget, so the capacity you pay for tracks what you actually use as closely as possible.
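As an illustration of what the HPA part of such a setup can look like, the Python sketch below builds an `autoscaling/v2` HorizontalPodAutoscaler manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The deployment name `web`, the replica bounds, and the 60% CPU target are hypothetical values chosen for the example, not recommendations.

```python
import json


def build_hpa_manifest(deployment: str, min_replicas: int,
                       max_replicas: int, cpu_target_percent: int) -> dict:
    """Return an autoscaling/v2 HPA manifest targeting a Deployment."""
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("need 1 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            # The workload whose replica count the HPA manages.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            # Scale on average CPU utilization, measured against pod requests.
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {
                        "type": "Utilization",
                        "averageUtilization": cpu_target_percent,
                    },
                },
            }],
        },
    }


if __name__ == "__main__":
    # "web" is a placeholder deployment name for this example.
    print(json.dumps(build_hpa_manifest("web", 2, 10, 60), indent=2))
```

Generating the manifest from code is only one option; the same fields can be written directly in YAML. The point of the sketch is the shape of the object: a scale target, replica bounds, and one or more metrics with targets.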

Kubernetes Autoscaling in Action: Real-World Impact

Let’s say you operate an e-commerce application that experiences traffic surges during promotions. Without autoscaling, your app could crash under load or run on idle servers at night.

With Kapstan’s autoscaling solution:

- HPA adds pods automatically as traffic increases.
- Cluster Autoscaler provisions new nodes when the current ones are full.
- VPA adjusts pod resources to better match demand. When VPA runs alongside HPA on the same workload, the two should not act on the same CPU or memory metric.
- At night, unused nodes are safely decommissioned, saving money.

This scaling model helps maintain uptime while also optimizing cloud spending and improving the user experience.
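The arithmetic behind this scenario can be sketched in a few lines of Python. All numbers here are assumptions made up for illustration: each pod is taken to handle 100 requests per second, each node to fit 8 pods, and traffic to range from 50 requests per second at night to 2,400 during a promotion. A real Cluster Autoscaler reacts to pods that cannot be scheduled based on their resource requests; the fixed pods-per-node figure is a simplification standing in for that.

```python
import math


def pods_needed(requests_per_second: float, rps_per_pod: float,
                min_pods: int = 2) -> int:
    """Pods required to serve the load, never dropping below a floor."""
    return max(min_pods, math.ceil(requests_per_second / rps_per_pod))


def nodes_needed(pods: int, pods_per_node: int) -> int:
    """Nodes required to place the pods, assuming uniform pod size."""
    return math.ceil(pods / pods_per_node)


if __name__ == "__main__":
    # Hypothetical traffic levels in requests per second.
    traffic = {"night": 50, "daytime": 400, "promotion": 2400}
    for period, rps in traffic.items():
        pods = pods_needed(rps, rps_per_pod=100)
        nodes = nodes_needed(pods, pods_per_node=8)
        print(f"{period}: {pods} pods on {nodes} node(s)")
```

Under these assumptions the promotion needs 24 pods on 3 nodes, while night traffic falls back to the 2-pod floor on a single node. The gap between those two states is the capacity a fixed-size cluster would either lack during the surge or pay for while idle.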

Best Practices from Kapstan’s Playbook

- Set realistic CPU/memory limits and requests to avoid resource starvation or over-allocation. HPA utilization targets are measured against requests, so inaccurate requests skew scaling decisions.
- Use custom metrics (like queue length or request latency) for better scaling decisions beyond CPU/memory.
- Simulate load scenarios before production deployment to test how your application reacts to scaling.
- Implement cooldown periods (stabilization windows, in HPA terms) to prevent excessive scaling events and system instability.
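The cooldown idea in the last practice can be sketched as a small Python class. It is modeled on the HPA's scale-down stabilization window, which by default looks back 300 seconds and acts on the highest replica recommendation seen in that period, so a brief dip in load does not remove pods that are needed again moments later. The class name and the timestamps are illustrative assumptions; in a real cluster this behavior is configured on the HPA rather than implemented by hand.

```python
from collections import deque


class ScaleDownStabilizer:
    """Damp scale-downs by honoring the highest recent recommendation."""

    def __init__(self, window_seconds: float = 300.0):
        self.window_seconds = window_seconds
        self._history = deque()  # (timestamp, recommended replicas)

    def recommend(self, now: float, desired: int) -> int:
        """Record a new recommendation and return the stabilized count."""
        self._history.append((now, desired))
        # Forget recommendations that have aged out of the window.
        cutoff = now - self.window_seconds
        while self._history[0][0] < cutoff:
            self._history.popleft()
        # Scale-ups pass through at once; scale-downs wait until no
        # higher recommendation remains inside the window.
        return max(replicas for _, replicas in self._history)


if __name__ == "__main__":
    stabilizer = ScaleDownStabilizer(window_seconds=300)
    # Hypothetical (time in seconds, raw recommendation) samples.
    for t, raw in [(0, 10), (60, 4), (120, 12), (400, 4), (430, 4)]:
        print(t, raw, stabilizer.recommend(t, raw))
```

In this run the dip to 4 at 60 seconds is ignored because the recommendation of 10 is still inside the window, the rise to 12 takes effect immediately, and the count only settles at 4 once every higher recommendation has expired.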

The Future of Autoscaling: AI and Event-Driven Scaling

As workloads become more complex, the next evolution of autoscaling will incorporate AI-based predictions and event-driven triggers. At Kapstan, we’re already exploring solutions that leverage machine learning to anticipate resource needs before they spike—taking autoscaling from reactive to proactive.

Conclusion

Kubernetes autoscaling is essential for modern infrastructure—balancing performance, efficiency, and cost. With the right expertise, it can transform your operations and drive significant ROI.

At Kapstan, we specialize in designing and implementing autoscaling solutions tailored to your unique workload demands. Whether you're just getting started with Kubernetes or optimizing a mature deployment, our team ensures your infrastructure scales with confidence.
