Archetype AI is like ChatGPT for the physical world

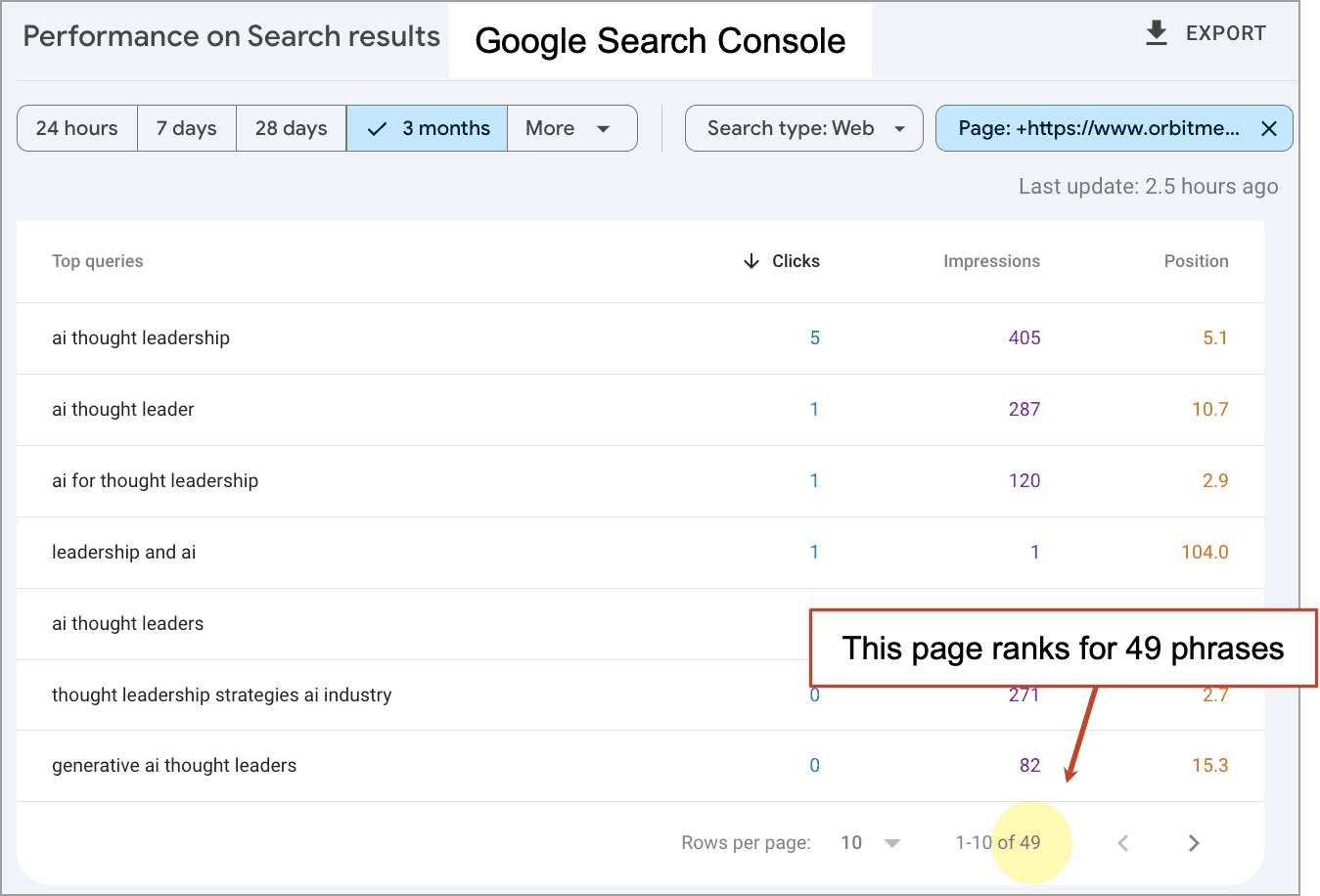

Bellevue, Washington, is home to thousands of Microsoft employees, and its AI-powered traffic monitoring system lives up to the expectations that come with such a tech-heavy population. Using existing traffic cameras capable of reading signs and lights, it tracks not just crashes but also near misses. And it suggests solutions to managers, like rethinking a turn lane or moving a stop line.

But this AI technology wasn’t born out of Microsoft and its big OpenAI partnership. It was developed by a startup called Archetype AI. You might think of the company as OpenAI for the physical world.

“A city will report an accident after an accident happens. But what they want to know is, like, where are the accidents that nearly happened—because that they cannot report. And they want to prevent those accidents,” says Ivan Poupyrev, cofounder of Archetype AI. “So predicting the future is one of the biggest use cases we have right now.”

Poupyrev and Leonardo Giusti founded Archetype after leaving Google’s ATAP (Advanced Technology and Projects) group, where they worked on cutting-edge initiatives like the smart textile Project Jacquard and the gadget radar Project Soli. Poupyrev details his history of working at giants like Sony and Disney, where engineers always had to develop one algorithm to understand something like a heartbeat, and another for steps. Each physical thing you wanted to measure, whatever that may be, was always its own discrete system: another mini piece of software to code and support.

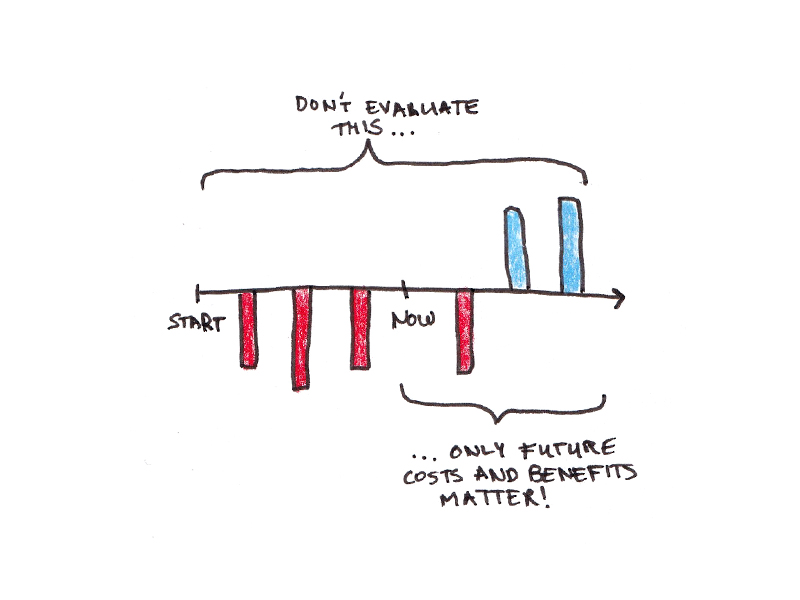

There’s simply too much happening inside our natural world to measure or consider it all through this one-problem-at-a-time approach. As a result, our highest-tech hardware still understands very little of our real environment, and what is actually happening in it.

What Archetype is suggesting instead is an AI that can track and react to the complexity of the physical world. Its “Newton” foundational model is trained on piles of open-sensor data from sources like NASA—which publishes everything from ocean temperatures gathered with microwave scanners to infrared scans of cloud patterns. And much like an LLM can infer linguistic reasoning by studying texts, Newton can infer physics by studying sensor readings.

The company’s big selling point is that Newton can analyze output from sensors that already exist. Your phone has a dozen or more, and the world may soon have trillions—including accelerometers, electrical and fluid flow sensors, optical sensors, and radar. By reading these measurements, Newton can actually track and identify what’s going on inside environments to a surprising degree. It’s even proven capable of predicting future patterns to foresee actions ranging from the swing of a small pendulum in a lab to a potential accident on a factory floor to the sunspots and tides in nature.

In many ways, Archetype is constructing the sort of system truly needed for ambient computing, a vision in which the lines between our real world and computational world blur. But rather than focusing on a grand, heady vision, it’s selling Newton as a sort of universal translator that can turn sensor data into actionable insight.

“[It’s a] fundamental shift to how we see AI as a society. [Right now] it’s an automation technology where we replace part of our human labor with AI. We delegate to AI to do something,” Giusti says. “We are trying to shift the perspective, and we see AI as an interpretation layer for the physical world. AI is going to help us better understand what’s happening in the world.”

Poupyrev adds, “We want AI to act as a superpower that allows us to see things we couldn’t see before and improve our decision-making.”

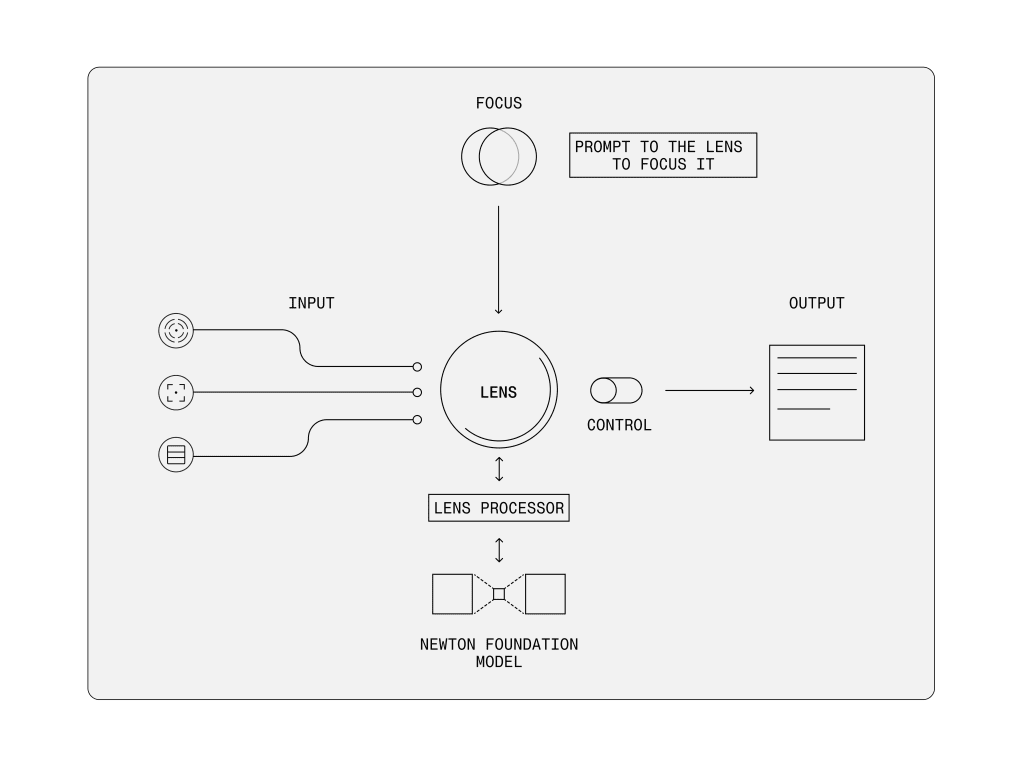

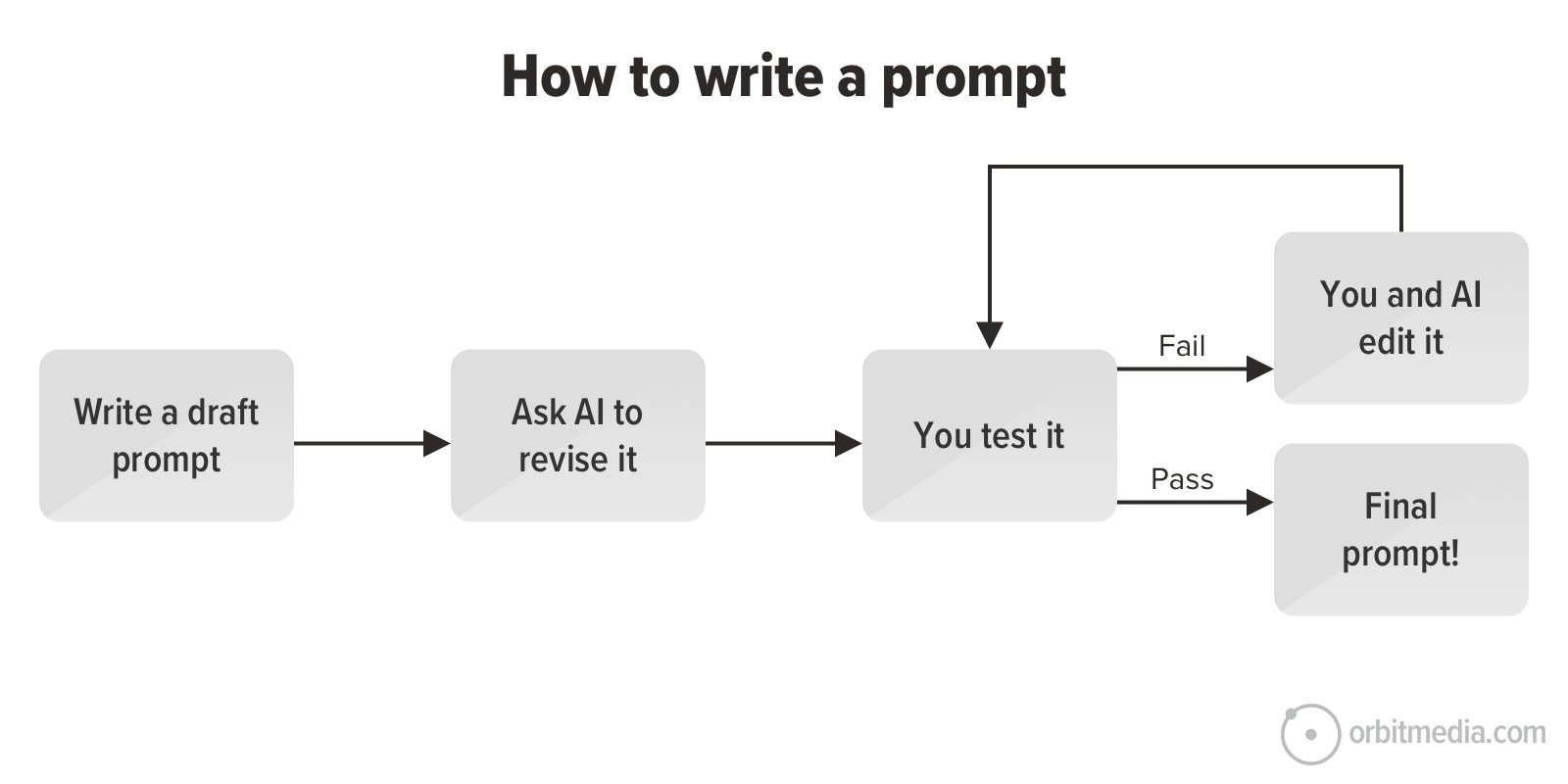

How does Archetype AI work? Lenses.

In one of Archetype’s demos, a radar notices someone entering the kitchen. A microphone can listen for anything prompted, like washing dishes. It’s a demonstration of two technologies that reside in many smartphones, but through the context of Newton, sensor noise becomes knowledge.

In another demo, Newton analyzes a factory floor and generates a heat map of potential safety risks (notably drawn in the path of a forklift coming close to people). In yet another demo, Newton analyzes the work of construction boats and charts out a timeline of their active hours each day. Of course, physics alone can’t account for everything happening in these scenes, which is why Newton also includes training data on human behavior (so it knows that, say, shaking a box might count as “mishandling” it) and uses traditional LLM technology for labeling what’s going on.

Each different front-end UX described above required some custom code, and Archetype has been working with its early partners in a white-glove approach. But the core logic at play is all built upon Newton.

“Our companies don’t care about some AGI benchmark we can meet and not meet,” Poupyrev says. “What they care about is that this model solved their particular use cases.”

Much like entire apps are now built upon OpenAI’s ChatGPT and Anthropic’s Claude, Archetype is making Newton AI available as an API (and customers can request access now). Technically, you can run Newton from computers operated by Archetype, on cloud services like AWS or Azure, or even on your own servers if you prefer. Your primary task is simply to feed whatever sensor data your company already uses through a Newton AI “lens.”

The lens is the company’s metaphor for how it translates sensor information into insight. Unlike LLMs, which work on question-answer queries that lead us to metaphors like conversations and agents, sensors output streams of information that may need constant analysis. So a lens is a means to scrutinize this data at intervals or in real time. And the operational cost of running Newton AI will be proportionate to the amount and frequency of your own sensor analysis.
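To make the interval-versus-real-time distinction concrete, here is a minimal sketch in Python. It is not Archetype's API: `run_lens`, `LensResult`, and `peak_to_peak` are hypothetical names invented for illustration, and the "analysis" is a trivial statistic rather than a model call. The only point is that the same stream can be examined on every sample or at a coarser stride, and that the number of analyses (and so, presumably, the cost) scales with that choice.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass
class LensResult:
    start_index: int  # index of the first sample in the analyzed window
    value: float      # whatever the analysis function returned


def run_lens(
    samples: Iterable[float],
    analyze: Callable[[List[float]], float],
    window: int,
    stride: int,
) -> Iterator[LensResult]:
    """Apply `analyze` to a sliding window over a sensor stream.

    A stride of 1 approximates real-time analysis; a larger stride
    analyzes at intervals and therefore makes fewer analysis calls.
    """
    buffer: List[float] = []
    seen = 0
    for sample in samples:
        buffer.append(sample)
        if len(buffer) > window:
            buffer.pop(0)
        seen += 1
        # Analyze once the window is full, then every `stride` samples.
        if len(buffer) == window and (seen - window) % stride == 0:
            yield LensResult(start_index=seen - window, value=analyze(list(buffer)))


def peak_to_peak(values: List[float]) -> float:
    """Stand-in analysis: the spread between the largest and smallest reading."""
    return max(values) - min(values)


# Made-up readings with one spike, purely for illustration.
stream = [0.0, 0.1, 0.0, 0.2, 0.1, 3.0, 0.1, 0.0, 0.2, 0.1, 0.0, 0.1]

realtime = list(run_lens(stream, peak_to_peak, window=4, stride=1))
interval = list(run_lens(stream, peak_to_peak, window=4, stride=4))

print(len(realtime), "analyses at stride 1")
print(len(interval), "analyses at stride 4")
```

Both runs see the spike, but the stride-4 run does so with a third as many analysis calls, which is the trade-off between responsiveness and operating cost that the lens metaphor implies.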

Tuning the lens is surprisingly simple: You can use natural language prompts to ask the system something like “alert me every time there’s a safety issue on this floor” or “notify me if an alarm goes off.” But what’s particularly exciting to the company is that in analyzing sensor waveforms, Newton AI has proven that it doesn’t just understand a lot of what’s happening, but it can actually predict what may happen in the future. Much like autocomplete already knows what you might type next, Newton can look at waveforms of data (like electrical or audio information from a machine) to predict the next trend. In a factory, this might allow it to spot the imminent failure of a machine.
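The autocomplete analogy can be illustrated with a far simpler stand-in than a foundation model. The sketch below is not how Newton works; it swaps in a classic two-term autoregressive fit, which predicts each sample from the two before it. The function names `fit_ar2` and `forecast` and the synthetic sine wave are assumptions made for the example.

```python
import math
from typing import List, Tuple


def fit_ar2(series: List[float]) -> Tuple[float, float]:
    """Least-squares fit of x[t] ~ a * x[t-1] + b * x[t-2]."""
    s11 = s12 = s22 = r1 = r2 = 0.0
    for t in range(2, len(series)):
        x1, x2, y = series[t - 1], series[t - 2], series[t]
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        r1 += x1 * y
        r2 += x2 * y
    # Solve the 2x2 normal equations by Cramer's rule.
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("series is too short or too degenerate to fit")
    a = (r1 * s22 - r2 * s12) / det
    b = (r2 * s11 - r1 * s12) / det
    return a, b


def forecast(series: List[float], steps: int) -> List[float]:
    """Extend the series by feeding each prediction back in as input."""
    a, b = fit_ar2(series)
    history = list(series[-2:])
    predictions: List[float] = []
    for _ in range(steps):
        nxt = a * history[-1] + b * history[-2]
        predictions.append(nxt)
        history.append(nxt)
    return predictions


# Synthetic "sensor" waveform: a clean sine wave sampled 200 times.
omega = 0.2
wave = [math.sin(omega * t) for t in range(200)]

predicted = forecast(wave, steps=20)
actual = [math.sin(omega * t) for t in range(200, 220)]
worst_error = max(abs(p - a) for p, a in zip(predicted, actual))
print("largest forecast error over 20 steps:", worst_error)
```

A pure sine wave is the easy case, since it obeys a two-term recurrence exactly. Real machine signals are noisy and nonstationary, which is where a hand-tuned model like this breaks down and where a model trained on broad sensor data would have to earn its keep.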

To demonstrate this idea, Archetype shares data from an accelerometer measuring the swing of an elastic pendulum (aka a pendulum on a spring—which is a classic way to generate chaotic behavior). Even though the model has never been trained specifically on pendulum equations or been programmed to understand accelerometers, Archetype claims that it can accurately track the swing of the chaotic pendulum and predict its next movements. Poupyrev says the same is true for thermoelectric behaviors, like we might see in electronics.
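For readers who want a feel for the signal involved, the following sketch simulates an elastic pendulum using the standard textbook equations of motion for a mass on a spring that is free to swing. It does not reproduce Archetype's data or model; the constants, the initial state, and the function names are illustrative assumptions, and the integrator is an ordinary fourth-order Runge-Kutta step.

```python
import math
from typing import List, Tuple

State = Tuple[float, float, float, float]  # (r, r_dot, theta, theta_dot)

G = 9.81   # gravitational acceleration, m/s^2
K = 40.0   # spring constant, N/m (illustrative value)
M = 1.0    # mass, kg (illustrative value)
L0 = 1.0   # natural spring length, m (illustrative value)


def derivatives(s: State) -> State:
    """Time derivative of the state; theta is measured from straight down."""
    r, r_dot, theta, theta_dot = s
    r_ddot = r * theta_dot ** 2 - (K / M) * (r - L0) + G * math.cos(theta)
    theta_ddot = -(G * math.sin(theta) + 2.0 * r_dot * theta_dot) / r
    return (r_dot, r_ddot, theta_dot, theta_ddot)


def rk4_step(s: State, dt: float) -> State:
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(base: State, k: State, scale: float) -> State:
        return tuple(b + scale * ki for b, ki in zip(base, k))

    k1 = derivatives(s)
    k2 = derivatives(shifted(s, k1, dt / 2))
    k3 = derivatives(shifted(s, k2, dt / 2))
    k4 = derivatives(shifted(s, k3, dt))
    return tuple(
        x + dt / 6.0 * (a + 2 * b + 2 * c + d)
        for x, a, b, c, d in zip(s, k1, k2, k3, k4)
    )


def energy(s: State) -> float:
    """Total mechanical energy, used as a sanity check on the integrator."""
    r, r_dot, theta, theta_dot = s
    kinetic = 0.5 * M * (r_dot ** 2 + (r * theta_dot) ** 2)
    spring = 0.5 * K * (r - L0) ** 2
    gravity = -M * G * r * math.cos(theta)
    return kinetic + spring + gravity


def simulate(initial: State, dt: float, steps: int) -> List[State]:
    states = [initial]
    for _ in range(steps):
        states.append(rk4_step(states[-1], dt))
    return states


# Stretched spring released from rest at an angle of one radian.
trajectory = simulate((1.2, 0.0, 1.0, 0.0), dt=0.001, steps=5000)
drift = abs(energy(trajectory[-1]) - energy(trajectory[0]))
print("energy drift over 5 simulated seconds:", drift)
```

The radial and angular motions feed into each other through the `r * theta_dot ** 2` and `r_dot * theta_dot` terms, which is why the resulting trace is hard to extrapolate by eye. An accelerometer on the bob would record a signal derived from this motion, and that kind of waveform is what the claim above says Newton can track and extend without having been given these equations.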

By observing patterns normally ignored, and coupling that information with predictive analysis, Archetype believes it can revolutionize all sorts of platforms, ranging from industrial applications to urban planning. And for its next act, the company wants Newton to output in more than text; there’s no reason why it can’t communicate in symbols or real-time graphs.

The claims are, on one hand, outrageously large and tricky to grok. But on the other, Poupyrev has built his entire career on building mind-bendingly novel innovations from existing technologies—that actually work.

For any company interested in Newton, Archetype is still working closely with partners to use its API. Pilots start in the mid-six figures, while annual projects range into the millions, depending on scale.
