An Interview with Snowflake CEO Sridhar Ramaswamy About Data and AI

An interview with Snowflake CEO Sridhar Ramaswamy about taking over Snowflake, focusing on product, and competing in AI.

Good morning,

This week’s Stratechery Interview is with Snowflake CEO Sridhar Ramaswamy. Ramaswamy started his career at Bell Labs, before spending 15 years at Google building out Google’s Search Ads Product. Ramaswamy then started Neeva, a subscription-based search engine, which was acquired by Snowflake in 2023; eight months later and Ramaswamy replaced Snowflake Chairman Frank Slootman as CEO with a mandate to refocus Snowflake on products, specifically AI.

We cover all of these topics in this interview, including Ramaswamy’s background and experience at Google, and his current take on the company and the challenges it faces in search. Then we dive into Snowflake and his unexpected elevation to CEO, including topics like business models, go-to-market motions, and incentives. The rest of the interview is about AI and Snowflake’s position in the market: can Snowflake extend beyond its structured data warehouse roots before competitors like Databricks leverage AI to wrangle unstructured data into a more compelling offering?

As an aside, I did previously interview Databricks Founder and CEO Ali Ghodsi about similar topics, and while the two companies are bitter rivals, it is notable how similar their long-term view of their AI opportunity is, particularly relative to the hyperscalers on one side and SaaS companies on the other.

As a reminder, all Stratechery content, including interviews, is available as a podcast; click the link at the top of this email to add Stratechery to your podcast player.

On to the Interview:

An Interview with Snowflake CEO Sridhar Ramaswamy About Data and AI

This interview is lightly edited for clarity.

Topics: Google and Neeva | Taking Over Snowflake | Business Model and GTM | Snowflake and AI | Models and Products | The Enterprise Stack

Google and Neeva

Sridhar Ramaswamy, welcome to Stratechery.

Sridhar Ramaswamy: Hey, Ben. Excited to chat.

It’s great to get a chance to talk to you on the record. We chatted previously when you were at Neeva, which I do want to ask you about, but I always like to start these interviews with your background. Where did you grow up and how did you get started in technology?

SR: I grew up all over South India in a state called Tamil Nadu, and then I moved to Bangalore when I was 10 years old. Many of my formative memories are in that city and of course, it’s now called Bengaluru and it’s a whole lot different from the city that I grew up in.

Yeah, stuff changes a lot.

SR: It has changed a lot. I got a bachelor’s from IIT Madras, now called Chennai, and then I got a PhD in databases. In many ways, IIT Madras informed how I think about computing. An amazing set of colleagues that I studied with, professors that could have been anywhere in the world, but choose to be in India to teach students like us. Brown University was also very helpful in developing my critical thinking, more unstructured work. As you know, getting a PhD is all about finding interesting problems, which is very different from taking courses. And then a few years at Bell Labs. I would say those three, probably the biggest early influences in computing, and then I moved out to the West Coast.

What did you do at Bell Labs?

SR: I was in the database research group. I joke to people that this is the building at Murray Hill where the transistor was invented, I was a rather pedestrian database researcher. I tell people that my claim to fame is I was next door to one of the scientists that invented most theoretical aspects of quantum computing, but it was an amazing place. And from there I came to the Valley, joined Google early 2003 to a very different world of computing where you have to relearn what you knew about software and computing.

The database aspects, you’ve come full circle in some regards being at Snowflake.

SR: 100%.

But Google, a lot different. Tell me about Google, tell me about your time there. Like I said, you were there at a very interesting period for the company there for a long time, very distinguished career. Walk me through that.

SR: I joined in 2003, I actually ran a pretty large software group for a small startup of over 100 people making $100 million revenue. But when I joined Google, I decided to go back to being an individual contributor because I said this is a company that is rethinking, redoing how we think about computing and distributed programming, and I wanted to learn from the ground up. I was very lucky to get accidentally placed, mind you, into the Search Ad serving team. Little did any of us know that this would turn out to be among the best businesses, if not the best business mankind has ever invented.

Was there anything relevant to doing traditional databases when now, I mean obviously at the end of the day you could argue everything is a database, but at Google’s scale, it’s something else almost entirely.

SR: Well, yes and no. A lot of early Google’s approach to computing was driven by the fact that early Google mostly dealt with read-only problems. For example, web search is mostly read-only. There’s a little cache of your preferences and stuff like that, but the bulk of it is read-only, and so there are approaches to distributing information, distributing load that are very different from how you think say about database scale. We did have MySQL databases at the ads team at Google, mind you, and I used to be part-time sysadmin for those databases for a while as well.

That sounds like a very stressful job.

SR: But it was amazing to learn about computing. We also built some of the most amazing data processing systems ever, log joining at planet scale. You have logs coming in from every user in the world that’s seeing ads. You need to join them with clicks, I was supposed to do it on time.

I was going to ask that. You mentioned search is mostly going to be read-only, but with ads, there is a write problem.

SR: Again, yes and no in the sense of stats processing is delayed processing, you’re not trying to do that in real time. Absolutely, advertiser updates did go to the MySQL database, but that was a tiny part of overall ads data, things like logs, logs processing, aggregate processing at scale. Among the largest logistic regression systems built early on the planet was a system called Smart Ass. There’s a lot of distributed systems programming and of course, incredibly high-scale serving systems as well, and so you really got to learn what distributed computing was about.

That was when I also rediscovered my love for actually leading, and Google let you lead any which way you wanted. I was a very hands-on technical leader for the longest time. I loved the mix of managing teams, helping them deliver great products, but also being super technical, super hands-on and it helped to work on an incredibly technically demanding product. Over time that became me running more of the Search Ads teams and then more of the Ads teams, which I did for five, six years, Ads and Commerce. This included fun things like launching Google Pay to the world, which was a whole saga onto itself, but also the various journeys of Google Commerce that you have covered elsewhere. It was a fascinating place, 15 amazing and incredibly rewarding years of my life and career.

So then you decide to leave, and not just decide to leave but decide, “I want to build a product that has no ads”, referring to Neeva, a subscription search engine. We can get to Neeva in a moment, but what was the motivation? Was it, “I’ve just done this for a long time, I really wanted to build something different, Google’s changed”, where are those reasons?

SR: It’s none of that. I had done ads for 15-and-a-half something years and I was like, “That’s a long time doing one kind of product”. Obviously serving ads for YouTube, which my co-founder at Neeva and I did is very different from doing search ads, different problems, different advertisers, different goals. But there was a part of me that wanted a reset button and I wanted to start fresh, and I also knew that I had a limited amount of time before I could go start a company. I was not young even then, and mostly I just decided to reset and start over.

I actually joined a venture firm, Greylock, right after I left Google and the startup Neeva came a little bit later. As I said, working at Google was an incredible privilege, but there was also a part of me that said that I did not want that to be the period of my professional career and so Neeva was a start over and sometimes you just have to start new journeys and they take you to interesting places, and here I am.

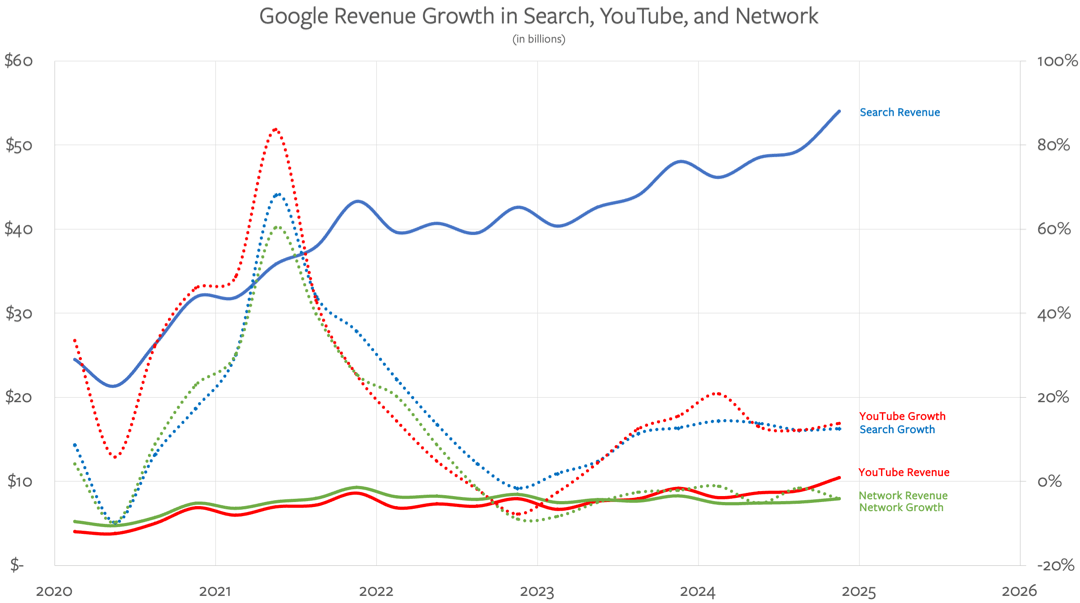

What’s your perception of Google today from afar? I think it’s been enough years, you can give some comment. Is Search fine? No one should be worried about it? Or are they moving too slowly? What’s your view?

SR: Look, this is the Valley, only the paranoid survive. That is as much true today as it was 5 or 10 or many more years ago. This is a time of incredibly rapid technological change, and we can go into detail about how different companies are approaching it.

I personally feel that there are phases of consolidation for companies when things are going well and you want to be more efficient at what you do. There are times when you really need radical change because there is radical change happening outside. Let’s face it, there are more and more questions where products like ChatGPT using web search just provides a better experience than searching on Google. Absolutely I search on Google as well, it is my default search engine on Safari, but I also have the ChatGPT app, I pay for it. I think you can say now that for many, many common use cases of search, a more conversational experience is a better experience.

And then on the other hand, for things like looking through complicated spaces where you really do not want a conversational experience, trying to figure out exactly which shoe to buy in a conversational experience, as you can imagine, that’s just plain annoying.

But on the other hand, I think you begin to have agentic systems that can do way more complicated workflows than what you could ever expect from a search engine. So I would say Search is under siege from both sides, from both the common things that you want simple answers to, and the complex things where you can apply very different techniques from what you have done before.

That’s a really interesting way to look at it. It’s like a pincer movement where you have the most complicated and the least complicated. Yeah, that’s a great way to frame it.

SR: And they all have very, very different solutions. And honestly, in my mind, that’s a story of data and Snowflake where, yes, we started at one end, but I think where we are headed to is an increasing blurring between product and service, for example. I think this is a rapidly changing world and, yes, one always has to worry that Search is not changing fast enough.

I was one of the key players in the mobile transition that Google made from being a desktop company to a mobile company. We used to have this phrase called RPM [revenue per thousand impressions] gap, which was basically the percentage of mobile RPM to desktop RPM. We started with that being 8% or 10% and spent a terrifying five years trying to get that closer to 100%.

Trying to close that gap, yeah.

SR: As I said, it was truly terrifying. I think this is one of those moments, it will take 3, 4, 5 years to unfold, but it is very difficult to think that how we consume information or products five years from now is going to be what we did three years ago.

Most of this interview is going to be about Snowflake, but before I get there, I did want to ask about Neeva. I think the high-level pitch is, “An ad-free search engine, subscription only”. Is that a good enough summary? What did you try to do and what lessons did you learn there?

SR: The intellectual underpinning of Neeva was basically that an ad-supported search model had reached its limit in terms of how much utility it could deliver and that one needed to start over. The best that I could do in 2019 was to say, “An ad-free private search engine”, those were the best concepts that I could find to describe.

Do you feel like you were in the right places at the wrong time?

SR: We absolutely were taking on the right problem probably two to three years too early. By the time we launched the first true web index RAG[retrieval-augmented generation]-based search engine in early 2023, we had been around for four-ish years. My team was tired, and we decided that we were better off applying what we knew to a new space in which we could be a lot more leveraged than continue on. You know how startups are, you have to be at the right place at the right time.

Yep.

SR: I think the idea was fine, but it needed one or two key pieces of technology that didn’t exist when we started.

The new product paradigm, which was the chat interface. I just talked to Sam Altman last week and he’s pretty anti-ad, he wants to try to make it all work with subscriptions, which is in line with what Neeva was doing, except they’re starting from being a chat interface and that’s probably a better place to start from.

SR: That’s right and 700 million users, which is also helpful.

I was going to ask that. Did you gain more appreciation for Google working at Neeva or maybe more disdain? It could go either way.

SR: Google is an amazing company, I’ve said this before. A lot of Google’s early users business was done both with a great product but also incredibly shrewd business deals with the likes of AOL and Yahoo and so on. I have a lot of respect for what Google did.

And that rolled forward to the deals with Apple and the Android and things like that.

SR: 100%, the Apple deal, the Firefox deal, I think the many PC manufacturer deals back when Microsoft was asleep.

Yep.

SR: All of those were what made Google the amazing business that it is. But business models also have shelf lives, and sometimes it’s very hard to disrupt yourself with a model that might not make any money when you’re making $200 billion on that model. It’s just not a thing that computes and that’s where we are.

Taking Over Snowflake

Snowflake acquired Neeva in May 2023, just after you’d finally figured out the AI RAG search idea, you were CEO eight months later, was that always the plan?

SR: It was not the plan.

Snowflake bought Neeva because of our expertise in both search and in early AI. We used to fine tune, it sounds like a joke now, seven nine-billion parameter models to do really good summarization to be able to write the cited answers that we now take for granted. We had to do all of this on a shoestring because we were serving web-scale traffic and certainly had web-scale indices six, eight billion pages large.

That was the expertise that Snowflake found attractive. We were all very clear about Snowflake and Neeva that we would not continue the consumer search engine. In fact, we shut it down even before the acquisition closed, and we went to work on creating a great search product within Snowflake. It’s now called Cortex Search, and then pioneering things like RAG-based search within Snowflake, but also then working on techniques for structured data.

My original deal with Frank Slootman, then CEO, was that I would stay at Snowflake for six months, work on AI, help set a roadmap for AI at Snowflake, and then figure out what it is that I wanted to do. And towards the end of the year, I started having a few conversations.

“Frank, I want your job, that’s what I’d like to do.”

SR: (laughing) It didn’t quite come to that, to be honest with you. Come October, I was trying to figure out what to do and this is when we started having a few conversations about whether there were different futures. Obviously, these things are complicated and transitions are hard, but it all came together in February a few months afterwards.

Well, when Frank Slootman retired, the stock dropped 20% overnight. It still hasn’t returned to the same level. Did you have any idea what you were getting into? Thrown right into the fire doing earnings calls, people are nervous?

SR: Yeah, even looking back, I think it is important to also recognize that we guided the year last year to 22% when the consensus expectation was 30%.

Right, there was a lot of stuff going on.

SR: There was a lot of stuff going on over there, and it did not help that Frank, the legend, was retiring, and I was coming in. I think it is really the double whammy of the change and the vastly lowered expectation, the guidance that really threw people for a tizzy.

On the other hand, yes, I’ve spent more time with our investors, analysts that are covering us, obviously the team. Look, Frank has always been a straight shooter and he pushed for this transition because he and the board strongly felt that it was really important for a product-oriented person, somebody that breathed and lived product for a long time to be the CEO at this time of tumultuous change. I have to give them a lot of credit, I have to give Frank a lot of credit for not dragging this out to be a three-year transition, I’m sure you’ve seen many of those in many other companies, he felt that a clean break was the right way to do it.

Yes, it was a little bit of shock for the people that were covering the company. It’s a remarkable company, I spoke to a lot of Snowflake customers before I became CEO and over just the past two, three quarters, you’ve seen how quickly the company has been resurgent, but also the speed at which we’ve been able to roll out new things.

So in that sense, I’m pretty happy with where we are. Obviously we are going through a bunch of macro changes that are depressing the whole stock market but I feel good about where Snowflake is both as a data platform, but much more importantly as an AI data platform and what we can look forward to in our future. Was it a trial by fire? Yes, but we will come out stronger.

Well, let’s spend the rest of the time on Snowflake. Just stepping back as someone who was relatively late to Snowflake, I think you had in your Twitter profile or LinkedIn somewhere, your job was learning Snowflake. What did you learn? How do you describe what Snowflake is today? What problems does it solve? Why is it a better solution? What’s your pitch to someone who doesn’t know what Snowflake is?

SR: You know of course that the adjective “learning” has multiple connotations. One is I am literally learning Snowflake as in you are using it as a verb, but there’s a different interpretation in which I’m a learning, a present continuous sense of just learning all the time, and it was meant to be tongue-in-cheek.

Snowflake came of age as an incredibly flexible analytic platform, a cloud data warehouse as it were, and like other technical products, it was born of the observation that whenever you and I wanted computing — and it still applies when we buy phones — people always bought boxes and they always came with fixed parameters for things like memory and storage and compute, and if you wanted to do more, well, that was too bad, you needed to wait for new boxes to show up. So we did this and our founders created Snowflake in the cloud to be this platform that could scale along multiple dimensions and it was an incredibly efficient platform.

Right. Separating the data and the compute.

SR: That’s right, separating storage and compute. So if you wanted to do super fancy machine learning analysis on your data set, you would struggle to do that in a legacy system. If it took too much compute because you had production jobs running on it here, that’s fine.

Or if you had two people that wanted to analyze something at the same time, tough luck.

SR: Tough luck, take the ticket and wait for it. Snowflake made all of that stuff easy.

Part two of Snowflake was then a collaboration platform, which we basically made the observation that businesses work with each other, interact with each other, partner with each other. As you know, many of those interactions were done by things like nightly transfer of FTP files. To this day, I find it dumbfounding that in 2025 ACH transactions take days to settle, that’s because banks are sending files to each other.

I had the same reaction when I was preparing for this interview and understanding how these data flows worked. And I’m like, “I was a big FTP user in 1998 when that’s how you used to get MP3 files before Napster came along”, are you the Napster of data sharing between companies? Is that a way to put it?

SR: No, it’s more like we are the circulatory system of the enterprise world. So companies like Fidelity for example, mandate that all their partners give data to them via Snowflake sharing. We are realtime, we are cross-cloud and data just shows up where you want it to show up and there’s no programming involved. What used to be an IT project is now someone configuring a screen and inviting a user on the other side to get at it.

An incredible number of data companies, companies that essentially sell data products for a living, whether it’s the New York Stock Exchange or S&P Global or State Street, they all distribute their products through Snowflake. So it’s been an incredibly important part of Snowflake.

Snowflake Integration

I think it’s actually quite interesting. One of the first big changes that happened under your watch was supporting Apache Iceberg, and letting your customers utilize storage outside of Snowflake. So you started out, we’re going to break apart storage and compute which is a big differentiator from your competitors, but it was also, “Oh great, we have two revenue streams”. Well, it turns out you have two separate revenue streams, you now have pressure to actually give up one of those revenue streams, which is kind of what happened.

SR: You know what I think, I think it was a mistake to think of storage as a revenue stream. I think we should always have sold that at cost and encouraged people to put as much as they could into Snowflake.

But independent of that, though, I think the open format standards are here to stay. This is because the progressive customers out there, enterprises that are out there, at some level do not want to go through more migrations again, they want to have their data in formats that they can directly run compute on. By the way, this is also going to put a lot of pressure on SaaS companies to give their data to their customers. These people all legitimately say, “At the very least, I want a copy of my data”, and Snowflake we embraced it.

I’ve made two big product changes obviously with the team over the past year. One is a wholesale embrace of open formats. Of course, one way to look at it is storage revenue that we used to get, now we won’t get because it’s sitting in open storage formats on cloud storage. A different way to look at it is most large enterprises have hundreds, sometimes thousand times as much data sitting in cloud storage as they do inside Snowflake, and all of a sudden our amazing compute engine can now be used for data engineering, can now be used for data ingestion.

So it significantly expands the value that we can bring to our customers and that’s been a change that we have embraced. Iceberg is the most popular format that is out there, but we are pushing beyond Iceberg. We released something called Apache Polaris, which is an open catalog format because we want to make it easy for people to discover data sets as well. And then of course, part two of that is AI which we will talk about soon.

What strikes me, though is you brought up the network effects that Snowflake has, and one of my senses is companies get in trouble when they hold on to too many points of integration, and so you had, “We’re going to sell you storage and compute”, and yay, two revenue streams. But to your point, that actually limited what was more of a lock-in. Lock-in is a fancy way to say an attractiveness of your platform, which was this data sharing layer, and it’s like you need to be one or the other. If you’re going to be a network, then everything else around it needs to be free.

SR: I completely agree with that. I think Snowflake’s core value is that of an amazing data platform for large-scale data computation. We have successfully brought elements of machine learning AI into it, so it’s a little bit of one-stop shop for many different kinds of computation that people want to run on that, and the network of customers that we have and how they work with each other, that’s the enduring value of Snowflake. Yes, we started as a proprietary format company, and it’ll take some time for it to play out, but I think there’s a huge opportunity in the open data space as well.

Well, it’s also a bit of a risk, because if you can just get compute, if you have your data independent, you could very easily go and use a competitor because the data is just sitting in the middle and anyone can lock into it.

SR: There’s a lot more to compute than running a SQL query. It gets into everything like, “What’s the governance support that you provide? What’s the amazing collaboration facilities that you provide?”. We provide disaster recovery for our biggest customers. There are customers that are required by regulators to run a primary in one cloud provider and a backup, which by the way costs only 10, 15% of the original one, in a different cloud provider, and all of this comes integrated in one tight, simple to use product. So there’s a lot more to Snowflake than, “Here’s a SQL query that you can run”. Does this mean that we have to keep competing and keep getting better at what we do? Well, welcome to competition.

Business Model and GTM

Yeah. Snowflake’s model, we’ve sort of touched on it a little bit, has been usage-based. And like we talked about, it was storage usage and compute usage and it was a big deal at the time to separate those and do them differently. In retrospect, do you feel very fortunate that, again, usage models was a new thing at the time, you’re not seat-based? Is there any world where Snowflake could have been seat-based or is that just something you take for granted at this point?

SR: There’s no easy answer to some of these questions and some changes are hard to make. The consumption model absolutely aligns value creation on both sides. In other words, we recognize revenue only when our customers actually use the compute that they have bought from us and so we, similar to the hyperscalers, we now have a finely developed go-to-market motion of “How do we work with customers? How do we create what we call use cases that deliver value for them, and then increase consumption along the way in value creating ways?”, I think it’s a very aligned model.

Every now and then I will meet some customer who will say, “I love Snowflake, I used it to optimize some key aspect of how I should do my pricing and the pricing algorithm costs a million dollars a year to run and I save like $300 million every year”. And you go, oops, that’s when you wish you had done things like value-based pricing, but I think the core model is very strong.

Do you ever feel any tension though in the incentives? Like there are horror stories out there about un-optimized runs blowing out your Snowflake bill, so obviously you want to help customers avoid those, you don’t want bad press as it were. On the other hand, it is good for the bottom line. How do you balance those incentives?

SR: I’m very, very clear with our customers and our sales team about this. I tell them any inefficient computation that a customer is running is a ticking time bomb, because they’re going to inevitably find out and be really upset about it.

Can you build that into the incentive structure of your team? Like, “If we lose a customer because their bill blew up, we’re going to claw back some commission”, or whatever it might be. How do you even deal with that other than pounding the table and yelling at folks?

SR: We have an entire process by which use cases are created, how they’re rolled out into production, the kind of metrics that our customers should be looking at. We help our customers set up governance schemes for, “How do you spin up a new project?”. I tell our customers you need to have a lightweight process. It can be as simple as a Google Form by which someone that wants to experiment can go spend $100 or $1000 on Snowflake. You can get a lot done with it, but if they want to run a real production use case that’s going to cost, I don’t know, $100-$200,000, they really need to size out the project and get approval from their finance person in order to be able to do it and a lot of our best customers do this. We teach this as a process that both our solution engineers and our customers adopt and it’s one of my priorities to actually have these be built into the core Snowflake platform itself. So things like lifecycle management is built as part of Snowflake, so cases like the one that you’re talking about don’t happen.

Along these lines, has it been a challenge from your perspective, so this is more of a personal question, learning how to lead a sales-driven organization? That’s a lot different than how things work at Google. Google, especially the ads, the biggest part of the market is self-serve, and obviously you have teams and you have a sales organization, but it’s a lot different than how it works in the enterprise.

SR: I think we don’t give the Google Business teams enough credit. I think they have produced some of the most amazing business leaders on the planet who have gone on to do amazing things, like my friend [Palo Alto Networks Chairman and CEO] Nikesh [Arora] or even [Google CBO] Philipp [Schindler] who’s been running the business there for a long time. It’s a very good team, and I have to also say that they pioneered many of the techniques in things like incentive structures for inside sales organizations.

Google had every kind of sales motion possible. Absolutely self-serve is the big deal, it was a little less than 50%, but still it’s 50% of a very large number which was self-serve. We also had inside sales teams that would do things like call customers or email them with potential new campaigns that they could be running, new traffic, new conversions that they could be getting, and there were also account with name sales teams that did quarterly planning about how to increase the business, what their objectives were. So Google did everything and they did everything remarkably well.

Do you feel that was transferable or did you still have some things to learn?

SR: Every company is different. There are things that I have learned over the past year with respect to how enterprise sales runs. And in fact, Snowflake is a little unique because it is both a deal-oriented team, our customer signed contracts because in exchange for the guaranteed spend, they get a better deal on how we price the underlying compute. But there’s also the art of driving consumption with use cases and creating value. So Snowflake is always the yin and yang of consumption and deals. So there are new techniques here.

Google’s billing was always 30 days arrears kind of billing, it was always invoiced, that’s not quite how it works at Snowflake, but many of the techniques that I learned both optimizing Google Search but also working with the sales teams are techniques that I have adopted here. I’m a big fan of things like Boolean metrics for measuring efficiency where instead of measuring an average, if you have a team of 300, you want to know what fraction of your team is beyond a baseline of excellence that you set. So there are some techniques that transfer over and other new things that I’ve had to learn, but that’s life and that’s fun.

Snowflake and AI

Yeah. Well you said that’s why learning was in the bio. The pitch, the AI angle with Snowflake is super obvious, you have ideally all the data or at least some of the data that is important for business. But one thing that’s interesting to me, just thinking about Snowflake, I mean Snowflake was a big deal, it grew, got large before AI, and in many respects was well known for being easy to use and it’s a platform for direct data manipulation and analysis. Does this almost make AI a bit more of a challenge in a weird way, just because you are enabling humans who know what they’re doing and so the comparison, it’s not like, “Oh, you have a bunch of random data that’s been sitting around, now you can get usage out of it”, it’s, “You have a lot of data that we’ve worked with you to structure well”, so that people who know what they’re doing can go in and get great results and now AI almost has a higher standard to reach. Is that a fair way to think about it?

SR: It is one way to think about it, but remember, data at Snowflake is typically consumed through a set of people and tools, typically through things like BI tools or a notebook.

In my mind, AI produces two large changes for a company like Snowflake. One is it made data a lot more fungible, meaning your ability to analyze a PDF document let’s say, and extract structured data from it just got a whole lot easier because of things like multimodal models. Information is a lot more fungible than it was before and so part of what we did when we introduced the AI layer was make it super easy for people to access models just by writing SQL queries. So the analysts that were analyzing the data on Snowflake basically became AI-enabled analysts.

They worked the same way, just got a lot more data.

SR: They got a lot more data. But on the other hand, where we see a very big opportunity is these BI tools that typically stood between Snowflake and the end-business user, I think consumption can be a very different experience with AI, you don’t need to go through a specific BI tool. And my take for example is that at the end of the day, a BI tool is a 2D structure that is trying to represent a very complex multidimensional world and if you can imagine a dataset with just like 10 or 12 dimensions, trust me, there’s no simple dashboard that can represent that. But what you can now create is a way for humans to easily query that dataset, get the kind of aggregates that they want if they want that, or to be able to do follow-up analysis.

So I think it vastly expands the set of people that can get value from Snowflake data, but there is a big if, and it’s a little bit of a carryover in my life from even Neeva. One of the big things when we did AI with Neeva was we said we need cited answers, we don’t want search to hallucinate the same way that we could see the ChatGPT was hallucinating. We basically took the same techniques, we said Cortex Search is going to make it easy for people to ask questions of unstructured data but give citations. Similarly, we developed a product that could write SQL queries, could extract structured data, but we put in things like a feedback loop so that people can reliably get at answers. In fact, our watchword for AI on Snowflake is “Easy, efficient, and trusted”. To me, that’s the big need that is going to make AI truly useful on top of the structured and unstructured data that we have and that’s very much the lens that we have adapted so far.

The nice angle about this is this is building on strength. In other words, if you have strong stable operators that can get structured or unstructured information, now you can work on platforms that string them together into things. You can call them agentic workflows, but we’ve been very deliberate about taking a measured approach to AI at Snowflake and building on our strengths as opposed to randomly trying to reinvent ourselves into a foundation model company or let’s do cheap-inference-as-a-service kind of company. We have stayed true to our mission of helping people mobilize their data and AI is an accelerant on that.

Do we need some better metrics as an industry where if you look at a lot of the measurements for models, and your old employer just released Gemini 2.5, it’s scoring great on the metrics, and the metrics are all incremental metrics in that their scores are higher than the scores that came before. But do we need a negative metric where 100% is a human going in and doing a SQL query and you know the data is correctm and the AI can we measure if the AI goes and does it’s at 89 or it’s at 91 relative to a human, where’s that metric?

SR: I think this is exactly the kind of thing that we need. We have published metrics on Cortex Analyst, which is our product for unstructured data. And in fact, in very much the design aspect of Cortex Analyst that I was very involved in, part of what I told the team that we needed to think about was the precision-to-recall trade-off. As you know, search operates in this mode of pretending that it has infinite recall, it doesn’t matter what question you ask, Google is like, “Yep, I got an answer for that”, and it will never tell you how good the answer is and whether you should believe it or not.

That was beauty of the ten blue links that at the end of the day, you the human had to make the final decision about what was the answer.

SR: You are the human, you are one deciding that. Even though I do find that there are more cases where Google will tell you there are not great results for this query if you type in like dumb stuff, which is a little bit of a change over the past few years, I actually think that that’s a good change. But yes, early models of ChatGPT for sure would just answer any question that you asked even if they didn’t really have any information or any confidence about it.

So we very much build in, we call it a verified query repository of positive and negative examples for Cortex Analyst, so that you can pattern match both against positive examples that an analyst has vetted that this product should feel confident answering, but also a set of questions that we should not be answering.

Because roughly speaking, if you put a chat bot in front of a person, one of the first questions they’re going to ask is, “What will my revenue be 15 years from now?”, which no model has any business answering. So I think having metrics like that and making explicit trade-offs for when can you bring value is a really important aspect of any AI-driven product that simply doesn’t get enough attention.

What’s more valuable do you think? Maybe I’m probably leading the horse to water here, but to have say a GPT-3 level model with 99% reliability or a GPT-4.5 model with 95% reliability?

SR: I mean, I’ll give you the same answer. When people give me choices about speed or quality, my answer is, “Yes”.

(laughing) Well no, you have to choose one.

Models and Products

I mean you’re not building or publishing massive foundational models, and you actually were fretting in some public comments a year ago that, “Boy if it takes billions of dollars to make a model and we’re reduced to only a couple of model providers, that wouldn’t be great”. Has this year been a very exciting year in your perspective with these open source models that are so capable of coming out and are those models super critical to your business going forward?

SR: We work a lot with open source model providers. We have an excellent partnership with Meta and the Llama team. I think the amount of progress being made in the world of models, I think it’s been pretty remarkable, I think it also essentially prevents models from becoming an oligopoly. I think having a world in which there are only one or two big model providers or three is just not that great an outcome. I think last year has been pretty good from that perspective.

So where do you see your utilization of this happening? Do you see customers in the long run just switching these in and out willy-nilly? Do you need to do pretty intensive post-training on a per individual customer basis? You have a competitor that has their own model and their promise is to train a model for their customers, or is this just going to be fancy RAG with a verification system around it? I mean, where do you see leveraging your position but remaining competitive in the long run?

SR: I look at AI as a natural addition to what we do as a data company, and so this is why we build products that complement what we do. The search product for example, for unstructured data, along with embracing things like open formats. We’ve invested in a big way in connectors, so more data from more applications can be brought either into cloud storage or into Snowflake so that they’re readily available for query. Similarly, when it comes to structured data, we want to make it super easy for people to get at the structured data by asking natural language questions, but also being able to create things like dashboards on top of the data.

Where we are headed is now in how can you compose these different data elements, these agentic operators as they are, to build more complex workflows, and this was a point that I made earlier about how products got set into companies. As you know, there’s a very large ecosystem of system integrators that take products and then create real utility dealing with all of the detail and messiness that exists in every company. I see a world in which more and more of real use cases that you and I do is directly solvable on a platform, that’s the angle that we very much see Snowflake pushing in, it is driving workflows on top of data. I think we are redefining the line between product and services thanks to AI and thanks to agentic capabilities.

To answer your question, by the way, with respect to which model would I pick, the way I try to not have that be an either-or is for cases where you really want precise quantitative answers, you want a system that is tuned for maximum precision. If on the other hand, you’re dealing with a little bit more of an analytical task that does not have a fixed answer, you want a more powerful thinking model which will make more mistakes but will also come up with better plans. It’s a question of having the things that are in your foundation be reliable. So where I see Snowflake is building on top of the data platform, of the data estates that Snowflake has and using that to deliver faster value for our customers.

You sort of were driving at what to me was one of my big takeaways thinking about Snowflake, and Frank Slootman is a legend, it’s hard to take over for him. He had certain axioms, one of them was, “Narrow your focus”, I think is something he’s famous for. One thing that occurs to me however, is that if the overall Snowflake proposition is we make your data accessible and easier to work with, you can get much more utility out of it. There’s a user experience component to that, and often a way to deliver a better user experience is to be more integrated, to do more products. Sometimes if you were using Snowflake, you would hit a wall, “Sorry, you need to go get someone else to fill this product need”, and it’s like, “I like Snowflake, can’t you just do it for me?”.

You’re referring to that it sounds like in this regard, where there’s more stuff that the product should just be able to do instead of going outside. Have you felt like one thing you need to do is actually Snowflake needs to expand its focus a little bit here? It needs to be broader and needs to have — you need to not hit those walls as much as you did previously?

SR: For sure broader at the base. Snowflake restricted itself to essentially what’s called the gold analytic layer that data that has been cleaned, that has been processed that you can run analytics and machine learning on. I think what we have done absolutely with our embrace of open formats, with our embrace of connectors, is this ability to act on much larger amounts of data. We’ve made the bottom a whole lot beefier, which again plays to our core strength.

Does it though? I mean you have other folks that started out — you had the Warehouse versus Lakehouse, all the various — and you mentioned at the beginning about different competitors coming in at different angles. So you have a competitor that starts out very broad and that turns out to be great for AI because it deals well with unstructured data, Snowflake’s more narrow, but easier to use. Do you really feel your advantage in that case is going broader, or are you fighting on unfamiliar terrain and you have to get your data out of Snowflake actually to make it more accessible and that’s been a battle you’ve been having to fight?

SR: It’s not an either/or. I think there is, first of all, it’s important to understand that we are in the midst of a secular transition over from generations of legacy systems over to the cloud.

So your competitor is actually the data still in data centers on premise.

SR: There is a huge amount of that, this is a rapidly expanding business and so there is plenty more. And in areas like migrations, for example, you’ll be horrified at how difficult and how long some of these migrations take. Part of what we are very excited about is, “How do you apply similar techniques?”, essentially the AI-assisted human loops for making things like migrations go faster.

But back to your main point, though. I do think that the world of AI, the world of interactive consumption of information does create interesting new opportunities for products that are not competing with the Tableaus of the world, because that’s an entirely new category.

But back to your points about innovation that you have made in other podcasts, I think AI-driven consumption is going to be very broad and somewhat shallow to begin with. It is never going to have the sophistication of a dashboard that someone spent six months creating. On the other hand, many, many more users can get data way faster than needing to wait for an analyst.

Over time we are absolutely going to have a talk to my pivot chart widgets where you can manipulate a pivot table without really needing to know programming by simply saying, “Hey, drop this attribute as the X attribute”, or, “Compute a measure like this”, where you are more assistively creating things like this. Those are the opportunities that I think Snowflake is creating. And again, super aligned and complementary to our strengths as a data platform, we are not trying to reinvent ourselves to be some other new company.

The Enterprise Stack

You mentioned a couple times the combination of products and services, and it’s funny you mentioned system migrations. Are we in some respects going back to the old days where actually what needs to happen is people will go through this honeymoon period of, “Yeah, just throw an AI model at it”, but actually what is necessary is 6 month, 12 month, 18 month migrations, refashioning your data, and then the AI is then useful? It’s actually downstream of doing a lot of work up front that everyone thought we were done with. But now it’s back to the ’70s, back to the ’80s, as it were.

SR: No enterprise person is going to tell you that they’re done with their enterprise migration from on-prem systems. I challenge you, you can go talk to any large bank, they will all tell you that there is a ton of that work. What is different about today is I think we have the potential to dramatically decrease the timeline of these kinds of migrations. We are doing one migration for a very large bank that, it’s an 18-month migration. It’s easily the most important data set that they have and they’re terrified, and so are we, about making any mistake with that migration.

Isn’t there a bit, though, where if we end up in a world where migrations take a really long time and that is downstream from, “We just realized data needs to be really well-structured”, and that’s a big part of the process. Isn’t that a world that’s good for Snowflake because you’re on the more well-structured data side of things already?

SR: That is correct. That’s right.

So migrations for the win.

SR: Migrations 100% for the win. Migrations faster for the win. I’ve even met people that have told me, “I will have you start on one instance of this legacy database that we have, do a good job quick and you can have 50 of these”, and sometimes that ends up taking three months, which doesn’t sound like a long time, but 50 times three is a very long time.

Do you need an even larger service organization, then, going forward?

SR: I don’t think it’s a matter of people, I think it’s a matter of much better technology, and I fundamentally believe in the human-assisted AI loop where AI can solve classes of problems that are easy to solve and the difficult ones can be solved in a more assistive fashion. I think that can drive a lot of progress in these gnarly areas which do have a lot of corner cases.

How do you see this playing out in the long run? Obviously you’re going to be optimistic about Snowflake, but how do you overcome this? Your selling point to date has been relatively easy-to-use but totally predictable and having that be an advantage versus the pretty unstructured, but actually that’s a good thing because, it’s like there’s the old saying there back when people were less bullish about Netflix, “Can Netflix become HBO before HBO becomes Netflix?”, both ended up being fairly wrong in certain regards, but there’s a bit where, can Snowflake become unstructured and broad-based before unstructured and broad-based solutions become exact and useful and predictable for making your beautiful dashboard? Is that a fair way to think about the competition?

SR: Well, we are active players in this, we get to write the history as much as anyone else. We are not recipients of this history and I’ll argue that the characteristics that make Snowflake great, which is a simple-to-use, tightly-integrated product, is not something that you can post-fact bolt onto things.

I love the hyperscalers that we work with but I tell people that they’re really 300 GMs that are all competing to have their product line succeed. Nobody is thinking about, “What does it take to create one unified, tight data platform?”, that’s been our obsession from day one, and that is where we think we are set up to win. Obviously there are many other aspects of computing that the hyperscalers are going to be great at but, similar to how there are two or three great model makers in the world, even though the hyperscalers, again, clearly have more money than God, I think creating a tight unified data platform is hard.

And I’m biased, but I would bet my money any day on Snowflake becoming much better with unstructured data with a tight product than a sprawling product built on unstructured data becoming amazingly good and tight and easy to use on all data.

So if you have the hyperscalers, they’re better at infrastructure, they have advantages there, they’re going to end up sticking there, it’s actually harder to go up into the platform layer and you’re at the platform layer — that leaves the SaaS layer. And you mentioned before, people want to get their data out of their SaaS apps. At the same time, if you’re a SaaS app, that is pretty terrifying for similar reasons that it’s somewhat scary for you to lose control of the data that you have.

Do you see the market pressures on SaaS companies leading to this, where they will build integrations with Snowflake, more easily get their data into there? Or are you going to have to more vigorously apply that market pressure and having your own applications, your own vertical approaches that says, “Look, just switch over because they’re going to be slow, they’re hoarding the data. If you want it integrated, we have a better solution and it’s better because it’s tied to everything else”.

SR: First of all, we believe in partnerships. We work with a number of SaaS providers, we often have bidirectional data integration with them where our customers can bring data from the SaaS platform into Snowflake, but if they want to take a data set and put it into that SaaS platform for analytic purposes, we absolutely facilitate it.

I would actually say that when it comes to the SaaS players, whether it is ServiceNow or Salesforce or SAP, action has shifted over to, “What do agentic workflows mean for those products?” — there’s a reason why they are all stressing that part because I think agentic AI, agentic workflows offer an alternate to the people that are doing work on those platforms. This is part of the reason why they are leaning even more into these kinds of solutions. We work actively with them, it’s a large market. We have some customers that move data from Snowflake to those platforms, we have many others that bring their data from those platforms to Snowflake for central analysis and followup actions like we do.

But there is also another layer of action and interaction that is going on. As you know, the world of agentic AI is very confusing, it is hard to find an exact definition for what an agent is or what a component of an agent is, but there are efforts at making these things interoperable, so I do think we are looking to a world in which, for example, a company makes an agent that makes it easy to query one or take action on one data set, and that being interoperable with another agent that’s sitting on top of data that is in Snowflake.

In fact, we want to help people create these kinds of composite workflows. One of Snowflake’s amazing offerings is this thing called a Native Application, where a provider, say S&P Global, can not only ship data but also ship code as this native application into a customer deployment. Now this code cannot look at anything in the customer deployment other than what the customer says this app should look, but the customer cannot see any of S&P’s data, and now you have an application.

To do that, does that customer, it can look at data they have — that has to be on Snowflake?

SR: That has to be on Snowflake. But the customer has to grant permission to this app when they install it.

But the beautiful thing about a model like this is that this app can now take action and give insights based on both S&P’s own proprietary data and the data from the customer. So think S&P Global has great information about all stocks and let’s say your portfolio is sitting with some asset manager. Now this app can give a view of, “What are important metrics for your portfolio?”, that is now a mix of data that only the asset manager has and what S&P has.

Now think about how this model works in an agentic setup. You can now have agents, both from the customer side as well as the application provider side, that can come together to do meaningful orchestrations so I do think that there’s going to be integration at that level. In fact, we are working with Microsoft to have Snowflake Intelligence components be available as part of their Copilot and Power BI and things like that, so this is a rapidly changing world. And yes, the SaaS players have to lean into it, because I think agentic AI offers options for the combination of people and products that are their mainstay.

So what does success look like? If we’re talking again in five years and people are like, “Look, salute Frank, he took the company to IPO, but wow Sridhar really transformed what Snowflake is to be bigger and better and greater”, what does that Snowflake look like?

SR: As I said, I think we are in an era of unprecedented change and opportunity. We made $3.5 billion last year, growing at 30% and if we fulfill our mission to truly help enterprises mobilize data, we should be growing at faster than that clip for the next 10-ish years. I was part of Google Search growing from $1.5 to close to $100 billion the year I left. If you’re asking me about an audacious ten-year goal, that would be an audacious ten-year goal.

Headlines: Snowflake To Be The Next Google. That sounds like a great, great plan.

SR: (laughing) Clearly aspirational, but it’s also the journey along the way, Ben. I think creating a team that prizes excellence, that is really good at what it does, that can deal with change itself and help customers navigate change at this time of both opportunity and hype is also a pretty powerful mission and I’m reminded of that every time I talk to our customers, our sponsors within our customers, they put a lot of trust in us and to be that partner that is the data arm of what they do, I think that’s the real privilege.

What I tell people is that the most celebrated companies of the 21st century, like Google, like Meta took data almost as seriously as they took their main product. In fact, it’s those feedback loops that have created greatness and I tell our customers that it is our vision and my dream that Snowflake is that data partner for them to become that efficient and that insightful with their data as these great companies were. To me, fulfilling that mission with more and more customers is, I think, reward. But yes, monetarily or in terms of just growth, aspiring to things like mid-30s growth for a decade, that compounds.

Sridhar Ramaswamy, great to talk with you and I look forward to talking to you again soon.

SR: Thank you, Ben. Take care.

This Daily Update Interview is also available as a podcast. To receive it in your podcast player, visit Stratechery.

The Daily Update is intended for a single recipient, but occasional forwarding is totally fine! If you would like to order multiple subscriptions for your team with a group discount (minimum 5), please contact me directly.

Thanks for being a supporter, and have a great day!

![How One Brand Solved the Marketing Attribution Puzzle [Video]](https://contentmarketinginstitute.com/wp-content/uploads/2025/03/marketing-attribution-model-600x338.png?#)

![Building A Digital PR Strategy: 10 Essential Steps for Beginners [With Examples]](https://buzzsumo.com/wp-content/uploads/2023/09/Building-A-Digital-PR-Strategy-10-Essential-Steps-for-Beginners-With-Examples-bblog-masthead.jpg)

![How to Use GA4 to Track Social Media Traffic: 6 Questions, Answers and Insights [VIDEO]](https://www.orbitmedia.com/wp-content/uploads/2023/06/ab-testing.png)

![[HYBRID] ?? Graphic Designer](https://a5.behance.net/cbf14bc4db9a71317196ed0ed346987c1adde3bb/img/site/generic-share.png)