How Hebbia is building AI for in-depth research

A New York-based AI startup called Hebbia says it’s developed techniques that let AI answer questions about massive amounts of data without merely regurgitating what it’s read or, worse, making up information.

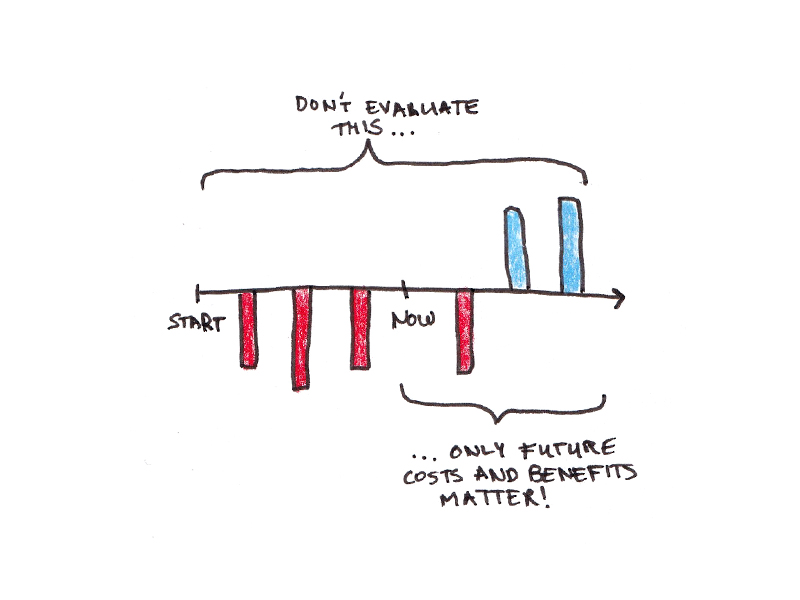

To help generative AI tools answer questions beyond the information in their training data, AI companies have recently used a technique called retrieval-augmented generation, or RAG. When users ask a question, RAG-powered AI typically uses a search-engine-style system to locate relevant information it has access to, whether that’s on the web or in a private database.

Then, that information is fed to the underlying AI model along with the user’s query and any other instructions, so the model can draw on it to formulate a response.

The problem, says Hebbia CEO George Sivulka, is that RAG can get too bogged down in keyword matches to focus on answering a user’s actual question. For instance, if an investor asks a RAG-powered system whether a particular company looks like a good investment, the search process might surface parts of the business’s financial filings using that kind of language, like favorable quotes from the CEO, rather than conducting an in-depth analysis based on criteria for picking a stock.
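To make the retrieve-then-generate loop concrete, here is a minimal sketch of a keyword-based RAG retriever and prompt builder. It is not how any particular product works: the scoring is a crude term count, the model call itself is left out, and the two “filings” for a hypothetical company, Acme, are invented for illustration. The example reproduces the failure mode Sivulka describes. The upbeat CEO quote shares more words with the question than the balance-sheet line that actually bears on it.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Lowercase and split into alphanumeric words.
    return re.findall(r"[a-z0-9]+", text.lower())

def keyword_retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by how often they contain the query's terms (a crude lexical score)."""
    query_terms = set(tokenize(query))

    def score(doc: str) -> int:
        counts = Counter(tokenize(doc))
        return sum(counts[t] for t in query_terms)

    return sorted(documents, key=score, reverse=True)[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    """Pack the retrieved passages and the user's question into one model input."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {query}"

# Invented toy documents for a hypothetical company.
filings = [
    "CEO letter: we believe Acme is a good investment for long-term shareholders.",
    "Balance sheet: Acme revenue fell 18% while total debt doubled.",
]
question = "Is Acme a good investment?"
# The CEO quote wins on word overlap, so the balance sheet never reaches the model.
print(build_prompt(question, keyword_retrieve(question, filings)))
```

Real systems typically replace the term count with BM25 or embedding similarity, but a match on wording or topic can still reward passages that sound like the question rather than ones that answer it.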

“Traditional RAG is good at answering questions that are in the data, but it fails for questions that are about the data,” Sivulka says.

It’s a problem that surfaces both in general-purpose AI-powered search engines, which can confidently regurgitate satire, misinformation, or off-topic information that matches a query, and in special-purpose tools. In one recent test of a legal AI tool, the system was asked to find notable opinions by a made-up judge and instead highlighted a case involving a party with a similar name.

And, according to Hebbia, questions that require analyzing a big data set, rather than simply finding the relevant documents in it, often can’t be answered by RAG alone.

Hebbia, says Sivulka, has approached the problem with a technique the company calls iterative source decomposition. That method identifies relevant portions of a data set or collection of documents using an actual AI model rather than mere keyword or textual similarity matching, feeding what it finds into a nested network of AI models that can analyze portions of the data and intermediate results together. Then, the system can ultimately come up with a comprehensive answer to a question.

“Technically, what it’s doing is running a LLM over every token that matters and then using that to feed that into another model, that feeds it into another model, and so it recurses all the way up to the top,” Sivulka says.
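Hebbia hasn’t published the details of iterative source decomposition, but the recursion Sivulka describes resembles a tree-shaped map-reduce over model calls. The sketch below assumes that shape: a model reads every chunk of the source, then groups of intermediate findings are fed into further model calls until a single answer remains. `Model` is a hypothetical stand-in for an LLM API, and `toy_model` is a deterministic placeholder that just sums the integers it sees, so the example runs offline.

```python
import re
from typing import Callable

# Hypothetical stand-in for an LLM call: prompt in, text out.
Model = Callable[[str], str]

def split_into_chunks(text: str, max_words: int) -> list[str]:
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def decompose_and_answer(question: str, text: str, model: Model,
                         max_words: int = 50, fan_in: int = 4) -> str:
    # Leaf level: run the model over every chunk of the source.
    results = [model(f"{question}\n\nSource:\n{c}")
               for c in split_into_chunks(text, max_words)]
    # Upper levels: feed groups of intermediate results into further model calls,
    # repeating until one answer reaches the top of the tree.
    while len(results) > 1:
        results = [
            model(f"{question}\n\nPartial findings:\n" + "\n".join(results[i:i + fan_in]))
            for i in range(0, len(results), fan_in)
        ]
    return results[0] if results else ""

def toy_model(prompt: str) -> str:
    # Placeholder "model": ignores the question and sums every integer in the body.
    body = prompt.split("\n\n", 1)[1]
    return str(sum(int(n) for n in re.findall(r"\d+", body)))

text = " ".join(f"deal {i}" for i in range(1, 101))  # 200 words -> 20 chunks of 10
print(decompose_and_answer("Sum the deal numbers.", text, toy_model, max_words=10, fan_in=4))
# prints 5050 (20 leaf calls, then 5, then 2, then 1)
```

With a real LLM, the leaf prompt would ask for findings relevant to the question and the merge prompt would ask the model to reconcile them; the fan-in controls how many partial results each upper-level call reads.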

Hebbia’s nested processing techniques also help get around the limits of AI context windows, which cap how much information can be provided to a language model in a single query, he says.
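A back-of-the-envelope way to see why nesting sidesteps the context-window limit: if each leaf chunk fits in one call and each merge call reads only a handful of short partial answers, no single call ever needs the whole data set. The helper below counts the levels and model calls such a tree would need. It is a sketch under simplifying assumptions: every partial answer has the same fixed size, prompt overhead is ignored, and the token figures in the example are hypothetical, not Hebbia’s.

```python
import math

def plan_tree(total_tokens: int, chunk_tokens: int, answer_tokens: int,
              context_window: int) -> tuple[int, int]:
    """Return (levels, model_calls) for a merge tree that never overflows the window."""
    if chunk_tokens > context_window:
        raise ValueError("each leaf chunk must fit in the context window")
    fan_in = context_window // answer_tokens  # partial answers one merge call can read
    if fan_in < 2:
        raise ValueError("context window too small to merge partial answers")
    width = math.ceil(total_tokens / chunk_tokens)  # number of leaf calls
    levels, calls = 1, width
    while width > 1:
        width = math.ceil(width / fan_in)  # each level shrinks by the fan-in
        levels += 1
        calls += width
    return levels, calls

print(plan_tree(1_000_000, 8_000, 1_000, 8_000))  # prints (4, 144)
```

In that example a million-token corpus becomes 125 leaf calls, merged 8 at a time into 16, then 2, then one final answer, while no call reads more than 8,000 tokens.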

Hebbia announced a $130 million Series A funding round in July and claims clients like the U.S. Air Force, law firm Gunderson Dettmer, and private equity firms Charlesbank and Cinven. Sivulka says various clients harness the company’s technology to answer complex questions about financial data for potential investments or to search for valuable information buried deep in voluminous legal discovery data sets, among numerous other use cases. The tool can also be configured to notify users when new data enables it to draw new conclusions, such as when new financial filings by a particular company appear online, Sivulka says.
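The alerting feature can be pictured as a standing question that is re-run whenever documents arrive, with a notification only when the answer changes. The class below is a hypothetical sketch of that idea, not Hebbia’s implementation: `answer_fn` stands in for whatever analysis produces the answer, and the toy function and filings in the example are invented.

```python
from typing import Callable, Optional

# Hypothetical signature: (question, all documents so far) -> answer text.
AnswerFn = Callable[[str, list[str]], str]

class StandingQuestion:
    """Re-answer a question as documents arrive; report only answers that change."""

    def __init__(self, question: str, answer_fn: AnswerFn) -> None:
        self.question = question
        self.answer_fn = answer_fn
        self.documents: list[str] = []
        self.last_answer: Optional[str] = None

    def add_documents(self, new_docs: list[str]) -> Optional[str]:
        self.documents.extend(new_docs)
        answer = self.answer_fn(self.question, self.documents)
        # The first answer only sets a baseline; later ones notify if different.
        changed = self.last_answer is not None and answer != self.last_answer
        self.last_answer = answer
        return f"New conclusion for {self.question!r}: {answer}" if changed else None

def toy_answer(question: str, docs: list[str]) -> str:
    # Placeholder analysis: flags any filing that mentions going-concern doubt.
    return "yes" if any("going concern" in d.lower() for d in docs) else "no"

watch = StandingQuestion("Any going-concern warnings?", toy_answer)
watch.add_documents(["Q1 filing: revenue up 4%."])  # baseline, returns None
watch.add_documents(["Q2 filing: revenue flat."])   # answer unchanged, returns None
print(watch.add_documents(["10-K: substantial doubt about continuing as a going concern."]))
# prints New conclusion for 'Any going-concern warnings?': yes
```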

Demand is high enough that Hebbia, which uses models from big providers like OpenAI and Anthropic, has developed its own software to maximize the number of queries it can send to different models. Its system even takes into account the different rate limits that apply to, say, GPT access through Microsoft and through OpenAI directly.
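Hebbia hasn’t said how its dispatcher works. One common way to respect per-provider limits is a token bucket for each route, sending each request wherever the most headroom remains. The sketch below assumes that approach; the endpoint names and requests-per-minute figures are hypothetical, and time is passed in explicitly so the behavior is easy to follow.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Endpoint:
    """One route to a model, with its own requests-per-minute budget (token bucket)."""
    name: str
    requests_per_minute: int
    available: float = field(init=False)
    last_refill: float = field(default=0.0, init=False)

    def __post_init__(self) -> None:
        self.available = float(self.requests_per_minute)  # start with a full bucket

    def refill(self, now: float) -> None:
        # Add back capacity in proportion to elapsed seconds, capped at the limit.
        elapsed = now - self.last_refill
        self.available = min(float(self.requests_per_minute),
                             self.available + elapsed * self.requests_per_minute / 60.0)
        self.last_refill = now

class Router:
    """Send each request to whichever endpoint currently has the most headroom."""

    def __init__(self, endpoints: list[Endpoint]) -> None:
        self.endpoints = endpoints

    def acquire(self, now: float) -> Optional[str]:
        for ep in self.endpoints:
            ep.refill(now)
        best = max(self.endpoints, key=lambda ep: ep.available)
        if best.available < 1.0:
            return None  # every route is rate-limited; the caller should wait
        best.available -= 1.0
        return best.name

# Hypothetical routes to the same model with different limits.
router = Router([Endpoint("gpt-via-azure", 2), Endpoint("gpt-via-openai", 1)])
print([router.acquire(now=0.0) for _ in range(4)])
# prints ['gpt-via-azure', 'gpt-via-azure', 'gpt-via-openai', None]
```

A production version would also track token-per-minute quotas and retry on provider errors, but the routing decision has the same shape.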

And, says Sivulka, demand continues to increase, as customers use Hebbia’s software to consider larger data sets than previously would have been possible in making decisions. The company says it’s handled 250 million queries from users so far this year, compared to 100 million last year.

“If you’re an investor and you’re building a case to invest or not to invest, you can look at—and corroborate your assumptions with—way more data,” Sivulka says.